Skynet This Year 2018

A roundup of notable AI news from 2018 that is still relevant today

Since it started this May, our Skynet This Week newsletter has been providing a digest of important AI news on a (roughly) bi-weekly basis. This edition collects the most noteworthy news from all 14 editions of Skynet This Week that is still worth knowing about today, and presents a little roundup of all the other writing we’ve put out this year. Big thanks to contributors Limor Gultchin, Alex Constantino, Viraat Aryabumi, Arnav Arora, and Lana Sinapayen for making this possible! And as always, feel free to provide feedback, suggest coverage, or express interest in helping.

- Skynet Today This Year

- Advances & Business

- Boston Dynamics is going to start selling its creepy robots in 2019

- A team of AI algorithms just crushed humans in a complex computer game

- AI bots are getting better than elite gamers

- Artificial intelligence and the rise of the robots in China

- The poet in the machine: Auto-generation of poetry directly from images through multi-adversarial training – and a little inspiration

- Germany plans 3 billion in AI investment: government paper

- Montezuma’s Revenge can finally be laid to rest as Uber AI researchers crack the classic game

- Concerns & Hype

- Why AI will not replace radiologists

- Emergency Braking Was Disabled When Self-Driving Uber Killed Woman, Report Says

- ‘The discourse is unhinged’: how the media gets AI alarmingly wrong

- Strategic Competition in an Era of Artificial Intelligence

- UK cops run machine learning trials on live police operations. Unregulated. What could go wrong?

- In Advanced and Emerging Economies Alike, Worries About Job Automation

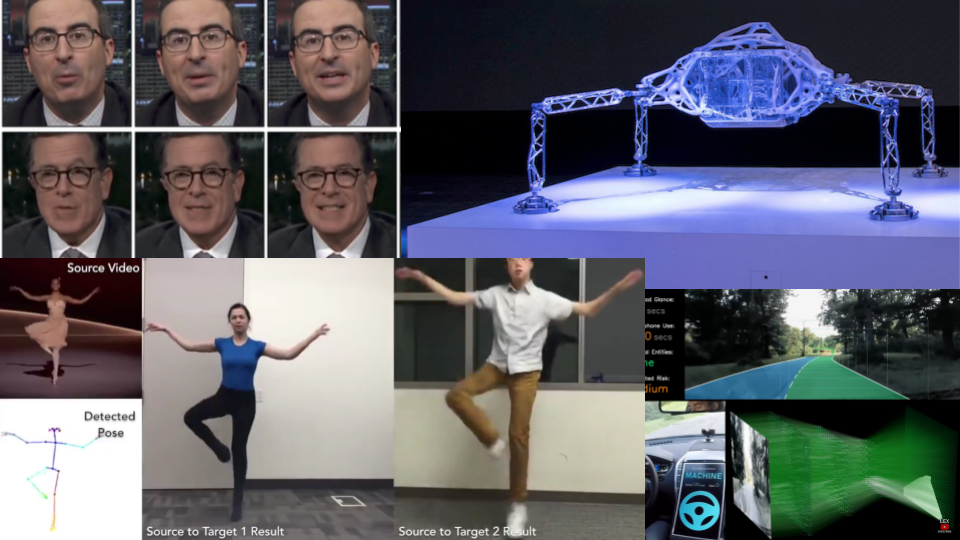

- In the Age of A.I., Is Seeing Still Believing?

- Analysis & Policy

- An Overview of National AI Strategies

- Lethal Autonomous Weapons Pledge

- What Algorithmic Art can teach us about Artificial Intelligence

- Defense Department pledges billions toward artificial intelligence research

- Women Breaking Barriers in A.I.

- Fed Scours Data for Signs of a Robot Takeover

- Facial recognition technology: The need for public regulation and corporate responsibility

- The Impact of Artificial Intelligence on the World Economy

- Expert Opinions & Discussion within the field

- AI researchers allege that machine learning is alchemy

- AI researchers should help with some military work

- What Happens if AI Doesn’t Live Up to the Hype?

- Machine learning will be the engine of global growth

- Steps Toward Super Intelligence I, How We Got Here

- Q&A with Yoshua Bengio on building a research lab

- Economics Nobel Prize Winner Sees No Singularity on the Horizon

- The compute and data moats are dead

- Artificial Intelligence Hits the Barrier of Meaning

- Explainers

- NLP’s ImageNet moment has arrived

- The man who invented the self-driving car (in 1986)

- Yann LeCun: An AI Groundbreaker Takes Stock

- The Great AI Paradox

- Machine Translation. From the Cold War to Deep Learning

- This quirky experiment highlights AI’s biggest challenges

- Inside the world of AI that forges beautiful art and terrifying deepfakes

- Favourite Videos

- Favourite Tweets

- Favorite memes

Skynet Today This Year

Before we get into the news proper, the end of the year presents a good opportunity to highlight all the writing we have done to explain AI in more depth than we do with the digests.

Briefs

The site was started with the primary intention of writing briefs on AI news - concise yet thorough articles that summarize and comment on an important recent AI story. This site publicly launched little more than 8 months ago, but we managed to finish 14 articles this year. Not bad! And here they all are for your browsing:

- OpenAI’s Not So Open DotA AI - An impressive demo by OpenAI leaves many questions unanswered

- Deepfakes - Is Seeing Still Believing? - Has widespread misuse of AI arrived? Not quite yet…

- Can a "Google AI" Build Your Genome Sequence? - A new AI-powered tool from Google promises more-accurate genome sequences, but its impact on genomics research remains to be seen

- Is Data-Driven AI Brainwashing us all, or is it Just the Same as Good ol’ Marketing? - The many claims made as part of the recent Cambridge Analytica & Facebook scandal, reviewed

- Tesla’s Lethal Autopilot Crash — A Failing of UI as Much as AI - The tragedy might have been avoided if the limitations of the Autopilot were communicated more clearly

- So What Was Up With Alexa’s Creepy Laughter Anyway? - A funny viral event speaks to our ever-increasing anxiety about AI and pervasive technology

- Biased Facial Recognition - a Problem of Data and Diversity - Flashy headlines often hijack meaningful and important conversations on this topic, even when the articles are solid, as was the case here

- ‘Do You Trust This Computer’ Gets a Whole Lot Wrong About AI Risks - A Chris Paine documentary about AI promoted by Elon Musk conflates real AI risks with imaginary ones

- Evil Software Du Jour: Google’s Cocktail Party Algorithm - Recent privacy concerns over work from Google showcased how easy it is for media to immediately jump to unlikely worst-case outcomes

- Google Translate’s ‘Sinister Religious Prophecies’, Demystified - Yet again, an unremarkable and well understood aspect of an AI system has been made out to be creepy and hard to explain

- Amazon Rekognition Mistook Congressmen for Criminals? A Closer Look - Examining ACLU’s claims of racial bias in face recognition technology as surveillance risk

- Examining Henry Kissinger’s Uninformed Comments on AI - Another public figure with no expertise in AI makes sweeping, unfounded statements about it threatening humanity

- Google’s LYmph Node Assistant - a Boost, not Replacement, for Doctors - A new tool from Google promises 99 percent accuracy in identifying cancer in lymph nodes - but it’s too early to claim it surpasses humans

- Sophia the Robot, More Marketing Machine Than AI Marvel - The first robot to be granted citizenship and a visa, Sophia does not have much to offer in terms of technology

Big thanks to authors Joshua Morton, Alex Constantino, Ben Shih, Viraat Aryabumi, Limor Gultchin, Lana Sinapayen, Aidan Rocke, Julia Gong, and Sneha Jha!

Editorials

But not all topics fit into the short format of a digest, and that’s why we have editorials: longer, more in-depth pieces that are more opinionated and less news-specific. Here too we did pretty well, with 12 articles, and here they all are:

- Why Skynet Today - We are working on the Snopes for AI — here is why

- AlphaGo Zero Is Not A Sign of Imminent Human-Level AI - Why DeepMind’s Go playing program is not about to solve all of AI

- Call for Collaborators and Submissions - Know about AI and agree with this site’s mission? Contribute!

- Skynet Yesterday, Today and Tomorrow - The evolution of discussions about AI has never been as fast-paced as it is now, and so we too have been up to a lot lately

- Artificial Intelligence: Think Again - AI has a public relations problem, and AI researchers should do something about it

- Autonomous Driving, Both Close and Far from Ubiquity - Autonomous vehicle technology has made huge advances in the last couple of years — what’s left to solve? A whole lot.

- Has AI surpassed humans at translation? Not even close! - Neural network translation systems still have many significant issues which make them far from superior to human translators

- Inside an AI Conference - Robotics Science and Systems - What do AI researchers do at conferences? Check this out to find out!

- Why We Find Self-Driving Cars So Scary - Even if autonomous cars are safer overall, the public will accept the new technology only when it fails in predictable and reasonable ways

- The singularity isn’t here yet. Biased AI is. - A summary of what AI bias is, why we should care, and what we can and are doing about it

- Symbiotic human-level AI: fear not, for I am your creation - Human-level AI may well be possible — and we may not have to fear it

- Why Your AI Might Be Racist - On the risks of enshrining all sorts of injustices into computer programs, where they could fester undetected in perpetuity.

Big thanks to contributors Jerry Kaplan, Olaf Witkowski, Sharon Zhou, Limor Gultchin, Apoorva Dornadula, and Henry Mei!

Advances & Business

Boston Dynamics is going to start selling its creepy robots in 2019

Quartz | from the May 14th edition

Apparently, “The robot apocalypse has been tentatively scheduled for late 2019”. The robots in focus are products of Boston Dynamics, now a SoftBank company, which plans to start selling them in 2019. Where to start? Perhaps at the start: that first sentence is obviously hyperbolic, and though it may be in jest, given the amount of anxiety about AI and robotics that may not be the best tack to take. Towards the end of the piece the author speculates ‘It’s easy to see how a robot like this could be used in office security, or trained to hunt and kill us. (Oh wait, that was Black Mirror.)’ It’s easy to see why this piece earned itself a place in our downright silly section; the gap between a robot dog with 90 minutes of battery life and a Terminator-esque apocalypse is, well, significant.

A team of AI algorithms just crushed humans in a complex computer game

Will Knight, MIT Tech Review | from the July 16th edition

OpenAI has followed up on its 2017 achievement of beating pros at a 1v1 variation of the popular strategy game Dota with a far more impressive feat: beating a team of human players at the much more complex 5v5 version of the game. Interestingly, the achievement was reached without any fundamental algorithmic advances, as OpenAI explains:

“OpenAI Five plays 180 years worth of games against itself every day, learning via self-play. It trains using a scaled-up version of Proximal Policy Optimization running on 256 GPUs and 128,000 CPU cores … This indicates that reinforcement learning can yield long-term planning with large but achievable scale — without fundamental advances, contrary to our own expectations upon starting the project.”

Though the result is definitely impressive, it should be remembered that these systems took hundreds of human lifetimes’ worth of play to train, just to master one game. So, just as with prior achievements in Go, they represent the success of present-day AI at mastering single narrow skills using a ton of computation, not the ability to match humans at learning many skills with much less experience.
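For readers curious what the Proximal Policy Optimization (PPO) mentioned in OpenAI’s quote actually optimizes, here is a minimal sketch of the clipped surrogate objective from the original PPO paper (Schulman et al., 2017). Each sample contributes min(r·A, clip(r, 1−ε, 1+ε)·A), where r is the ratio of the new to the old policy’s probability for the chosen action and A is an advantage estimate; clipping limits how far a single update can move the policy. This is only the textbook objective with the commonly used ε = 0.2, the function name is our own, and it says nothing about how OpenAI scaled PPO up to 256 GPUs and 128,000 CPU cores.

```python
def ppo_clip_objective(ratios, advantages, epsilon=0.2):
    """Mean clipped surrogate objective L^CLIP (to be maximized).

    ratios[i]     = pi_new(a_i | s_i) / pi_old(a_i | s_i)
    advantages[i] = estimated advantage of taking action a_i in state s_i
    """
    if len(ratios) != len(advantages) or not ratios:
        raise ValueError("need equal-length, non-empty inputs")
    total = 0.0
    for r, a in zip(ratios, advantages):
        # Clip the probability ratio to [1 - epsilon, 1 + epsilon] ...
        clipped_r = min(max(r, 1.0 - epsilon), 1.0 + epsilon)
        # ... and keep the pessimistic (smaller) of the two surrogate terms.
        total += min(r * a, clipped_r * a)
    return total / len(ratios)


# A ratio of 1.5 on a good action is capped at 1.2, and a ratio of 0.5 on a bad
# action is scored as if it were 0.8, so neither sample rewards overshooting.
print(ppo_clip_objective([1.5, 0.5], [1.0, -1.0]))
```

In a real trainer this quantity would be computed on batches of tensors and maximized by gradient ascent; the plain-Python loop is just meant to make the clipping rule easy to read.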

AI bots are getting better than elite gamers

Oscar Schwartz, The Outline | from the August 13th edition

The Outline has an accessible piece on OpenAI’s Dota victories. It does a great job of positioning the achievement relative to others like IBM’s Watson and DeepMind’s AlphaGo.

Artificial intelligence and the rise of the robots in China

Gordon Watts, Asia Times | from the August 13th edition

Asia Times has a fascinating article about the adoption of robots in China, including a robotic teaching assistant for preschools and a package delivery robot. This complements a recent piece by the New York Times showing that China may be diving head first into consumer robotics.

The poet in the machine: Auto-generation of poetry directly from images through multi-adversarial training – and a little inspiration

Microsoft Research Blog | from the November 5th edition

The point of this research is not to have AI replace poets. It’s about the myriad ways in which even mildly creative AI could augment human creative activity and achievement. Although the researchers acknowledge that truly creative AI is still very far away, the boldness of their project and its encouraging results are inspiring.

A team of researchers at Microsoft Research Asia set out to train a model that generates poems directly from images, using an end-to-end approach. Generating accurate captions from images is itself still an “unsolved” NLP problem, but the researchers attempted something even harder to pave the way for future research in this domain. In the process, they also assembled two poem datasets using human annotators.

Germany plans 3 billion in AI investment: government paper

Holger Hansen, Reuters | from the November 19th edition

Germany is planning to invest 3 billion euros in AI R&D through 2025, as outlined in a new “AI made in Germany” strategy paper. Governments around the world are paying more attention to the development of AI, noting its broad applications in many sectors across industry, healthcare, and the military. While the U.S. has yet to draft a national AI strategy, China released its own in 2017 and France did so in early 2018. An AI strategy is especially urgent for Germany, as its car industry, an important engine of German economic growth, is falling behind its counterparts in the U.S. and China in the development of self-driving (and electric) vehicles.

Montezuma’s Revenge can finally be laid to rest as Uber AI researchers crack the classic game

Katyanna Quach, The Register | from the December 10th edition

You probably don’t remember the 1984 Atari platformer Montezuma’s Revenge, but the game’s difficulty continues to haunt AI researchers 34 years later. The game poses a fierce challenge for AI algorithms because its rewards are delayed, often requiring many intermediate steps before the player can advance. Last week, Uber announced that it had beaten the game using a new reinforcement learning algorithm called Go-Explore, which does not rely on the computationally expensive neural networks favored by DeepMind and OpenAI.
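To make the idea behind Go-Explore a little more concrete, here is a minimal, hypothetical sketch of its exploration phase as Uber described it: keep an archive of “cells” (coarse summaries of states), repeatedly pick a cell, return to it by restoring its saved state, and explore randomly from there, adding any newly reached cells to the archive. Everything concrete below is our own assumption for illustration: the toy corridor environment standing in for a sparse-reward game, the `cell_of` downscaling, uniform cell selection, and all parameter values. It is not Uber’s code, and it leaves out the later “robustification” phase entirely.

```python
import random

# Hypothetical toy environment: a deterministic corridor of positions 0..GOAL.
# The only reward sits at the far end, loosely mimicking a sparse-reward game.
GOAL = 30


def step(position, action):
    """Move left (-1) or right (+1), clamped to the corridor; reward 1 only at GOAL."""
    new_position = min(max(position + action, 0), GOAL)
    reward = 1 if new_position == GOAL else 0
    return new_position, reward


def cell_of(position):
    """Coarse 'cell' for a state (assumed downscaling: two positions per cell)."""
    return position // 2


def go_explore(iterations=5000, explore_steps=10, seed=0):
    """Sketch of a Go-Explore-style exploration phase on the toy corridor.

    Returns the archive (cell -> (state, score, trajectory length)) and
    whether the rewarding goal was ever reached.
    """
    rng = random.Random(seed)
    archive = {cell_of(0): (0, 0, 0)}
    for _ in range(iterations):
        # 1. Select a cell (uniformly here; the original work weights cells
        #    with count-based heuristics that favor rarely visited ones).
        state, score, length = archive[rng.choice(list(archive))]
        # 2. "Go": return to the cell by restoring its saved state
        #    (this relies on a deterministic, resettable simulator).
        # 3. "Explore": take random actions from there.
        for _ in range(explore_steps):
            state, reward = step(state, rng.choice((-1, 1)))
            score += reward
            length += 1
            cell = cell_of(state)
            best = archive.get(cell)
            # Keep this state if its cell is new, it scores higher,
            # or it reaches the cell with a shorter trajectory.
            if best is None or score > best[1] or (score == best[1] and length < best[2]):
                archive[cell] = (state, score, length)
            if reward > 0:
                return archive, True
    return archive, False


archive, solved = go_explore()
print(f"goal reached: {solved}, cells discovered: {len(archive)}")
```

By contrast, an agent that always restarted from position 0 with the same 10-step budget could never get past position 10. Remembering promising states and returning to them is what lets exploration keep pushing the frontier outward, which is the intuition Uber’s researchers highlighted for games with delayed rewards.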

Concerns & Hype

Why AI will not replace radiologists

Dr. Hugh Harvey, Medium | from the June 18th edition

The popular press is abuzz with proclamations that AI will consume all human jobs, starting with those that involve manual labor and repetition; experts suggest this would create severe problems for people who cannot afford to transition out of those positions. In the medical field, AI experts praise the ability of machines to perform faster and better diagnostics than their human radiologist counterparts. But will AI fully replace radiologists? One doctor thinks not.

Emergency Braking Was Disabled When Self-Driving Uber Killed Woman, Report Says

Daisuke Wakabayashi, NYTimes | from the June 18th edition

This story came out when the National Transportation Safety Board released its initial report on the pedestrian fatality in Uber’s self-driving car program. Because Uber’s system had a tendency to detect non-existent obstacles, the company disabled two emergency braking features. It also operated its self-driving cars with only a single human safety driver, requiring that driver to simultaneously watch the road and monitor the self-driving system. The incident illustrates the importance of redundant safety features in safety-critical AI systems.

‘The discourse is unhinged’: how the media gets AI alarmingly wrong

Oscar Schwartz, The Guardian | from the July 30th edition

Oscar Schwartz, a freelance writer and researcher who earned his PhD with a thesis uncovering the history of machines that write literature, has written a nice summary of the current state of hype-infused AI coverage and the issues with it (a sentiment we here at Skynet Today can clearly get behind). He suggests journalists should be more cautious in their reporting and seek the aid of researchers, but also cautions that incentives and popular culture make this a difficult problem to solve:

While closer interaction between journalists and researchers would be a step in the right direction, Genevieve Bell, a professor of engineering and computer science at the Australian National University, says that stamping out hype in AI journalism is not possible. Bell explains that this is because articles about electronic brains or pernicious Facebook bots are less about technology and more about our cultural hopes and anxieties.

Strategic Competition in an Era of Artificial Intelligence

Michael Horowitz, Elsa B. Kania, Gregory C. Allen and Paul Scharre, Center for a New American Security | from the August 13th edition

Andrew Ng received some flak for saying that AI is the “new electricity,” but the Center for a New American Security treats this idea seriously with a detailed exploration of past industrial revolutions. We are personally not totally sold on the idea, but it’s very much worth the read.

UK cops run machine learning trials on live police operations. Unregulated. What could go wrong?

Rebecca Hill, The Register | from the September 24th edition

“We need to be very careful that if these new technologies are put into day-to-day practices, they don’t create new gaming and target cultures,”

The Royal United Services Institute (RUSI), a defense and security think tank, published a report on the use of machine learning in police decision-making. The report says that the impact of ML-driven tools, including the effects of algorithmic bias, is hard to predict. It seems, however, that police in the UK continue to use these tools.

In Advanced and Emerging Economies Alike, Worries About Job Automation

Richard Wike and Bruce Stokes, Pew Research Center | from the October 10th edition

This article reports the pessimistic results of a Pew opinion survey on the future impact of automation on the job market, conducted in 9 countries. In all countries, most people believe that in 50 years robots will do much of the work currently done by humans, while few believe that advances in automation will create new jobs (we would like to point out that this last opinion does not seem to be borne out by historical data, as both factory machines and computers did create a substantial share of what we think of as “modern jobs”). People are also worried that automation will worsen inequality, and do not believe that it will improve the economy.

In the Age of A.I., Is Seeing Still Believing?

Joshua Rothman, The New Yorker | from the November 19th edition

Image forgery has a long history. Will algorithmically assisted fakes change anything about our media landscape? The New Yorker delivers a level-headed analysis of what will probably change, for better and for worse.

Analysis & Policy

An Overview of National AI Strategies

Tim Dutton, Medium | from the July 16th edition

The race to become the leading country in AI is on. Russian president Vladimir Putin has said “Whoever becomes the leader in this (AI) sphere will become the ruler of the world”. Various countries have developed national strategies for AI, and Tim Dutton, an AI policy researcher at CIFAR, summarizes the key policies and goals of each one. Comparing the perspectives of different countries on AI makes for an interesting read.

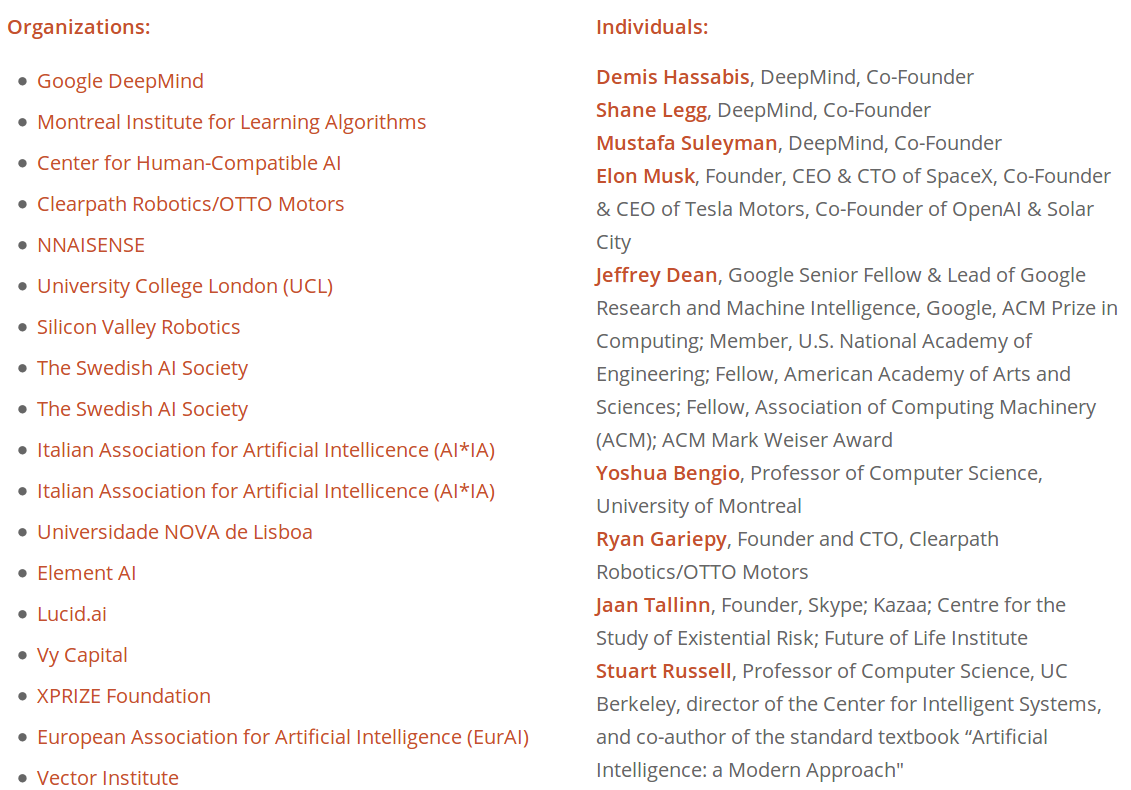

Lethal Autonomous Weapons Pledge

Multiple authors, Future of Life Institute | from the July 30th edition

A large number of top AI researchers and institutions have signed a pledge stating that

“Artificial intelligence (AI) is poised to play an increasing role in military systems. There is an urgent opportunity and necessity for citizens, policymakers, and leaders to distinguish between acceptable and unacceptable uses of AI. In this light, we the undersigned agree that the decision to take a human life should never be delegated to a machine.”

and furthermore:

“We, the undersigned, call upon governments and government leaders to create a future with strong international norms, regulations and laws against lethal autonomous weapons. These currently being absent, we opt to hold ourselves to a high standard: we will neither participate in nor support the development, manufacture, trade, or use of lethal autonomous weapons. We ask that technology companies and organizations, as well as leaders, policymakers, and other individuals, join us in this pledge.”

The pledge has already been signed by 223 organizations and 2852 individuals, including notable figures in AI such as DeepMind’s founders, Yoshua Bengio, and many more.

What Algorithmic Art can teach us about Artificial Intelligence

James Vincent, The Verge | from the August 27th edition

Tom White, a lecturer in computational design at Victoria University of Wellington in New Zealand, uses a “Perception Engine” to make abstract shapes that computer vision systems identify as objects from the ImageNet dataset. James Vincent from The Verge looks at the ways in which White’s algorithmic art is being perceived by researchers, artists and the general public.

Defense Department pledges billions toward artificial intelligence research

Drew Harwell, Washington Post | from the September 10th edition

DARPA has announced plans to invest $2 billion in various programs aiming to advance artificial intelligence. These programs will be in addition to the existing 20-plus programs dedicated to AI, and will focus on logistical, ethical, safety, and privacy problems, as well as explainable AI. This move will put the US at odds with Silicon Valley companies and academics who have been vocal about not developing AI for military use.

“This is a massive deal. It’s the first indication that the United States is addressing advanced AI technology with the scale and funding and seriousness that the issue demands,” said Gregory C. Allen, an adjunct fellow specializing in AI and robotics for the Center for a New American Security, a Washington think tank. “We’ve seen China willing to devote billions to this issue, and this is the first time the U.S. has done the same.”

Women Breaking Barriers in A.I.

Jocelyn Blore, OnlineEducation.com | from the October 10th edition

This article discusses current numbers, data-based conclusions and statistics about women in AI-related fields, and ends with case studies of tech institutions that successfully brought their share of women to around 50% or more. The systematic backing of every claim with supporting studies and the final practical advice are particularly useful.

Fed Scours Data for Signs of a Robot Takeover

Jeanna Smialek, Bloomberg | from the November 5th edition

Although worries that AI and automation will lead to widespread unemployment are common, the precursor to that (current workers becoming more productive and able to do more work aided by AI-powered tools) has been curiously missing. The Fed has been looking into this, and has found that the effects of AI on productivity may be tricky to find in the numbers and may also take a while to be fully felt:

“In the footnotes of his speech last week, Clarida cited “Artificial Intelligence and the Modern Productivity Paradox: A Clash of Expectations and Statistics,” a study by Massachusetts Institute of Technology economist Brynjolfsson and co-authors Daniel Rock and Chad Syverson. In it, the trio suggest that the “most impressive” capabilities of AI haven’t yet diffused widely.”

Facial recognition technology: The need for public regulation and corporate responsibility

Brad Smith, Microsoft Blog | from the November 19th edition

The article highlights how artificial intelligence has become embedded in our professional and personal lives today, and presents both positive and negative implications this could have. One such technology is facial recognition, which on the one hand could help locate a missing child and on the other could invade the privacy of millions of people by tracking them at all times. The article discusses the role of government in regulating these technologies and the responsibility of the tech sector to put effort into mitigating bias and developing ethical products.

The Impact of Artificial Intelligence on the World Economy

Irving Wladawsky-Berger, The Wall Street Journal | from the November 19th edition

“Artificial intelligence has the potential to incrementally add 16 percent or around $13 trillion by 2030 to current global economic output – an annual average contribution to productivity growth of about 1.2 percent between now and 2030, according to a September, 2018 report by the McKinsey Global Institute on the impact of AI on the world economy.”

A nice summary of recent projections about the worldwide economic impacts of AI over the next decade. The summary highlights key takeaways, the biggest of which are that AI will have a relatively large impact on the world economy, but that it may also widen inequality between those with a head start and those who do not yet have much expertise in AI:

“The economic impact of AI is likely to be large, comparing well with other general-purpose technologies in history,” notes the report in conclusion. “At the same time, there is a risk that a widening AI divide could open up between those who move quickly to embrace these technologies and those who do not adopt them, and between workers who have the skills that match demand in the AI era and those who don’t. The benefits of AI are likely to be distributed unequally, and if the development and deployment of these technologies are not handled effectively, inequality could deepen, fueling conflict within societies.”
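As a rough back-of-the-envelope check on the McKinsey figures quoted above (our own arithmetic, not the report’s), and assuming the period runs from 2018 to 2030, i.e. 12 years: compounding a 1.2 percent annual contribution gives a cumulative gain in the same ballpark as the headline 16 percent, and the two headline numbers together imply a baseline global output of roughly $81 trillion.

```latex
\begin{aligned}
(1 + 0.012)^{12} &\approx 1.154 \;\Rightarrow\; \text{cumulative gain} \approx 15\%,\\
\frac{\$13\ \text{trillion}}{0.16} &\approx \$81\ \text{trillion (implied baseline output)}.
\end{aligned}
```

The small gap between 15 and 16 percent is unsurprising given rounding in the headline figures and that the report’s 1.2 percent is an average, not a constant yearly rate.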

Expert Opinions & Discussion within the field

AI researchers allege that machine learning is alchemy

Science | from the May 14th edition

While media and public discussion about AI is heating up, spiced with the occasional bitter criticism, it seems the controversies don’t stop at the doors of AI researchers. A fervent internal discussion about scientific rigor and standards in the field is happening at the same time, as notable AI experts voice concerns about the quality of research being published. An article in Science covered part of this discussion, pointing to a talk given by Ali Rahimi, a Google AI researcher, who alleged that machine learning has become a form of ‘alchemy’. A couple of weeks ago, he and a few colleagues presented a paper on the topic at the International Conference on Learning Representations in Vancouver. The allegations (that researchers can’t tell why certain algorithms work and others don’t, for example) have sparked much debate in the field. And a worthy debate it is, including substantial rebuttals, e.g. from Yann LeCun, who argued that AI is not alchemy but engineering, which is by definition messy. Check out the links for more information.

AI researchers should help with some military work

Gregory C. Allen, Nature | from the June 18th edition

When news leaked that Google was working with the military to analyze drone footage using AI, there was plenty of uproar, with thousands of employees signing a petition demanding that Google stop. Subsequently, Google released a set of principles for developing AI systems, stating that it would not develop AI for use in weapons. Greg Allen argues that an all-or-nothing attitude towards working with the military is not only a security risk but could also hold back the use of AI as a defensive measure. He proposes a more nuanced approach that would help the military use AI in ethical ways.

I am pretty much in agreement with the nuanced article by Greg Allen of @CNASdc about AI research and national security. https://t.co/hPg931Qe2O

— Andrew Moore (@awmcmu) June 8, 2018

What Happens if AI Doesn’t Live Up to the Hype?

Jeremy Kahn, Bloomberg | from the June 18th edition

Jeremy Kahn covers the increasing commentary in the artificial intelligence community on the limits of deep learning, including recent articles by Filip Piekniewski, Gary Marcus, and John Langford. On the one hand, the critique that deep learning is unlikely to lead to artificial general intelligence doesn’t detract from its ability to solve many smaller problems. On the other hand, lofty unmet expectations could foreshadow disillusionment and future funding problems for the field.

Machine learning will be the engine of global growth

Erik Brynjolfsson, Financial Times | from the July 30th edition

Erik Brynjolfsson, a professor at MIT and co-author of ‘The Second Machine Age’, briefly summarizes the conclusions of research predicting that machine learning will lead to “not only higher productivity growth, but also more widely shared prosperity”.

“In a [paper](https://www.nber.org/chapters/c14007.pdf) with Daniel Rock and Chad Syverson, we discuss how machine learning is an example of a “general-purpose technology”. These are innovations so profound that they trigger cascades of complementary innovations, accelerating the march of progress and growth — for example, the steam engine and electricity. When a GPT comes along, past performance is no longer a good guide to the future.”

Steps Toward Super Intelligence I, How We Got Here

Rodney Brooks, Blog | from the July 30th edition

Rodney Brooks offers a typically insightful look at past attempts at general AI and the significant problems that must be solved before general AI can be achieved.

“Some things just take a long time, and require lots of new technology, lots of time for ideas to ferment, and lots of Einstein and Weiss level contributors along the way.”

“I suspect that human level AI falls into this class. But that it is much more complex than detecting gravity waves, controlled fusion, or even chemistry, and that it will take hundreds of years.”

“Being filled with hubris about how tech (and the Valley) can do whatever they put their mind to may just not be nearly enough.”

Q&A with Yoshua Bengio on building a research lab

Graham Taylor, CIFAR | from the August 13th edition

A nice retrospective on the career trajectory of Yoshua Bengio, with a lot of useful advice on how to progress well as a PhD candidate and a professor.

Economics Nobel Prize Winner Sees No Singularity on the Horizon

Philip E. Ross, IEEE Spectrum | from the October 22nd edition

William Nordhaus, who won the Nobel Prize in economics this October, does not believe that the singularity is near. This brief article summarizes his argument: to the extent that an event like the singularity would be predictable, nothing indicates that it is about to happen.

The compute and data moats are dead

Stephen Merity, smerity.com | from the November 5th edition

A great piece arguing that large amounts of compute and data are not, in the long run, significant advantages in machine learning.

“For machine learning, history has shown compute and data advantages rarely matter in the long run. The ongoing trends indicate this will only become more true over time than less. You can still contribute to this field with limited compute and even data. It is especially true that you can get almost all the advances of the field with limited compute and data. Those limits may even be to your advantage.”

Artificial Intelligence Hits the Barrier of Meaning

Melanie Mitchell, The New York Times | from the November 19th edition

In this opinion piece, Prof. Mitchell points out that despite recent advances in fields like image recognition and machine translation, researchers have not yet solved the problem of making algorithms that understand the tasks they are solving: this is why machine translation regularly gives nonsensical results, and why many algorithms cannot cope with trivial modifications to the data they process.

“The bareheaded man needed a hat” is transcribed by my phone’s speech-recognition program as “The bear headed man needed a hat.” Google Translate renders “I put the pig in the pen” into French as “Je mets le cochon dans le stylo” (mistranslating “pen” in the sense of a writing instrument).

Explainers

NLP’s ImageNet moment has arrived

Sebastian Ruder, The Gradient | from the July 16th edition

A nice summary of exciting recent NLP research for learning better representations.

The man who invented the self-driving car (in 1986)

Janosch Delcker, Politico EU | from the July 30th edition

A nice summary of how “decades before Google, Tesla and Uber got into the self-driving car business, a team of German engineers led by a scientist named Ernst Dickmanns had developed a car that could navigate French commuter traffic on its own.”

Yann LeCun: An AI Groundbreaker Takes Stock

Insights Team, Forbes | from the July 30th edition

Forbes nicely summarized Yann LeCun’s four decades of significant contributions to the progress of AI, and in particular to neural network research.

The Great AI Paradox

Brian Bergstein, MIT Technology Review | from the October 22nd edition

This article is a level-headed summary of what machines currently can and cannot do, and which risks are more urgent than others.

That second idea [we’d better hope that machines don’t eliminate us altogether], despite being an obsession of some very knowledgeable and thoughtful people, is based on huge assumptions. If anything, it’s a diversion from taking more responsibility for the effects of today’s level of automation and dealing with the concentration of power in the technology industry.

Machine Translation. From the Cold War to Deep Learning

Vasily Zubarev, vas3k blog | from the October 22nd edition

A great explainer on machine translation, covering its history and its progression to the current state of the art.

This quirky experiment highlights AI’s biggest challenges

Rachel Metz, CNN Business | from the November 19th edition

CNN Business interviewed Janelle Shane, a scientist who has been delighting the internet by sharing the strange outputs of her neural networks trained to reproduce data like ice cream flavors, cooking recipes and Halloween costumes. The results make for a good crash course in the limitations of neural networks.

Inside the world of AI that forges beautiful art and terrifying deepfakes

Karen Hao, MIT Technology Review

Another explainer on Generative Adversarial Networks. This article includes good contextualisation, a simple but accurate explanation of how these networks work, and a discussion of some recent applications.

Favourite Tweets

"The people who take Mr. Musk’s side are philosophers, social scientists, writers — not the researchers who are working on A.I., he [Oren Etzioni] said. Among A.I. scientists, the notion that we should start worrying about superintelligence is “very much a fringe argument.”"

Yes https://t.co/RmBcZBWOOP

— Skynet Today 🤖 (@skynet_today) June 10, 2018

I agree with @math_rachel that tech companies and AI researchers who overhype their work are just as culpable as journalists for the AI Misinformation Epidemic.

Got AI expertise? Want to be part of the solution? Write for @skynet_today! More info here: https://t.co/TzB3pVFVMO https://t.co/ErVTCuFBCe

— Abigail See (@abigail_e_see) July 27, 2018

We need a Goldilocks Rule for AI:

- Too optimistic: Deep learning gives us a clear path to AGI!

- Too pessimistic: DL has limitations, thus here's the AI winter!

- Just right: DL can’t do everything, but will improve countless lives & create massive economic growth.

— Andrew Ng (@AndrewYNg) August 17, 2018

Me: Wait... @elonmusk a global influencer in #AI and #MachineLearning?

Me: Oh...

Me: Really????

Me: Since when?

Me: Terminator pics, misleading headlines, #AI dystopia, #AI hype, and now this... :-/ https://t.co/kuRzj8aYXF

— Dagmar Monett (@dmonett) September 8, 2018

If there's one thing you could change about the media and public conversation on AI, what would it be? Here's mine: stop talking about AI as if it has agency, because it doesn't — it's the people building and deploying it who do.

— Arvind Narayanan (@random_walker) October 14, 2018

Unit 365 of layer4 of a church progressive GAN draws trees. Unit 43 draws domes. Unit 14 draws grass. Unit 276 draws towers.

...and Unit 231 draws the eldritch abominations pic.twitter.com/6mVMN7tfbm

— Janelle Shane (@JanelleCShane) November 29, 2018

You: "AI powered deep fakes will herald a new era where we cannot tell what is true and false on the internet"

Me: Drew used the power of "right click" to edit the headline on a piece of mine and I've had people asking me if I know what 'hunger' is for twelve hours https://t.co/GXClzog6eo

— jongo dorts (@alexhern) November 15, 2018

Favourite Memes

— James Bradbury (@jekbradbury) July 20, 2018

Name a more iconic trio, I'll wait. pic.twitter.com/pGaLuUxQ3r

— Vicki Boykis (@vboykis) August 23, 2018

RL algorithms' performance on Atari, ranked:

16. Evaluation

15. Conditions

14. Vary

13. Across

12. Papers

11. There's

10. No

9. Single

8. Metric

7. Picking

6. A

5. Single

4. Algorithm

3. Is

2. Misleading

1. Ape-X DQN

— Miles Brundage (@Miles_Brundage) August 30, 2018

"This paper looks amazing! I will definitely read it very soon." pic.twitter.com/fLMaE35Oat

— Michael Hendricks (@MHendr1cks) October 3, 2018

nEuRal nEtWOrKs ArE moDeLs of tHe BrAIn pic.twitter.com/8cUP6lzBUf

— AI Memes for Artificially Intelligent Teens (@ai_memes) October 20, 2018

Ladies, if he:

- requires lots of supervision

- yet always wants more power

- can't explain decisions

- optimizes for the average outcome

- dismisses problems as edge cases

- forgets things catastrophically

He's not your man, he's a deep neural network.

— Alex Champandard ✈️ #NeurIPS2018 (@alexjc) November 28, 2018

It's time to stop pic.twitter.com/alZjuINyDg

— AI Memes for Artificially Intelligent Teens (@ai_memes) November 8, 2018

That’s all for this digest! If you liked this and are not yet subscribed, feel free to subscribe below!