Skynet This Week #11: top AI news from 10/8/18 - 10/22/18

Biased recruiting tools, explainable AI, AI pop music and more!

Our bi-weekly quick take on a bunch of the most important recent media stories about AI for the period 8th October 2018 - 22nd October 2018.

Advances & Business

Gill Pratt of Toyota: Safety Is No Argument for Robocars

Philip E. Ross, IEEE Spectrum

There is a clear conflict of interest in this piece, as Gill Pratt is trying to sell his own system in competition with self-driving cars. He nevertheless makes a rock-solid argument: his driver-assistance system promises as much safety as fully self-driving cars, and much sooner.

Waymo’s cars drive 10 million miles a day in a perilous virtual world

Will Knight, MIT Technology Review

A look at how Waymo tries to trip up its self-driving cars in a virtual world so that they don’t trip up in the real world. Virtual testing is seen as a vital part of validating software updates for self-driving cars.

Concerns & Hype

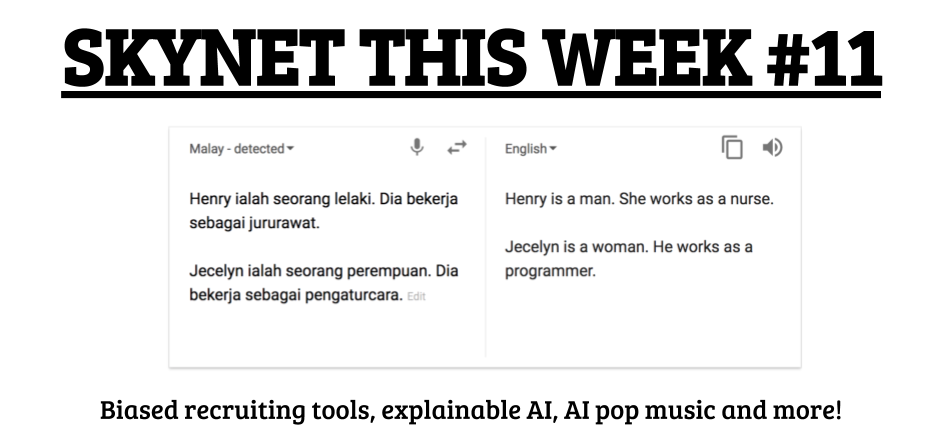

Amazon scraps secret AI recruiting tool that showed bias against women

Jeffrey Dastin, Reuters

Amazon had been developing an AI recruiting tool that rated candidates on a scale of one to five. Amazon’s researchers found that the tool was biased against women, scoring resumes containing words like “women’s” lower. Even when the tool was forced to ignore words that indicate gender, the bias persisted. Amazon eventually shut down the experiment, and a new team has been tasked with employment screening, with a focus on diversity. For a general explanation of bias in the field of AI, take a look at our recent editorial.

Amazon’s computer models were trained to vet applicants by observing patterns in resumes submitted to the company over a 10-year period. Most came from men, a reflection of male dominance across the tech industry.
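The mechanism described above, a model absorbing the patterns of a male-dominated hiring history, can be shown with a toy sketch. Amazon has not published its model, so everything here is an assumption: a hypothetical bag-of-words scorer (`learn_token_weights`, `score` and `drop_tokens` are invented names) trained on invented resumes in which the word “women’s” only appears on rejected applications. The learned weight for “women’s” comes out negative, and deleting that word before training does not help when another token (here the hypothetical “netball”) acts as a proxy for gender.

```python
import math
from collections import Counter

# Hypothetical toy "hiring history": (resume tokens, was_hired).
# Invented for illustration; this is NOT Amazon's data or model.
HISTORY = [
    (["python", "chess", "captain"], True),
    (["java", "football", "captain"], True),
    (["python", "hackathon"], True),
    (["java", "hackathon"], True),
    (["python", "women's", "chess", "captain", "netball"], False),
    (["java", "women's", "netball"], False),
]


def learn_token_weights(history, smoothing=1.0):
    """Per-token log-odds of 'hired' vs 'rejected', with additive smoothing."""
    hired, rejected = Counter(), Counter()
    for tokens, was_hired in history:
        # Count each token at most once per resume.
        (hired if was_hired else rejected).update(set(tokens))
    n_hired = sum(1 for _, was_hired in history if was_hired)
    n_rejected = len(history) - n_hired
    weights = {}
    for tok in set(hired) | set(rejected):
        p_hired = (hired[tok] + smoothing) / (n_hired + 2 * smoothing)
        p_rejected = (rejected[tok] + smoothing) / (n_rejected + 2 * smoothing)
        weights[tok] = math.log(p_hired / p_rejected)
    return weights


def score(tokens, weights):
    """Sum of learned token weights; unseen tokens contribute nothing."""
    return sum(weights.get(tok, 0.0) for tok in tokens)


def drop_tokens(history, banned):
    """Remove the banned tokens from every resume before training."""
    return [([t for t in tokens if t not in banned], y) for tokens, y in history]


weights = learn_token_weights(HISTORY)
print(round(weights["women's"], 2))  # negative: the word itself is penalized
print(score(["python", "chess", "captain"], weights)
      > score(["python", "women's", "chess", "captain"], weights))

# "Ignore gendered words": the proxy token still carries the bias.
blind = learn_token_weights(drop_tokens(HISTORY, {"women's"}))
print("women's" in blind, round(blind["netball"], 2))
```

Two resumes that differ only by the word “women’s” receive different scores, which is the pattern Reuters describes; the last line shows why simply hiding gendered words is not enough when correlated tokens remain in the training data.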

AI’s first pop album ushers in a new musical era

Melissa Avdeeff, The Conversation

The group Skygge has released a pop album using parts of tracks generated by a probabilistic model. We think it is far too early to talk about a “new era” in which machine-generated music dominates the market: algorithms and machine learning have been used to generate music before, and there is no indication yet that this will become the norm.

New App Lets You ‘Sue Anyone By Pressing a Button’

Caroline Haskins, Motherboard

Despite the alarming headline, the idea behind the machine-learning-powered app is to help people file specific lawsuits in US small claims court against abusive behavior by various big corporations. Rather than “suing anyone”, this looks like a step forward in helping people who cannot afford a lawyer win small fights against existing power structures.

Analysis & Policy

Litigating Algorithms: Challenging Government Use of Algorithmic Decision Systems

AI Now Institute, AI Now Institute*

The AI Now Institute reports on its workshop, hosted at NYU Law’s Center on Race, Inequality, and the Law, about algorithm-based decisions in criminal justice, health care, education and employment. The discussions of specific cases are especially enlightening. A must-read not only for anyone involved in decision-making, but also for those who have been confronted with algorithm-based decisions.

Surveying the perception of AI at SingularityU Czech Summit 2018

GoodAI Blog, Medium

Unlike the Pew survey covered in our previous brief, this article is about a survey that took place at a summit for proponents of the “singularity” (a hypothesised point in time when AI will surpass humans in general intelligence). Unsurprisingly, the participants were overwhelmingly positive in their view of the future of AI. The surprise lies instead in the gap in perception between the general public and people who participate in these events.

Economics Nobel Prize Winner Sees No Singularity on the Horizon

Philip E. Ross, IEEE Spectrum

William Nordhaus, who won the Nobel Prize in economics this month, does not believe that the singularity is near. This brief article summarizes his argument: to the extent that an event like the singularity would be predictable, nothing indicates that it is about to happen.

The Great AI Paradox

Brian Bergstein, MIT Technology Review

This article is a level-headed summary of what machines currently can do, what they cannot do, and which risks are more urgent than others.

That second idea [we’d better hope that machines don’t eliminate us altogether], despite being an obsession of some very knowledgeable and thoughtful people, is based on huge assumptions. If anything, it’s a diversion from taking more responsibility for the effects of today’s level of automation and dealing with the concentration of power in the technology industry.

US consortium for safe AI development welcomes Baidu as first Chinese member

James Vincent, The Verge

The Partnership on AI (PAI), a US-based consortium for the development of safe AI, has added its first Chinese member, Baidu. It will be interesting to see how companies from different parts of the world deal with the core tenets of the PAI.

“We cannot have a comprehensive and global conversation on AI development unless China has a seat at the table.”

Expert Opinions & Discussion within the field

Five Artificial Intelligence Insiders in Their Own Words

New York Times

As part of its AI special report, The New York Times spoke to five experts from different fields about AI. It offers an interesting look into how people from different backgrounds regard AI.

Interpretability and Post-Rationalization

Vincent Vanhoucke, Medium

Vincent Vanhoucke, Principal Scientist at Google, offers his take on building interpretable and explainable AI systems. He argues that evidence from neuroscience should guide how explainability is tackled in AI systems.

Explainers

The singularity isn’t here yet. Biased AI is.

Limor Gultchin, Skynet Today

Our latest editorial, summarizing what AI bias is, why we should care, and what we can do (and are doing) about it.

A new course to teach people about fairness in machine learning

Sanders Kleinfeld, Google Blog

Along with its recent Inclusive Images Kaggle competition, Google has launched a new course to help everyone better understand fairness in machine learning.

Delighted to announce Google's launch of Introduction to Fairness in Machine Learning! A collaboration between many of us, including my team. =) Also features a short video (with me =P) speaking to human biases in the ML cycle, and many other resources. https://t.co/u1fta2lrfY

— MMitchell (@mmitchell_ai) October 18, 2018

Machine Translation. From the Cold War to Deep Learning

Vasily Zubarev, vas3k blog

A great explainer of machine translation, covering its history and its progression to the current state of the art.

Awesome Videos

Artificial Intelligence needs all of us

Rachel Thomas, TEDxSanFrancisco

MIT AGI: Deep Learning (Yoshua Bengio)

Lex Fridman, MIT

Favourite Tweet

If there's one thing you could change about the media and public conversation on AI, what would it be? Here's mine: stop talking about AI as if it has agency, because it doesn't — it's the people building and deploying it who do.

— Arvind Narayanan (@random_walker) October 14, 2018

Favorite meme

nEuRal nEtWOrKs ArE moDeLs of tHe BrAIn pic.twitter.com/8cUP6lzBUf

— AI Memes for Artificially Intelligent Teens (@ai_memes) October 20, 2018

That’s all for this digest! If you liked it and are not yet subscribed, feel free to subscribe below!