Skynet This Week #14: top AI news from 11/19-12/03

Robot caregivers & hands, tinkering & art, and more!

Image credit: Photo from this tweet

Our bi-weekly quick take on a bunch of the most important recent media stories about AI for the period November 19th - December 3rd.

Advances & Business

Meet Zora, the Robot Caregiver

Adam Satariano, Elian Peltier, Dmitry Kostyukov, The New York Times

A French nursing facility is currently experimenting with Zora, a small humanoid robot, as a companion to its elderly patients. Zora is quite limited in what it can do: its movements and verbal responses are controlled by an unseen human operator. Still, the nursing home reports that many of its patients have grown emotionally attached to, and dependent on, Zora’s daily visits. With the number of people over 60 expected to more than double worldwide by 2050, the healthcare industry faces a significant labor shortage. Companies like Zora Bots and Toyota, which began trials of its Human Support Robot (HSR) in Japanese hospitals earlier this year, are trying to capitalize on this opportunity.

Robotic hands which could spell the end for fruit pickers developed by Stanford scientists

James Cook, The Telegraph

The article reports on a force-sensitive electronic glove, recently developed by Stanford researchers, and its potential to help robot manipulators become more sensitive to pressure while grasping objects such as crops. While the premise of the article is true, its title and landing picture are misleading: this technology has yet to be used on a real robot gripper, and it’s unclear what algorithmic advances are needed to leverage the extra sensory modality.

The DIY Tinkerers Harnessing the Power of Artificial Intelligence

Tom Simonite, Wired

Now that playing with neural networks is no longer limited to people with the material, financial, and human support of a big laboratory or company, self-taught coders are applying the technology to their own passions. Wired profiles the new faces of the democratisation of machine learning.

Montezuma’s Revenge can finally be laid to rest as Uber AI researchers crack the classic game

Katyanna Quach, The Register

You probably don’t remember the 1984 Atari platformer Montezuma’s Revenge, but the game’s difficulty continues to haunt AI researchers 34 years later. The game provides a fierce challenge for AI algorithms because of the delayed nature of its rewards, often requiring many intermediate steps before the player can advance. Last week, Uber announced that they’ve beaten the game using a new reinforcement learning algorithm called Go-Explore, which does not rely on the computationally expensive neural networks favored by DeepMind and OpenAI.

Concerns & Hype

AI Mistakes Bus-Side Ad for Famous CEO, Charges Her With Jaywalking

Tang Ziyi, Caixin Global

China has deployed facial recognition systems in its mass surveillance networks across many cities, and one of their popular uses is identifying jaywalkers and displaying their photos, caught in the act, on a large billboard by the intersection. This time, however, the system mistook a bus-side ad containing a person’s face for the person herself. The police department has reportedly fixed this bug. While this incident seems rather whimsical, it highlights the pervasiveness of China’s use of AI technologies in surveillance as well as the potential for unintended consequences.

Fearful of bias, Google blocks gender-based pronouns from new AI tool

Paresh Dave, Reuters

The headline is deliberately misleading, but the contents of the article are less incendiary. As part of a long-term, global effort to avoid repeating historical biases such as racism, antisemitism and sexism in its predictive tools, Google has decided to avoid suggesting pronouns in its autocomplete when the gender of the subject is unknown. Other tools from Google and other companies already avoid autocomplete suggestions in cases where abuse is likely, for example queries like “are jews…” or sentences containing brand names.

Breaking with tradition, AI research group goes radio silent

Kaveh Waddell, Axios

The Machine Intelligence Research Institute has decided to keep its research results secret from now on. The official reason is the fear of “malicious super intelligent AI”, a reason that is difficult to take seriously given the likely real consequences of that decision. Since misuse of machine learning by humans is a current, real issue (unlike “superintelligent AI”), a research organisation gatekeeping its results is anything but a path towards safety. Hiding research with potentially highly malicious uses from the rest of the world is not equivalent to doing safe research.

Google is taking it slow when it comes to the rollout of its Duplex AI

Andy Meek, BGR

After facing some backlash over its AI-powered appointment-booking service Duplex, Google is proceeding with a slow rollout of the service to Google Pixel users in a small number of cities. This seems like a sensible decision given the uncharted territory here: How will restaurant owners respond to calls from an AI chatbot? Can the service be abused by competitors? How well does Duplex handle the complexities of English speech in practice? Google, and a small number of Pixel owners, are about to find out.

Analysis & Policy

The US could regulate AI in the name of national security

Dave Gershgorn, Max de Haldevang, Quartz

In a somewhat surprising move, the Department of Commerce published a list of types of AI software that may be regulated and restricted for export due to national security concerns. The list is quite comprehensive, covering areas from neural networks to microprocessors, and the DoC offered a short 30-day window for public comments. While the national security motivation may have some merit, much of the development in AI happens in academia, where the research, often with code and data, is openly published.

Artificial Intelligence Technologies Facing Heavy Scrutiny at the USPTO

Kate Gaudry, Samuel Hayim, IPWatchdog

From 2016 to 2018, the percentage of AI-related patent applications approved by the U.S. Patent and Trademark Office (USPTO) fell by half, from ~40% to ~20%. The increased scrutiny of AI patent applications may be due to a 2016 Federal Circuit decision holding that methods merely directed to generating, collecting and analyzing information are ineligible for patent protection. This poses a potential issue for innovators in AI, as many AI systems only generate, collect and analyze information. The crucial difference with AI, however, is that these systems don’t perform those tasks in a pre-defined way; rather, their behavior is learned and can adapt to new data. Thus the article argues that it is important for the USPTO to encourage patent protection for AI, and not view these innovations through the lens of older technologies.

Microsoft sounds an alarm over facial recognition technology

Casey Newton, The Verge

Microsoft is worried about facial recognition. Although China is by far the biggest deployer of this technology, with more than 200 million surveillance cameras, the US Secret Service is already piloting its use near the White House, and more applications are sure to follow. The researchers from Microsoft and NYU point out that although the technology can do good, like [finding missing children in India](https://timesofindia.indiatimes.com/city/delhi/delhi-facial-recognition-system-helps-trace-3000-missing-children-in-4-days/articleshow/63870129.cms), it should also be regulated by the government to avoid perpetuating human biases.

Welcome to the Machine: Law, Artificial Intelligence and the Visual Arts

Louise Carron, Center for Art Law

A detailed overview of the state of AI for art generation, and what that means for copyright protections of art.

Expert Opinions & Discussion within the field

Garry Kasparov is surprisingly upbeat about our future AI overlords

Steve Ranger, ZDNet

In this interview, Kasparov lambasts AI fear-mongering and explains the view he has consistently defended throughout his life: AI is a tool, not inherently good or bad. For Kasparov, computer-assisted technology has too much to offer to be stopped, feared, or ignored.

Artificial Intelligence Technologies Facing Heavy Scrutiny at the USPTO

Kate Gaudry & Samuel Hayim, IPWatchdog

This piece examines the state of patent protection for AI innovations in the US, and ponders the future of AI and patenting.

“From a policy perspective – should artificial-intelligence innovations be eligible for patent protection? We propose that the answer is ‘yes’, provided that other statutory requirements (e.g., novelty and non-obvious) are satisfied.”

Joseph Stiglitz on artificial intelligence: ‘We’re going towards a more divided society’

Ian Sample, The Guardian

Opinions on how AI will affect the world from the Nobel laureate and former chief economist of the World Bank:

“Artificial intelligence and robotisation have the potential to increase the productivity of the economy and, in principle, that could make everybody better off,” he says. “But only if they are well managed.”

Explainers

Why machines dream of spiders with 15 legs

BBC Future, BBC

The BBC presents a gallery of images produced by a type of generative algorithm that has been much discussed in the AI community recently: Generative Adversarial Networks. The article is very superficial but might be a good introduction to the abilities and limits of these algorithms. The article below is deeper and more nuanced.

Inside the world of AI that forges beautiful art and terrifying deepfakes

Karen Hao, MIT Technology Review

Another explainer on Generative Adversarial Networks. This article includes good contextualisation and a simple but accurate explanation of how these networks work, and discusses some recent applications.

Amazon’s own ‘Machine Learning University’ now available to all developers

Dr. Matt Wood, Amazon

Amazon is sharing its internal Machine Learning (ML) courses for free with the general public. The courses contain more than 45 hours of videos and specialized labs designed for “developers, data scientists, data platform engineers, and business professionals.” Upon completion of the courses, students can receive an Amazon Web Services (AWS) Certification. As the courses’ labs are hosted on AWS’s ML services, this move may help Amazon acquire many future AWS customers, especially at a time when its competition with Microsoft Azure and Google Cloud is heating up.

Helena Sarin: Why Bigger Isn’t Always Better With GANs And AI Art

Jason Bailey, Artnome

An overview of the work of artist Helena Sarin and her views on GAN art:

“Even with the limitations imposed by not having a lot of compute and huge data sets, GAN is a great medium to explore precisely because the generative models are still imperfect and surprising when used under these constraints. Once their output becomes as predictable as the Instagram filters and BigGAN comes pre-built in Photoshop, it would be a good time to switch to a new medium.”

Awesome Videos

Montezuma’s Revenge Solved by Go-Explore (Sets Records on Pitfall too)

Uber AI Labs

The 1000 ImageNet Categories inside of BigGAN

Mario Klingemann

Favourite Tweet

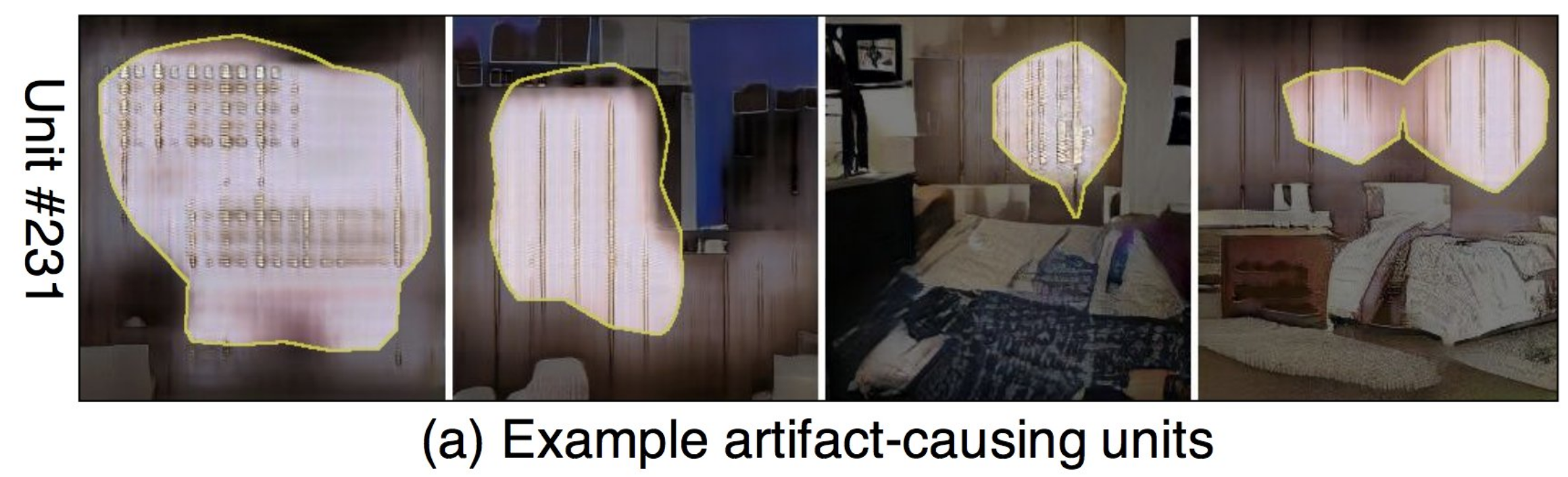

Unit 365 of layer4 of a church progressive GAN draws trees. Unit 43 draws domes. Unit 14 draws grass. Unit 276 draws towers.

...and Unit 231 draws the eldritch abominations pic.twitter.com/6mVMN7tfbm

— Janelle Shane (@JanelleCShane) November 29, 2018

Favourite Goof

Ladies, if he:

- requires lots of supervision

- yet always wants more power

- can't explain decisions

- optimizes for the average outcome

- dismisses problems as edge cases

- forgets things catastrophically

He's not your man, he's a deep neural network.

— Alex Champandard ✈️ #NeurIPS2018 (@alexjc) November 28, 2018

That’s all for this digest! If you are not subscribed and liked this, feel free to subscribe below!