‘Do You Trust This Computer?’ Gets a Whole Lot Wrong About AI Risks

A Chris Paine documentary about AI, promoted by Elon Musk, conflates real AI risks with imaginary ones

What Happened

Chris Paine, who previously directed “Revenge of the Electric Car”, recently produced a documentary on AI risks [1] titled ‘Do You Trust This Computer?’.

Prior to its release, it drew a great deal of attention when the space entrepreneur Elon Musk shared it with his 20+ million Twitter followers:

[Embedded tweet: Elon Musk (@elonmusk), April 6, 2018]

Besides sponsoring free online viewing of the documentary for several days and promoting it on Twitter, Musk appears in the film alongside Stuart Russell (co-author of Artificial Intelligence: A Modern Approach), Eric Horvitz (Director of Microsoft Research Labs), Andrew Ng (co-founder of Google Brain), Shivon Zilis (a Director of OpenAI), and a variety of AI entrepreneurs. Although the documentary covers both positive and negative potential impacts of AI, its overall message is very pessimistic: the main thesis is that the technology is quickly getting beyond our control and we should be very worried. The film even opens with a quote from Mary Shelley’s Frankenstein:

You are my creator, but I am your master…

To address these concerns, the documentary proposes two solutions:

- In the short term, AI must be regulated to manage its potential impact on the economy and its use in autonomous weapons.

- In the long term, humans must ‘merge with AI’ through some kind of brain-computer interface in order to keep control over the next phase of development: superintelligent AI.

Elon Musk, who co-founded Neuralink, a brain-computer interface company, explains further in the movie:

I think it’s incredibly important that AI not be other, it must be us. And I could be wrong about what I’m saying, I’m certainly open to ideas if anybody can suggest a path that’s better. But I think we’re either going to have to either merge with AI or be left behind.

The current fascination with AI, together with Elon Musk’s involvement in and promotion of the documentary, drew attention from both AI experts and non-experts, and the two groups reacted very differently.

The Reactions

The AI research community, already tired of unrealistic coverage of recent AI progress, was seemingly unanimous in its disapproval. Many notable experts took to Twitter to voice their opinions on the film:

- Denny Britz, a former Google Brain researcher:

[Embedded tweet: Denny Britz (@dennybritz), April 6, 2018]

- Miles Brundage, AI Policy Research Fellow at Oxford’s Future of Humanity Institute:

[Embedded tweet: Miles Brundage (@Miles_Brundage), April 6, 2018]

- Timnit Gebru, an AI researcher at Microsoft Research known for her work on AI ethics and algorithmic bias:

[Embedded tweet: Timnit Gebru (@timnitGebru), April 6, 2018]

- François Chollet, an AI researcher at Google (and lead developer of the Keras framework):

[Embedded tweet: François Chollet (@fchollet), April 6, 2018]

Coverage by journalists without expertise in AI was also critical, but at times less willing to pass conclusive judgement:

The breadth of the film sacrifices any chance of engaging with the question of artificial intelligence and its implications at anything deeper than the absolute surface level. The film mostly comes off as a montage of soundbites from leading AI researchers, who undoubtedly have profound insights to offer about AI, if only Paine had given them the space to do so in his film.

It’s also notable that while much of this important work (on AI ethics and safety) is being done by women — people like Kate Crawford of the AI Now institute and Joy Buolamwini of the Algorithmic Justice League — the cast of talking heads in Do You Trust This Computer? is overwhelmingly male. Of the 26 experts featured in the film, 23 are men.

Documentarian Chris Paine follows up “Who Killed the Electric Car?” and “Revenge of the Electric Car” with a movie far more skeptical about technological progress. “Do You Trust This Computer?” covers the major talking points about the benefits and dangers of artificial intelligence, assembling them into something engaging and alarming — if not exactly in-depth.

The contrast between AI experts’ confident disapproval and the media’s more mixed, often neutral evaluation reflects a problematic information asymmetry and highlights the need for better public understanding of AI.

Our Perspective

Although Chris Paine may have intended to inform people about the genuinely important topic of AI risks, his documentary does more harm than good by misinforming the audience in many egregious ways. Rather than objectively describing the current state of the technology, the movie promotes the unrealistic belief that superhuman AI is imminent and seeks to evoke panic and anxiety about where we are headed.

The documentary misleads and creates panic primarily in three distinct ways:

- Omission and implication: the documentary creates a sense of panic by introducing present-day AI risks without acknowledging the immense efforts already under way to address them [2]. Granted, much more remains to be done, but many have already stepped up to the challenge. Furthermore, key dialogues with Elon Musk, Stuart Russell, and others imply drastic ideas that are not remotely true and are easily debunked [3].

- Mixing fact with fiction: worse yet, the documentary conflates real and fictional AI risks. Throughout the film, we are shown dystopian scenes from The Matrix, The Terminator, and Ex Machina, which bear little resemblance to real present-day AI risks. The solutions it proposes are also often far from practical today; the idea of ‘merging with AI’ in particular is almost straight out of science fiction [4].

- Manipulative emotional appeals: the first five minutes, after the opening Frankenstein quote, feature a fast-paced montage of foreboding music and short soundbites that is clearly meant to evoke distrust and fear of AI. Similar cinematic techniques are used throughout the film to sustain this emotional effect [5].

Given these techniques, François Chollet was spot on in describing the film as ‘gratuitous fear-mongering dressed up as a documentary’. This egregious combination encourages viewers to interpret real news about AI through the utterly false belief that AI is quickly growing beyond our control, rather than simply being developed by programmers much like any other computer algorithm.

TLDR

Chris Paine and his collaborators (Elon Musk notably among them) produced a documentary on AI that conflates real risks with imaginary ones. Since AI technology, like any technology, may be used for both good and evil, it is essential to focus on the real issues of today and not be distracted by little-understood, science-fiction-inspired ideas about the future.

-

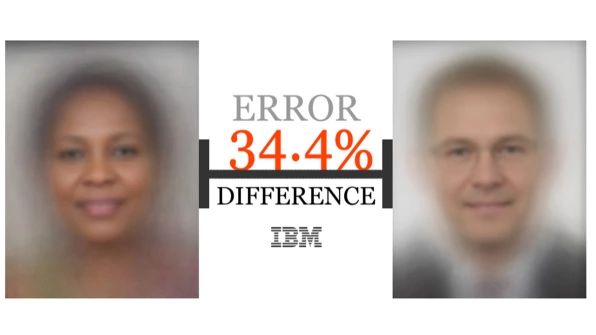

Present day real AI risks include technological unemployment, biased algorithms, unethical use of user data, algorithmic promotion of fake news, and military robots. ↩

2. The documentary leaves out numerous initiatives that are tackling many of the issues it presents. There are too many to name them all, but here are a few: fake news is being addressed by various organisations and individuals, e.g. Factmata; the General Data Protection Regulation (GDPR) is designed to protect European citizens from unfettered capitalism in the age of Big Data and is an example of how responsible regulation can mitigate the harmful effects of technology; and there is no mention of the work of Kate Crawford, Timnit Gebru, Arvind Narayanan, and many others on AI ethics and algorithmic bias.

3. To list just a few: Elon Musk’s statement that “A neural network is very close to how a simulation of the brain works”; another interviewee’s claim that “Deep Learning is totally different [from older AI techniques] - more like a toddler” (which is utterly false); the statement that “Baxter can do anything we can do with our hands” (again, utterly wrong); and even a direct implication that algorithms Google is developing for very specific applications could take control of its data centers and do anything with all its data.

4. It is by far the least scientifically mature challenge Elon Musk has proposed to tackle, involving immensely difficult open problems in digital signal processing, brain-computer interfacing, neuroscience, and AI itself. Interfacing AI algorithms with human brains has never even been attempted, so the idea is currently entirely hypothetical. Moreover, it is strange to posit that superintelligent AI may be malicious while also proposing that we go ahead and implant such AI in our brains.

5. All films and documentaries use such emotional devices, but this one relies on them far more heavily than usual. Last year’s documentary “AlphaGo” is a good example of a film that also uses cinematic techniques without being downright manipulative or distorting factual information.