Artificial Intelligence: Think Again

AI has a public relations problem, and AI researchers should do something about it

Image credit: Aliekor at English Wikipedia

The following opinion piece originally appeared in the Communications of the ACM, Vol. 60, No. 1, Pages 36-38 (https://dl.acm.org/citation.cfm?doid=3028256.2950039).

The content has been cross-posted from the public LinkedIn version with approval.

The dominant public narrative about artificial intelligence is that we are building increasingly intelligent machines that will ultimately surpass human capabilities, steal our jobs, possibly even escape human control and kill us all. This misguided perception, not widely shared by AI researchers, runs a significant risk of delaying or derailing practical applications and influencing public policy in counterproductive ways. A more appropriate framing—better supported by historical progress and current developments—is that AI is simply a natural continuation of longstanding efforts to automate tasks, dating back at least to the start of the industrial revolution. Stripping the field of its gee-whiz apocalyptic gloss makes it easier to evaluate the likely benefits and pitfalls of this important technology, not to mention dampen the self-destructive cycles of hype and disappointment that have plagued the field since its inception.

At the core of this problem is the tendency for respected public figures outside the field, and even a few within the field, to tolerate or sanction overblown press reports that herald each advance as a startling and unexpected leap toward general human-level intelligence (or beyond), fanning fears that “the robots” are coming to take over the world. Headlines often tout noteworthy engineering accomplishments in a context suggesting they constitute unwelcome assaults on human uniqueness and supremacy. If computers can trade stocks and drive cars, will they soon outperform our best salespeople, replace court judges, win Oscars and Grammys, and buy up and develop prime parcels of real estate for their own purposes? And what will “they” think of “us”?

The plain fact is there is no “they.” This is an anthropomorphic conceit born of endless Hollywood blockbusters, reinforced by the gratuitous inclusion of human-like features in public AI technology demonstrations, such as natural-sounding voices, facial expressions, and simulated displays of human emotions. Each of these techniques has valuable application to human-computer interfaces, but not when their primary effect is to fool or mislead. Attempts to dress up significant AI accomplishments with humanoid flourishes do the field a disservice by raising inappropriate questions and implying there is more there than meets the eye. Was IBM’s Watson pleased with its “Jeopardy!” win? It sure looked like it. This made for great television, but it also encouraged the audience to overinterpret the actual significance of this important achievement. Machines don’t have minds, and there is precious little evidence to suggest they ever will.

The recent wave of public successes, remarkable as they are, arises from the application of a growing collection of tools and techniques that allow us to take better advantage of advances in computing power, storage, and the wide availability of large datasets. This is certainly great computer science, but it is not evidence of progress toward a superintelligence that can outperform humans at any task it may choose to undertake. While some of the new tools, most notably in the field of machine learning, can be broadly applied to classes of tasks that may appear unrelated to the non-technical eye, in practice they often rely upon certain common attributes of the problem domains, such as enormous collections of examples in digital form. High-speed trading algorithms, tracking objects in videos, and predicting the spread of infectious diseases all rely on techniques for finding subtle patterns in noisy streams of real-time data, and so many of the tools applied to these apparently diverse tasks are similar.

We are certainly using machines to perform all sorts of real-world tasks that people perform using their native intelligence, but this does not mean the computers are intelligent. It merely means there are other ways to solve these problems. People and computers can play chess, but it is far from clear that they do it the same way. Recent advances in machine translation are remarkably successful, but they rely more on statistical correlations gleaned from large bodies of concorded texts than on fundamental advances in the understanding of natural language.

Machines have always automated tasks that previously required human effort and attention—both physical and mental—usually by employing very different techniques. And they often do these tasks better than people can, at lower cost, or both—otherwise they would not be useful. Factory automation has replaced myriad highly skilled and highly trained workers, from sheet metal workers to coffee tasters. Arithmetic problems that used to be the exclusive domain of human “calculators” are now performed by tools so inexpensive they are given away as promotional trinkets at trade shows. It used to take an army of artists to animate Cinderella’s hair, but now CGI techniques render Rapunzel’s flowing locks. These advances do not demean or challenge human capabilities; instead they liberate us to perform ever more ambitious tasks.

Some pundits warn that computers in general, and AI in particular, will lead to widespread unemployment. What will we do for a living when machines can perform nearly all of today’s jobs? A historical perspective reveals a potential flaw in this concern. The labor market constantly evolves in response to automation. Two hundred years ago, more than 90% of the U.S. labor force worked on farms. Now, barely 2% produce far more food at a fraction of the cost. Yet, everyone isn’t out of work. In fact, more people are employed today than ever before, and most would agree their jobs are far less taxing and more rewarding than the backbreaking toil of their ancestors. This is because the benefits of automation make society wealthier, which in turn generates demand for all sorts of new products and services, ultimately expanding the need for workers. Our technology continually obsoletes professions, but our economy eventually replaces them with new and different ones. It is certainly true that recent advances in AI are likely to enable the automation of many or most of today’s jobs, but there is no reason to believe the historical pattern of job creation will cease.

That’s the good news. The bad news is that technology-driven labor market transitions can take considerable time, causing serious hardships for displaced workers. And if AI accelerates the pace of automation, as many predict, this rapid transition may cause significant social disruption.

But which jobs are most at risk? To answer this question, it’s useful to observe that we don’t actually automate jobs, we automate tasks. So whether a worker will be replaced or made more productive depends on the nature of the tasks they perform. If their job involves repetitive or well-defined procedures and a clear-cut goal, then indeed their continued employment is at risk. But if it involves a variety of activities, solving novel challenges in chaotic or changing environments, or the authentic expression of human emotions, they are at far lower risk.

So what are the jobs of the future? While many people tend to think of jobs as transactional, there are plenty of professions that rely instead on building trust or rapport with other people. If your goal is to withdraw some spare cash for the weekend, an ATM is as effective as a teller. But if you want to secure an investor to help you build your new business, you won’t be pitching a machine anytime soon.

This is not to say that machines will never sense or express emotions; indeed, work on affective computing is proceeding rapidly. The question is how these capabilities will be perceived by users. If they are understood simply as aids to communication, they are likely to be broadly accepted. But if they are seen as attempts to fake sympathy or allay legitimate concerns, they are likely to foster mistrust and rejection—as anyone can attest who has waited on hold listening to a recorded loop proclaim how important their call is. No one wants a robotic priest to take their confession, or a mechanical undertaker to console them on the loss of a loved one.

Then there are the jobs that involve demonstrations of skill or convey the comforting feeling that someone is paying attention to your needs. Except as a novelty, who wants to watch a self-driving racecar, or have a mechanical bartender ask about your day while it tops up your drink? Lots of professions require these more social skills, and the demand for them is only going to grow as our disposable income increases. There’s no reason in principle we can’t become a society of well-paid professional artisans, designers, personal shoppers, performers, caregivers, online gamers, concierges, curators, and advisors of every sort. And just as many of today’s jobs did not exist even a few decades ago, it is likely a new crop of professions will arise that we can’t quite envision today.

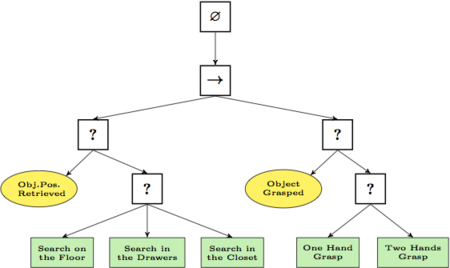

So the robots are certainly coming, but not quite in the way most people think. Concerns that they are going to obsolete us, rise up, and take over are misguided at best. Worrying about superintelligent machines distracts us from the very real obstacles we will face as increasingly capable machines become more intricately intertwined with our lives and begin to share our physical and public spaces. The difficult challenge is to ensure these machines respect our often-unstated social conventions. Should a robot be permitted to stand in line for you, put money in your parking meter to extend your time, use a crowded sidewalk to make deliveries, commit you to a purchase, enter into a contract, vote on your behalf, or take up a seat on a bus? Philosophers focus on the more obvious and serious ethical concerns (such as whether your autonomous vehicle should risk your life to save two pedestrians), but the practical questions are much broader. Most AI researchers naturally focus on solving some immediate problem, but in the coming decades a significant impediment to widespread acceptance of their work will likely be how well their systems abide by our social and cultural customs.

Science fiction is rife with stories of robots run amok, but seen from an engineering perspective, these are design problems, not the unpredictable consequences of tinkering with some presumed natural universal order. Good products, including increasingly autonomous machines and applications, don’t go haywire unless we design them poorly. If the HAL 9000 kills its crewmates to avoid being deactivated, it is because its designers failed to prioritize its goals properly.

To address these challenges, we need to develop engineering standards for increasingly autonomous systems, perhaps by borrowing concepts from other potentially hazardous fields such as civil engineering. For instance, such systems could incorporate a model of their intended theater of operation (known as a Standard Operating Environment, or SOE), and enter a well-defined “safe mode” when they drift out of bounds. We need to study how people naturally moderate their own goal-seeking behavior to accommodate the interests and rights of others. Systems should pass certification exams before deployment, the behavioral equivalent of automotive crash tests. Finally, we need a programmatic notion of basic ethics to guide actions in unanticipated circumstances. This is not to say machines have to be moral, simply that they have to behave morally in relevant situations. How do we prioritize human life, animal life, private property, self-preservation? When is it acceptable to break the law?

None of this matters when computers operate in limited, well-defined domains, but if we want AI systems to be broadly trusted and utilized, we should undertake a careful reassessment of the purpose, goals, and potential of the field, at least as it is perceived by the general public. The plain fact is that AI has a public relations problem that may work against its own interests. We need to tamp down the hyperbolic rhetoric favored by the popular press, avoid fanning the flames of public hysteria, and focus on the challenge of building civilized machines for a human world.