Skynet This Week #6: top AI news from 07/30/18 - 08/13/18

Dota defeats, DeepFakes discoveries, rising robots, and more!

Image credit: https://www.twitch.tv/videos/293517383

Our bi-weekly quick take on a bunch of the most important recent media stories about AI for the period of 07/30/18 - 08/13/18.

AI Advances & Business

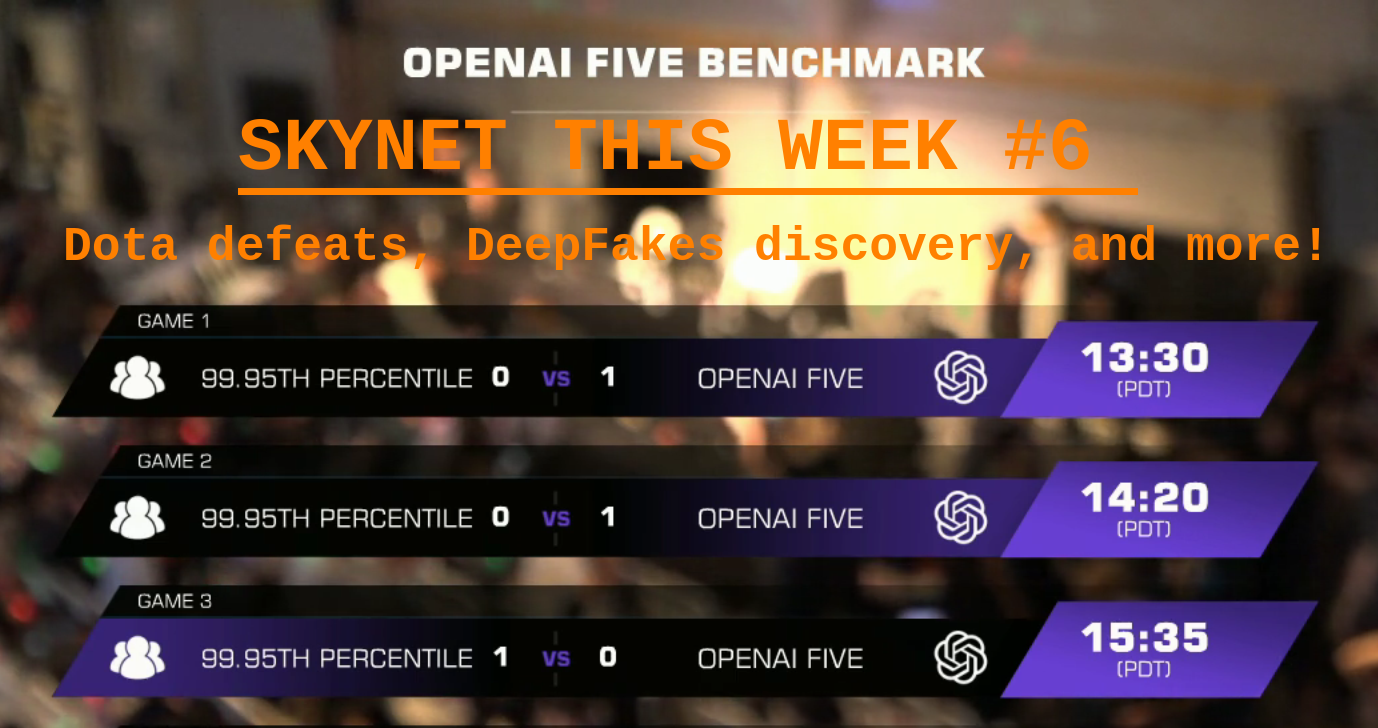

The OpenAI Dota 2 bots just defeated a team of former pros

Vlad Savov, The Verge

OpenAI has showcased impressive progress on their AI bots trained to play the complex game Dota, as nicely summarized in the above article:

“A month and a half ago, OpenAI showed off the latest iteration of its Dota 2 bots, which had matured to the point of playing and winning a full five-on-five game against human opponents. Those artificial intelligence agents learned everything by themselves, exploring and experimenting on the complex Dota playing field at a learning rate of 180 years per day. Today, though, the so-called OpenAI Five truly earned their credibility by defeating a team of four pro players and one Dota 2 commentator in a best-of-three series of games.”

OpenAI has a good, detailed write-up of the games. Just about everyone agreed that tackling a problem this complex with pure learning was an impressive achievement, though various caveats were noted only on Hacker News and Twitter:

“The AI was essentially pursuing a “death-ball” strategy of grouping as 4 or 5 and pushing right down a lane, which can be countered by picking mobile and fast heroes that can put pressure on different parts of the map and slow down the “death-ball” by forcing reactions. However, none of those heroes were draft-able, and so the humans were forced to play the AI’s strategy. The AI’s strategy favors team fight coordination (laying stuns correctly, and correctly calculating whether damage through nukes is sufficient to kill any particular target) and reaction times, at which the AI was clearly superior to the human team.”

Similarly, once the bots were taken out of their comfort zone in the showmatch, they completely broke down. You can see the brittle nature of the models as they’re pushed further outside of what they have experience with, and know how to respond to.

— mike cook (@mtrc) August 6, 2018

The Defense Department has produced the first tools for catching deepfakes

Will Knight, MIT Tech Review

As we’ve previously covered, DeepFakes is a controversial application that creates videos with one person’s face substituted for another’s. A project funded by the Defense Department has produced the first tools for catching these fakes, which rely on surprisingly simple techniques like watching eye movements.

Artificial Intelligence Continues Its Fundraising Tear In 2018

Mary Ann Azevedo, Crunchbase

A nicely brief look at four promising companies selling AI-powered products (Drishti, Alloy, Avaamo, SparkCognition) that have recently raised fresh funding. It seems no AI winter is in sight:

“Funding in the AI sector has climbed steadily—and impressively—over the past decade. In 2017, investors pumped nearly $5 billion in U.S.-based AI startups. And trends point to 2018 being another great year for venture investing in AI and machine learning startups.”

AI Concerns & Policy

Strategic Competition in an Era of Artificial Intelligence

Michael Horowitz, Elsa B. Kania, Gregory C. Allen and Paul Scharre, Center for a New American Security

Andrew Ng received some flak for saying that AI is the “new electricity,” but the Center for a New American Security treats this idea seriously with a detailed exploration of past industrial revolutions. We are personally not totally sold on the idea, but it’s very much worth the read.

JAIC: Pentagon debuts artificial intelligence hub

Jade Leung, Sophie-Charlotte Fischer, Bulletin of the Atomic Scientists

The US Defense Department is centralizing its AI efforts with the new Joint Artificial Intelligence Center (JAIC). The mission admirably includes “ethics, humanitarian considerations, and both short- and long-term AI safety.” The move comes late, and it is unclear whether it will tilt the balance in AI research between private companies, academia, and government. However, with tech companies resisting defense projects, the government may have to go it alone.

AI for cybersecurity is a hot new thing—and a dangerous gamble

Martin Giles, MIT Tech Review

AI is spreading to the world of cybersecurity, and some people are worried about the consequences. The concerns raised by the MIT Tech Review, including adversarial examples and explainability, also apply to other fields.

AI Overviews

AI bots are getting better than elite gamers

Oscar Schwartz, The Outline

The Outline has an accessible piece on OpenAI’s Dota victories. It does a great job positioning the achievement relative to others like IBM’s Watson and DeepMind’s AlphaGo.

Artificial intelligence and the rise of the robots in China

Gordon Watts, Asia Times

Asia Times has a fascinating article about the adoption of robots in China, including a robotic teaching assistant for preschools and a package delivery robot. This complements a recent piece by the New York Times showing that China may be diving head first into consumer robotics.

For AI, a real-world reality check

Robert Ito, Google Blog

A nice summary of ongoing efforts to increase diversity and inclusiveness within AI and computer science by programs such as AI4ALL, Code Next, DIY Girls, Project Include and Black Girls Code. It also nicely summarizes the need for such efforts within the context of AI:

“With AI becoming ever more ubiquitous, the need for gender balance in the field grows beyond just the rightness of the cause—diversity is a crucial piece of AI due to the nature of machine learning. A goal of AI is to prod machines to complete tasks that humans do naturally: recognize speech, make decisions, tell the difference between a burrito and an enchilada. To do this, machines are fed vast amounts of information—often millions of words or conversations or images—just as all of us absorb information, every waking moment, from birth (in essence, this is machine learning). The more cars a machine sees, the more adept it is at identifying them. But if those data sets are limited or biased (if researchers don’t include, say, images of Trabants), or if the folks in AI don’t see or account for those limits or biases (maybe they’re not connoisseurs of obscure East German automobiles), the machines and the output will be flawed. It’s already happening. In one case, image recognition software identified photographs of Asian people as blinking.”

Expert Opinions & Discussion within the field

You Cannot Serve Two Masters: The Harms of Dual Affiliation

Ben Recht, David A. Forsyth, and Alexei Efros, argmin blog

An opinion piece arguing that “dual affiliation” – the practice of professors being paid to spend 80% of their time working in industry and only 20% in their university and lab – is ultimately harmful to academic research.

Q&A with Yoshua Bengio on building a research lab

Graham Taylor, CIFAR

A nice retrospective on the career trajectory of Yoshua Bengio, with a lot of useful advice on how to progress well as a PhD candidate and a professor.

Explainers

When Recurrent Models Don’t Need to be Recurrent

John Miller, BAIR Blog (reposted from Off the Convex Path)

A nice overview of the recent trend towards implementing models that traditionally use Recurrent Neural Nets without the recurrent bit.

OpenAI & DOTA 2: Game Is Hard

Michael Cook, Games by Angelina

A great summary of the details of the OpenAI Five match, a dissection of the bots’ performance, and a look at how we should think about the upcoming match at The International:

“In a week or two, OpenAI will walk onto a similar stage at this year’s International, and play a team of world-class professionals. Like the Alliance, they’re coming off the back of a run of spectacular successes, and like the Alliance, they seem to only have one very powerful strategy. I don’t know whether a human team will be able to upset the bots enough to give us as dramatic a series as Alliance had in 2013, but I’m fairly sure that regardless of the outcome, it’s a series that will be remembered as incredibly significant in its own right.”

“A lot of people still joke that the Alliance didn’t really play DOTA 2 – they won because the current state of the game favoured them, because of the way certain mechanics worked at the time, or because they played a particularly reviled style of DOTA that teams found hard to deal with. The same could be said of OpenAI’s bots – we’ve seen clear evidence of their weaknesses, we’ve seen them make mistakes, we’ve seen them act strangely when they’re pushed out of their comfort zone. But ultimately, a win is a win, and while OpenAI might not have a perfect understanding of every aspect of DOTA 2, whatever understanding it does have has created an extremely formidable team.”

Inside an AI Conference - Robotics Science and Systems

Andrey Kurenkov, Skynet Today

A fun summary of the

Awesome Videos

An AI Researcher’s Life: the RSS Conference

Andrey Kurenkov, YouTube

DeepDribble: Simulating Basketball with AI

DeepMotion, YouTube

Favorite Tweet

my meditation regime - stare at visdom ui and breath in sync with the loss function#om pic.twitter.com/tCAbB9EHKO

— helena sarin (@glagolista) August 5, 2018

Favorite Meme

I wanted to do be productive by programming today, but alas duty calls - watching the #openai5 match instead. pic.twitter.com/GHh2S7NQ5b

— Andrey Kurenkov 🤖 (@andrey_kurenkov) August 5, 2018

That’s all for this digest! If you are not subscribed and liked this, feel free to subscribe below!