Deepfakes - Is Seeing Still Believing?

Has widespread misuse of AI arrived? Not quite yet...

This is the first high-profile example of state-of-the-art AI techniques causing large-scale harm, and it is likely far from the last. But it may not be as bad as it seems.

What Happened

In late September 2017, a Reddit user by the name of “deepfakes” appeared. By February 2018, this user’s activity had been covered by Forbes, the BBC, the NYT, and a multitude of tech news sources.

The cause of this coverage? Deepfakes, as the name implies, used deep neural networks to generate fake (but, at a glance, convincing) pornographic images and videos in which the faces of the pornstars were swapped with the faces of various celebrities.

The images and videos look highly realistic. Other people, like one named “derpfakes”, used the same methods for more amusing results, such as pasting Angela Merkel’s face onto Donald Trump or sticking Nicolas Cage into every film role ever.

It’s important to distinguish the research from the application in this case. The original deepfakes code appears to be based on a simplified version of this paper from NVIDIA. However, it doesn’t use Generative Adversarial Networks (GANs), instead relying on a dual autoencoder approach.1 More recent versions of the deepfakes code do take advantage of GANs.2 Of course, the methods outlined in these papers were not developed with these uses in mind, but the availability of papers and code does enable such misuses.
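To make the dual autoencoder idea (footnote 1) concrete, here is a minimal sketch in plain Python. It is not the deepfakes code: the "faces" are toy 4-number vectors, the models are single linear layers, and every name (`train_face_swap`, `swap_a_to_b`, `u_a`, `u_b`) is hypothetical. It only illustrates the wiring: one shared encoder, one decoder per identity, and a swap that routes A's input through B's decoder.

```python
import math
import random


def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector (list of floats)."""
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]


def cosine(u, v):
    """Cosine similarity between two vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def train_step(encoder, decoder, x, lr):
    """One SGD step on the loss 0.5 * ||decoder(encoder(x)) - x||^2."""
    z = matvec(encoder, x)   # compress
    y = matvec(decoder, z)   # decompress
    residual = [yi - xi for yi, xi in zip(y, x)]
    # Gradient w.r.t. the latent code, computed before the decoder is updated.
    grad_z = [sum(decoder[i][j] * residual[i] for i in range(len(residual)))
              for j in range(len(z))]
    for i, row in enumerate(decoder):
        for j in range(len(z)):
            row[j] -= lr * residual[i] * z[j]
    for j, row in enumerate(encoder):
        for k in range(len(x)):
            row[k] -= lr * grad_z[j] * x[k]
    return 0.5 * sum(r * r for r in residual)


def train_face_swap(u_a, u_b, steps=4000, lr=0.05, seed=0):
    """Train one shared encoder and two decoders on toy 'faces' t * u_a and t * u_b."""
    rng = random.Random(seed)
    dim = len(u_a)
    encoder = [[rng.uniform(-0.5, 0.5) for _ in range(dim)]]    # shared, 1 x dim
    decoder_a = [[rng.uniform(-0.5, 0.5)] for _ in range(dim)]  # identity A, dim x 1
    decoder_b = [[rng.uniform(-0.5, 0.5)] for _ in range(dim)]  # identity B, dim x 1
    losses = []
    for step in range(steps):
        t = rng.uniform(-1.0, 1.0)  # toy stand-in for pose or expression
        if step % 2 == 0:
            losses.append(train_step(encoder, decoder_a, [t * c for c in u_a], lr))
        else:
            losses.append(train_step(encoder, decoder_b, [t * c for c in u_b], lr))
    return encoder, decoder_a, decoder_b, losses


def swap_a_to_b(encoder, decoder_b, x_a):
    """The face swap: encode a face of A, then decode it with B's decoder."""
    return matvec(decoder_b, matvec(encoder, x_a))


if __name__ == "__main__":
    u_a = [1.0, 0.5, 0.0, 0.2]  # made-up "appearance" of identity A
    u_b = [0.2, 0.0, 1.0, 0.5]  # made-up "appearance" of identity B
    enc, dec_a, dec_b, losses = train_face_swap(u_a, u_b)
    fake = swap_a_to_b(enc, dec_b, [0.8 * c for c in u_a])
    print("similarity of swapped output to B:", abs(cosine(fake, u_b)))
```

In this toy setup the swapped output points along B's appearance vector, but nothing guarantees that its scale (the stand-in for pose) matches the input. Real systems use deep convolutional networks and large image sets to get a latent space that lines up well enough to be convincing.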

The Reactions

Vice’s Motherboard broke the story of deepfakes in December with a headline reading “We’re All Fucked”. It focused on a worrying video where the face of Gal Gadot, star of DC’s Wonder Woman, was superimposed onto the body of a pornstar.

It took another month for the coverage to really explode, though. Motherboard again broke the story, this time that deepfakes had been made accessible through an app called “FakeApp”; the headline read “We are Truly Fucked, Everyone Is Making AI-Generated Fake Porn Now”. While the creation of deepfakes content was previously limited to programmers and experts, FakeApp heavily automates the process and so allows laypeople to create face-swapped videos with relative ease.

In that second article, Vice says:

It isn’t difficult to imagine an amateur programmer running their own algorithm to create a sex tape of someone they want to harass.

Lawfare goes further, saying:

The spread of deep fakes will threaten to erode the trust necessary for democracy to function effectively

Other sources added their own opinions, with The Verge, Forbes, The Outline, and the BBC describing ways that deepfakes could lead to revenge porn, blackmail, and worsening fake news.

What about blackmail? Find a girl with suitably conservative and perhaps deeply religious parents and fake her into a porn video.

The fake news crisis, as we know it today, may only just be the beginning.

the “nightmare situation of somebody creating a video of Trump saying ‘I’ve launched nuclear weapons against North Korea,’

In the wake of this coverage, on February 7, Reddit updated its rules about involuntary pornography, outlawing “any person in a state of nudity […] posted without their permission, including depictions that have been faked”. This caused discussion across Reddit and Hacker News about questions of everything from ethics to speech to intellectual property law. Those changes seem to have served their purpose: deepfakes appears to have left the spotlight, at least for now.

Our Perspective

So how bad is this - are we all fucked, really?

It’s not good, and for a change the media coverage of this story is largely solid. Still, there are a few places where non-technical journalists get carried away and overestimate what the tools are capable of now.

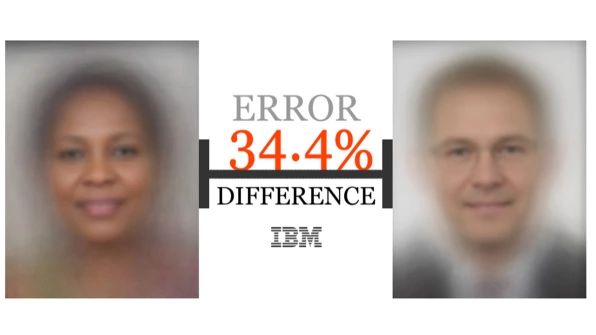

While the ability of generative tools to create realistic fakes has vastly improved over the past five or so years, they aren’t all the way there yet: current tools either produce results that are relatively easy to spot as fake or cannot produce the kinds of images deepfakes demands.

4 years of GAN progress (source: https://t.co/hlxW3NnTJP ) pic.twitter.com/kmK5zikayV

— Ian Goodfellow (@goodfellow_ian) March 3, 2018

What’s more, state of the art methods for video generation rely on a paired set of models, one that generates fakes, and one that recognizes fakes (the aforementioned “GAN”). If GANs continue to be the state of the art in fake generation, we know that it is at least possible for other ML systems to differentiate between fake and real images.3 This will continue to be true even if it becomes impossible for expert humans to distinguish the fakes from real video; and at least for now, deepfakes are straightforward to spot.
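The generator and discriminator pairing described above can be sketched in a few lines of plain Python. This is a toy, not a video model: the "real data" are numbers drawn around 4.0, the generator is `a * z + b`, the discriminator is a one-feature logistic classifier, and all names and constants (`d_step`, `g_step`, `train_gan`, the learning rate) are assumptions made for illustration. The generator uses the common non-saturating loss, which is one of several possible choices.

```python
import math
import random


def sigmoid(v):
    """Numerically stable logistic function."""
    if v >= 0:
        return 1.0 / (1.0 + math.exp(-v))
    e = math.exp(v)
    return e / (1.0 + e)


def softplus(v):
    """Stable log(1 + exp(v)); note that -log(sigmoid(s)) == softplus(-s)."""
    return max(v, 0.0) + math.log1p(math.exp(-abs(v)))


def d_loss(w, c, real, fake):
    """Discriminator loss: score real samples as 1 and generated samples as 0."""
    real_term = sum(softplus(-(w * x + c)) for x in real) / len(real)
    fake_term = sum(softplus(w * x + c) for x in fake) / len(fake)
    return real_term + fake_term


def g_loss(a, b, w, c, noise):
    """Generator loss: make the discriminator score the fakes as real."""
    return sum(softplus(-(w * (a * z + b) + c)) for z in noise) / len(noise)


def d_step(w, c, real, fake, lr):
    """One gradient-descent step on d_loss with respect to (w, c)."""
    gw = gc = 0.0
    for x in real:
        p = sigmoid(w * x + c)
        gw -= (1.0 - p) * x / len(real)
        gc -= (1.0 - p) / len(real)
    for x in fake:
        p = sigmoid(w * x + c)
        gw += p * x / len(fake)
        gc += p / len(fake)
    return w - lr * gw, c - lr * gc


def g_step(a, b, w, c, noise, lr):
    """One gradient-descent step on g_loss with respect to (a, b)."""
    ga = gb = 0.0
    for z in noise:
        p = sigmoid(w * (a * z + b) + c)
        ga -= (1.0 - p) * w * z / len(noise)
        gb -= (1.0 - p) * w / len(noise)
    return a - lr * ga, b - lr * gb


def train_gan(steps=500, batch=64, lr=0.05, seed=0):
    """Alternate discriminator and generator updates on fresh batches."""
    rng = random.Random(seed)
    a, b = 1.0, 0.0   # generator parameters
    w, c = 0.1, 0.0   # discriminator parameters
    for _ in range(steps):
        real = [rng.gauss(4.0, 1.0) for _ in range(batch)]
        noise = [rng.gauss(0.0, 1.0) for _ in range(batch)]
        fake = [a * z + b for z in noise]
        w, c = d_step(w, c, real, fake, lr)
        a, b = g_step(a, b, w, c, noise, lr)
    return a, b, w, c


if __name__ == "__main__":
    print(train_gan())
```

The trained `(w, c)` pair is exactly the kind of fake detector the paragraph above refers to. GAN training is known to oscillate, so this sketch makes no claim about where the parameters end up.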

Many of the articles also suggest faked revenge porn or blackmail as possible outcomes. While that shouldn’t be wholly discounted, that specific application is not as easy as claimed. Deepfakes, like most machine learning models, requires a lot of data. Making a fake requires a significant number of good-quality images of a person. For celebrities and politicians, these are easy to come by. But for a use case like personal blackmail, it’s less likely that there will be a lot of good images or videos available.4

A few examples: it took thousands of professional-quality headshots for a NYT reporter to get decent results with FakeApp, and nearly one thousand for Sven Charlier, an HCI researcher, to get reasonable results at a relatively low resolution. That implies that, at least for the foreseeable future, most deepfaked blackmail will be low resolution and grainy. Perhaps more importantly, because of these limitations, right now it is possible to detect many celebrity deepfakes since they use existing video and celebrity photos.

Another important thing to note about how deepfakes may affect fake news is that there are already many ways to create convincing fake videos. One exceptionally silly example from Lawfare was that deepfakes could be used to “falsely depict a white police officer shooting an unarmed black man while shouting racial epithets”. Creating such a video is already possible, no machine learning necessary.

These corrections aside, though, the coverage has for the most part been correct, and this is indeed a worrying turn in the Deep Learning-initiated AI boom we are still in the middle of.

TLDR

Pornography generated with AI techniques is certainly an ethical concern, and bringing it to light is important. However, media outlets probably overreach when they suggest that you might be next.

Josh is currently employed by Google, but these opinions are his own.

1. In short, this approach works by taking two autoencoders and forcing them to share the same “latent space”. In other words, you train one autoencoder to compress and then decompress your face, and another to compress and then decompress a celebrity’s face. By sharing the encoder between both models, you make sure that the compressed representations are similar, so you can attach the celebrity decoder to the shared encoder, pass in your face, and get out a celebrity face.

2. CycleGAN and pix2pix are two examples of GAN models modified for faceswapping. In general, GANs work by training a pair of models, a discriminator and a generator. The generator tries to trick the discriminator, and the discriminator in turn tries to detect the fakes produced by the generator.

3. Since a GAN works by pairing a discriminator and a generator, a good generator can only exist alongside an equally good discriminator. The problem in practice is that there is no reason for a deepfake creator to publish the discriminator used as part of training.

4. Machine learning with a small amount of training data is an active area of research known as “one-shot learning”. Current one-shot learning techniques can’t generate the types of images that are required for convincing deepfakes.