Skynet This Week #13: top AI news from 11/05/18-11/19/18

Investments & projections, Believing & Trusting, and more!

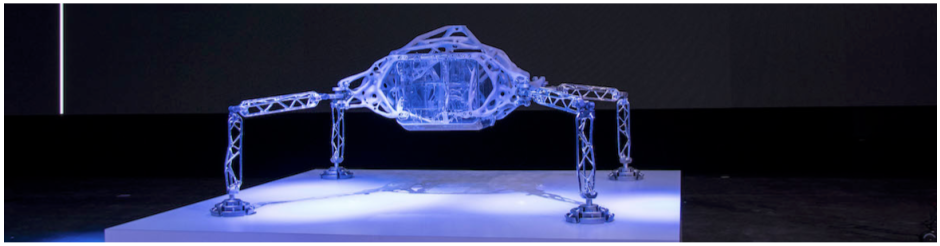

Image credit: Photo from Autodesk’s blog post

Our bi-weekly quick take on a bunch of the most important recent media stories about AI for the period November 5th 2018 to November 19th 2018.

Advances & Business

Reduced holiday temp hiring is a sign Amazon is turning to more automation and robots

Tyler Clifford, CNBC

Amazon is hiring 100,000 temporary workers this holiday season, 20,000 fewer than last year. The decrease may be due to Amazon’s increasing use of robots in its fulfillment centers. As the article notes, however, Amazon has also hired more full-time fulfillment center employees this year. It is therefore unclear how much of the drop in temp workers is actually due to automation, and how much might come from other factors such as Amazon’s new $15-per-hour minimum wage.

Germany plans 3 billion in AI investment: government paper

Holger Hansen, Reuters

Germany is planning to invest 3 billion euros in AI R&D through 2025, as outlined in a new “AI made in Germany” strategy paper. Governments around the world are paying more attention to the development of AI, noting its broad applications across industry, healthcare, and the military. While the U.S. has yet to draft a national AI strategy, China released its own in 2017 and France followed in early 2018. An AI strategy is especially urgent for Germany because its car industry, an important engine of German economic growth, is falling behind its counterparts in the U.S. and China in developing self-driving (and electric) vehicles.

AI software helped NASA dream up this spider-like interplanetary lander

Loren Grush, The Verge

A refreshingly non-neural-net-based story about the uses of AI. NASA’s Jet Propulsion Laboratory (JPL) partnered with Autodesk after the latter showcased an impressive technique for designing lighter spacecraft:

“To make the lander, Autodesk used its own artificial intelligence software, which can develop hundreds of different designs in short periods of time. Known as generative design, it’s a technique that allows engineers to come up with computer-generated concepts for a project by inputting a set of constraints that the software must adhere to. For the lander, Autodesk and JPL input the types of temperatures and forces a lander might experience when traveling through deep space. They also input variables like the kinds of materials that the software should experiment with, such as titanium and aluminum. And they asked the software to explore different types of manufacturing methods, including casting and 3D printing.”

Concerns & Hype

In the Age of A.I., Is Seeing Still Believing?

Joshua Rothman, The New Yorker

Image forgery has a long history. Will algorithmically assisted fakes change anything about our media landscape? The New Yorker delivers a level-headed analysis of what will probably change, for better and for worse.

Google ‘betrays patient trust’ with DeepMind Health move

Alex Hern, The Guardian

When DeepMind began its work with the UK’s National Health Service (NHS) in 2017, gaining access to the NHS records of 1.6 million patients, it pledged not to share this data with Google, its sibling company under Alphabet. The recent restructuring, which moves Streams, DeepMind’s flagship health app, under Google, calls this pledge into question and rightly raises concerns about patient privacy and about other Google products gaining inappropriate access to the data. For now, however, Google insists that all data access guidelines and restrictions remain the same, and that the restructuring is only meant to help scale Streams globally beyond the UK.

China’s state-run press agency has created an ‘AI anchor’ to read the news

James Vincent, The Verge

Following recent and controversial developments in machine-generated human voices and facial expressions (see DeepFakes), Xinhua, China’s state-run media outlet, has deployed an “AI” news anchor to read the news. The anchor isn’t actually an “AI” but rather a synthesized video of a real-life anchor reading scripts written by humans. While the “AI” anchor is not completely realistic and is easily recognized as fake, the spread of this technology in Chinese media may be disconcerting to many, given the country’s record of press censorship.

China’s brightest children are being recruited to develop AI ‘killer bots’

Stephen Chen, South China Morning Post

“The 27 boys and four girls, all aged 18 and under, were selected for the four-year “experimental programme for intelligent weapons systems” at the Beijing Institute of Technology (BIT) from more than 5,000 candidates, the school said on its website.”

Chinese high school graduates have been selected for a highly competitive college program to work on AI-enabled weaponry. This continues the trend of AI expertise becoming relevant to the national defense strategies of major countries around the world.

Analysis & Policy

Should a self-driving car kill the baby or the grandma? Depends on where you’re from.

Karen Hao, MIT Technology Review

The Trolley Problem has been discussed in philosophy and ethics classes for years. Now that AI agents, as in the case of self-driving cars, are the ones making these decisions for us, the answers matter even more. Researchers at the MIT Media Lab designed an experiment that presents various versions of these dilemmas in a game-like interface called the Moral Machine, which went viral. Analyzing the responses, they observed that preferences vary widely by country and correlate strongly with culture and economics.

Surprisingly, the sheer number of people was not the only dominant factor in choosing which group should be spared. The choices of participants from individualistic countries differ greatly from those of participants from collectivist countries. The researchers discuss various possible biases and argue that we need to debate these issues now more than ever.

The authors of the study emphasized that the results are not meant to dictate how different countries should act. In fact, in some cases, the authors felt that technologists and policymakers should override collective public opinion. Rather, industry and government should use the results as a foundation for understanding how the public would react to the ethics of different design and policy decisions.

Facial recognition technology: The need for public regulation and corporate responsibility

Brad Smith, Microsoft Blog

The article highlights how artificial intelligence has become embedded in our professional and personal lives, and presents both its possible positive and negative implications. One such technology is facial recognition, which on one hand could help locate a missing child and on the other could invade the privacy of millions of people by tracking them at all times. Smith discusses the role of government in regulating these technologies and the responsibility of the tech sector to work on mitigating bias and developing ethical products.

The Impact of Artificial Intelligence on the World Economy

Irving Wladawsky-Berger, The Wall Street Journal

“Artificial intelligence has the potential to incrementally add 16 percent or around $13 trillion by 2030 to current global economic output– an annual average contribution to productivity growth of about 1.2 percent between now and 2030, according to a September, 2018 report by the McKinsey Global Institute on the impact of AI on the world economy.”

A nice summary of recent projections about AI’s worldwide economic impact over the next decade. The summary highlights key takeaways, the biggest of which are that AI will have a relatively large impact on the world economy, but that it may also widen inequality between those with a head start and those who do not yet have much expertise in AI:

“The economic impact of AI is likely to be large, comparing well with other general-purpose technologies in history,” notes the report in conclusion. “At the same time, there is a risk that a widening AI divide could open up between those who move quickly to embrace these technologies and those who do not adopt them, and between workers who have the skills that match demand in the AI era and those who don’t. The benefits of AI are likely to be distributed unequally, and if the development and deployment of these technologies are not handled effectively, inequality could deepen, fueling conflict within societies.”
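The two McKinsey figures quoted above (about 16 percent cumulative output by 2030, and about 1.2 percent per year) can be roughly reconciled with simple compound growth. The short Python sketch below is our own back-of-the-envelope check, not McKinsey’s methodology: it assumes, as a simplification, that the ~1.2% annual contribution compounds every year from 2018 to 2030.

```python
def cumulative_gain(annual_rate: float, years: int) -> float:
    """Return the cumulative fractional gain from compounding an annual rate."""
    return (1.0 + annual_rate) ** years - 1.0

# Assumption (ours, not the report's): ~1.2% compounds each year from 2018 to 2030.
years = 2030 - 2018
gain = cumulative_gain(0.012, years)
print(f"{years} years at 1.2%/yr -> {gain:.1%} cumulative")
```

Under that assumption the script prints a cumulative gain of about 15.4%, in the same ballpark as the report’s 16% headline figure; the small gap reflects our simplified start year and compounding assumptions.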

Expert Opinions & Discussions

Artificial Intelligence Hits the Barrier of Meaning

Melanie Mitchell, The New York Times

In this opinion piece, Prof. Mitchell points out that despite recent advances in fields like image recognition and machine translation, researchers have not yet figured out how to make algorithms that understand the tasks they are solving. This is why machine translation regularly produces nonsensical results, and why many algorithms cannot cope with trivial modifications to the data they process.

“The bareheaded man needed a hat” is transcribed by my phone’s speech-recognition program as “The bear headed man needed a hat.” Google Translate renders “I put the pig in the pen” into French as “Je mets le cochon dans le stylo” (mistranslating “pen” in the sense of a writing instrument).

EMNLP 2018 Highlights: Inductive bias, cross-lingual learning, and more

Sebastian Ruder, AYLIEN blog

Sebastian Ruder succinctly presents the main trends and most interesting research published at this year’s EMNLP, one of the most prominent conferences in natural language processing. He groups these trends into inductive bias, cross-lingual learning, word embeddings, latent variable models, language models, datasets, and miscellaneous, and shares insight into why these papers stand out. A must-read for anyone looking to catch up on the latest NLP research.

Explainers

This quirky experiment highlights AI’s biggest challenges

Rachel Metz, CNN Business

CNN Business interviewed Janelle Shane, a scientist who has been delighting the internet by sharing the strange outputs of her neural networks trained to reproduce data like ice cream flavors, cooking recipes, and Halloween costumes. The results make for a good crash course in the limitations of neural networks.

Applying Deep Learning To Airbnb Search

Malay Haldar, Airbnb Engineering & Data Science

An introduction to the problems tackled in Airbnb’s recent arXiv paper, which describes how the team applied deep learning to Airbnb search.

Spinning Up in Deep RL

OpenAI

Not quite a full curriculum, but a nice set of resources for people interested in teaching themselves the key concepts in Reinforcement Learning research.

Favourite Tweet

You: "AI powered deep fakes will herald a new era where we cannot tell what is true and false on the internet"

Me: Drew used the power of "right click" to edit the headline on a piece of mine and I've had people asking me if I know what 'hunger' is for twelve hours https://t.co/GXClzog6eo

— jongo dorts (@alexhern) November 15, 2018

Favorite goof

It's time to stop pic.twitter.com/alZjuINyDg

— AI Memes for Artificially Intelligent Teens (@ai_memes) November 8, 2018

That’s all for this digest! If you are not subscribed and liked this, feel free to subscribe below!