Biased Facial Recognition - a Problem of Data and Diversity

Flashy headlines often hijack meaningful and important conversations on this topic, even when the articles are solid - as was the case here

A great example of how misleading and click-baity article titles can cause hype and often diminish well-researched articles.

What Happened

Joy Buolamwini, a Rhodes scholar at MIT Media labs found that facial recognition systems 1 did not work as well for her as it did for others. The models just did not seem to recognize her compared to her fairer skinned friends. This led her to investigate facial recognition systems in the context of people of color. In her recently published paper and project - “Gender shades”, she evaluated commercially available gender identification systems from Microsoft, IBM, and the chinese company Mobvii.

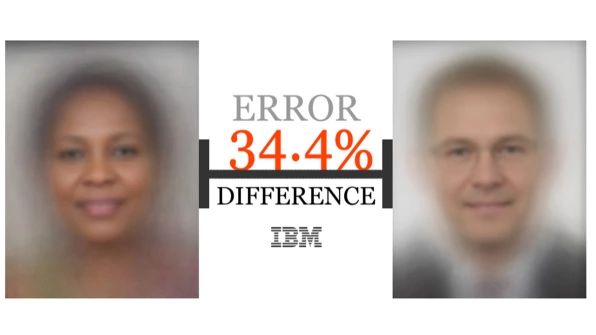

The results showed that all three systems performed poorly for people of color compared to fairer skinned subjects. To make things worse, the systems performed abysmally for darker-skinned women, failing to correctly identify them up to 34% of the time. Looking for what might be the cause of this performance disparity led Joy to examine two commonly used datasets 2 of faces. She found both datasets to be composed of a higher number of lighter skinned subjects that led to an imbalance 3 in the datasets, which correspondingly caused the disparity in the systems’ performance. To help correct the lack of balanced datasets she has put together a more ethnically-diverse one for the community to use.

The Reactions

The media reacted to the results of the paper with headlines like “Photo algorithms ID white men fine—black women, not so much” (WIRED) and “Facial Recognition Is Accurate, if You’re a White Guy” (NYT).

- Contrary to the Wired headline’s explicit claim the algorithms themselves are biased, the article itself rightfully explains that

The skewed accuracy appears to be due to underrepresentation of darker skin tones in the training data used to create the face-analysis algorithms.

- The Verge put it even more strongly, saying

The algorithms aren’t intentionally biased, but more research supports the notion that a lot more work needs to be done to limit these biases.

- And the Atlantic likewise correctly points out that

An algorithm trained exclusively on either African American or Caucasian faces recognized members of the race in its training set more readily than members of any other race.

Microsoft and IBM were both given access to Joy Buolamwini’s research and the newly created dataset. Microsoft’s claimed to have already taken steps to improve the accuracy of the facial recognition technology. IBM went a step further and published a white paper replicating the results from the paper and showing the improvements in the systems after re-training on a balanced dataset.

.@jovialjoy sent a pre-print of their paper to the companies after #FAT2018 acceptance. Face++ didn't respond. MS sent a response. IBM had the best response -- replicated the paper internally and released a new API yesterday. Wow. New API classified darker females at 96.5%

— Alex Hanna, Data Witch (@alexhanna) February 24, 2018

While most of the NYT article focused on the research itself, it closed with this great line

“Technology”, Ms. Buolamwini said, “should be more attuned to the people who use it and the people it’s used on. You can’t have ethical A.I. that’s not inclusive,” she said. “And whoever is creating the technology is setting the standards.”

As this line suggests, the issue is more nuanced than just collecting better data; the larger and more complicated underlying problem is that of lacking diversity and inclusivity within the tech industry.

In facial analysis, "supremely white data" gave a false sense of technical progress by leaving out the majority of the world --@jovialjoy #FAT2018 #AI #MachineLearning

— Christian Sandvig🐩 (@niftyc) February 24, 2018

Our Perspective

Is it inevitable that these data-driven systems will reflect the biases and prejudices of our society? Or are the people designing the systems not trying to prevent this bias?

If we examine the technical details, neither of those statements are quite true and the situation turns out to not be so dire. As most of the media coverage correctly points out, this is a problem caused by lack of balanced data. As IBM’s white paper shows, when these systems are trained with datasets that are well balanced they are very accurate. The important question to ask is why these systems were not trained with balanced datasets to begin with. The answer seems to be (as most of the articles suggest) that balanced datasets were not considered during the design of the systems. The lack of consideration is, in significant part, due to a lack of diversity in the AI community itself.

10/ @drfeifei's 2 major kind of #bias: one is the pipeline of #AI development from bias of data to the outcome of the bias, and the second is the human bias, the people who are developing #AI and the lack of diversity.

— Beto Saavedra (@betolive) May 10, 2018

Good summary @betolive ! Thank you! https://t.co/ctBaWt6gBb

— Fei-Fei Li (@drfeifei) May 10, 2018

On a broader note, face recognition might actually be the least of our worries with regards to the bias and fairness of AI systems. There are worrying examples of bias and discrimination in criminal sentencing, hiring, and mortgage grants. To make matters worse, recent research has shown that when multiple AI systems are used together they can amplify bias, even if each of the systems is bias-free.

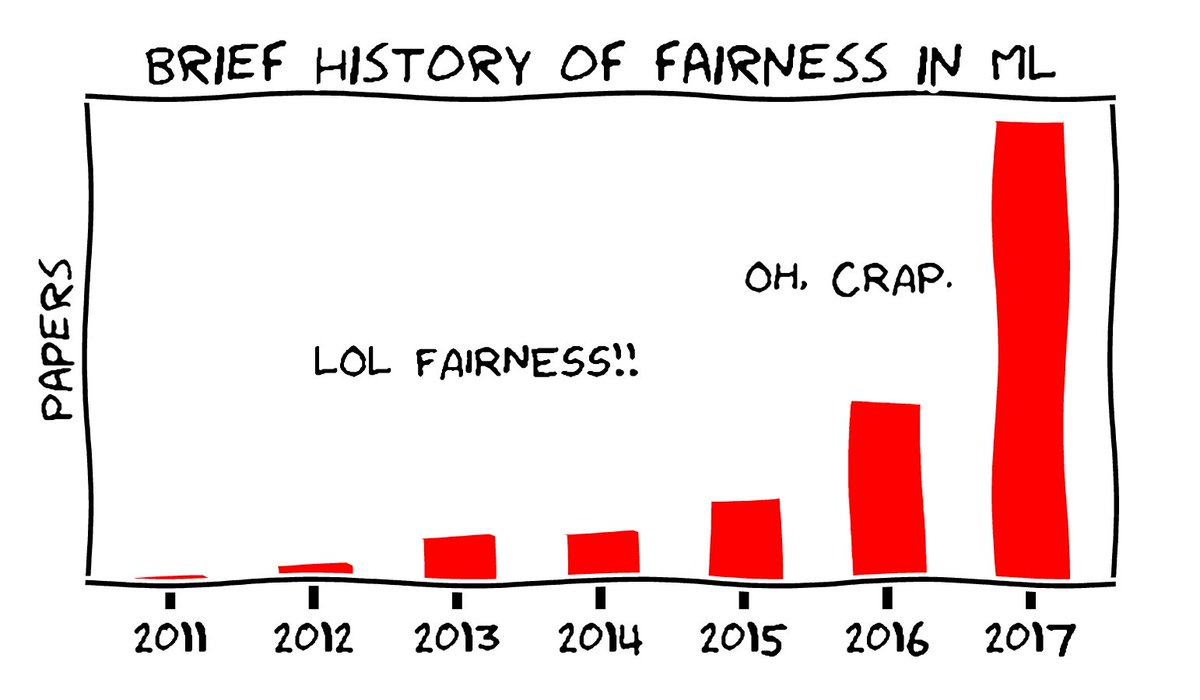

Although challenges exist, solutions to these challenges are actively being explored; a fast growing community of researchers are now hard at work on these solutions with formal approaches for ensuring Fairness, Accountability and Transparency in AI systems. It may be fair to say that some of the best minds in the field have set their sights on tackling these challenging problems!

Be woke. @mrtz's epic class on fairness in #MachineLearning is a must read for everyone using ML. https://t.co/nfoiJtoDZE pic.twitter.com/AvSrL9HduF

— Delip Rao (@deliprao) September 5, 2017

Excited to see 54 submissions to FATML 2018, up 12.5% from last year even though @fatconference just happened.

— Moritz Hardt (@mrtz) May 2, 2018

However, most of these researchers attack these problems from a technical perspective. As Kate Crawford points out in her excellent keynote at the NIPS 2017 conference, bias cannot be considered a purely technical problem.

“Bias is a highly complex issue that permeates every aspect of machine learning. We have to ask: who is going to benefit from our work, and who might be harmed? To put fairness first, we must ask this question.”

As Moustapha Cissé of Facebook AI Research says,

the datasets are a result of the problems being considered by the designers. If we focus on problems that are important to only a specific population of the world, the end result is these unbalanced datasets. These datasets then, are used for training the models that make biased and unfair decisions.

To ensure that a wide variety of problems are considered, there is also a need for a more diverse community of researchers and engineers in AI.

Looking forward to seeing @jovialjoy and @timnitgebru present their paper on bias in facial recognition systems at #FAT2018 this weekend—so much research needed to ensure AI is inclusive https://t.co/KeL0Aq6stx

— Mustafa Suleyman (@mustafasuleymn) February 24, 2018

So what of the media coverage of this problem? While the content of the news articles is well articulated and on point, hyperboles such as the ones in the headlines are harmful and misleading. In the short-term, as pointed out in this great blog post by researchers at Google, AI experts need to be aware of undesirable biases that might exist in the systems they are designing and look to mitigate them. Further, system designers should be mindful of all possible users of their system. The onus is on the AI community to be more inclusive and work towards greater diversity in datasets and in the community itself.

TLDR

Contrary to what some headlines said, the algorithms in question are not the issue. None inclusive datasets produced skewed results, but the same models showed remarkable accuracy when trained on a more racially diverse and balanced dataset. However, this is still a warning sign pointing at an underlying problem: lack of diversity in the AI community. In the short-term, researchers and engineers need to be aware of all their users and the fact that the systems they build may contain possible biases. While fast growing research in the fields of fairness, accountability and transparency provides hope for a technical solution, the AI community needs to also make significant efforts to become more inclusive and diverse.

-

A facial recognition system is a technology capable of identifying or verifying a person from a digital image or a video frame from a video source. ↩

-

A dataset is a collection of data. All Machine Learning systems are “trained” on a dataset. If you’re confused or intimidated by the term machine learning - watch this video ↩

-

When the dataset consists of more examples belonging to one group than the others it leads to an imbalance. For example, if the datasets consisted of more males than females it would be considered an imbalanced dataset. ↩