Tesla's Lethal Autopilot Crash — A Failing of UI as Much as AI

The tragedy might have been avoided if the limitations of the Autopilot were communicated more clearly

A “self-driving” car crashes, tragically killing its driver. Coverage centers on who is to blame, but seems to miss one key insight.

What Happened

On March 23, 2018, a Tesla Model X crashed into a median on Highway 101 in Mountain View, California, killing its occupant. As in a similar crash in May 2016, the vehicle’s “Autopilot” was enabled at the time of the crash and, as far as we know, the driver’s hands were not on the wheel.

Soon after, the National Transportation Safety Board (NTSB) and Tesla opened an investigation into the cause of the accident. This investigation is still ongoing. TechCrunch has a great writeup on the facts of the situation if you want to learn more. The current predominant theory is that Autopilot incorrectly followed road markings where the lane split at a left-lane exit/interchange.

The Reactions

The story around the crash developed gradually over weeks:

- Tesla initially released a statement outlining a few quick points about the accident, noting that the crash was likely made more severe due to the lack of a crash attenuator (a safety device built into the highway).

- A few days later, Tesla released a followup statement that contained a few important details: Autopilot was engaged during the accident and, according to the vehicle’s logs, the driver’s hands were not on the steering wheel at the time of the accident.

- Weeks later, the driver’s wife took to TV to discuss the crash, saying that her husband had always been “a really careful driver”.

This interview caused a flurry of activity:

- Tesla released a response[1] saying that

  > [The driver] was well aware that Autopilot was not perfect and, specifically, he told them it was not reliable in that exact location, yet he nonetheless engaged autopilot at that location, the crash happened on a clear day with several hundred feet of visibility ahead, which means that the only way for this accident to have occurred is if Mr. Huang was not paying attention to the road, despite the car providing multiple warnings to do so.

  They also noted that Autopilot could not avoid all accidents. Tesla was then removed from the NTSB investigation.

- The family’s lawyer released a statement as well, stating that[2]

  > [The car] took [the Driver] out of the lane that he was driving in, then it failed to break [sic], then it drove him into this fixed concrete barrier

At the same time, Tesla drivers around the country were able to reproduce Autopilot behavior similar to what caused the accident, both at other interchanges and at the original crash site. Many noted that this behavior was new, and that a recent over-the-air update had changed the way Autopilot reacted in similar circumstances.

Our Perspective

Tesla is unique among companies taking on the challenge of autonomous vehicles in that it doesn’t use LIDAR in its technology. Compounding this, the car that crashed appears to predate Tesla’s full self-driving hardware release and so has a limited sensor suite; it appears to have relied almost entirely on a camera for lane finding and car following.

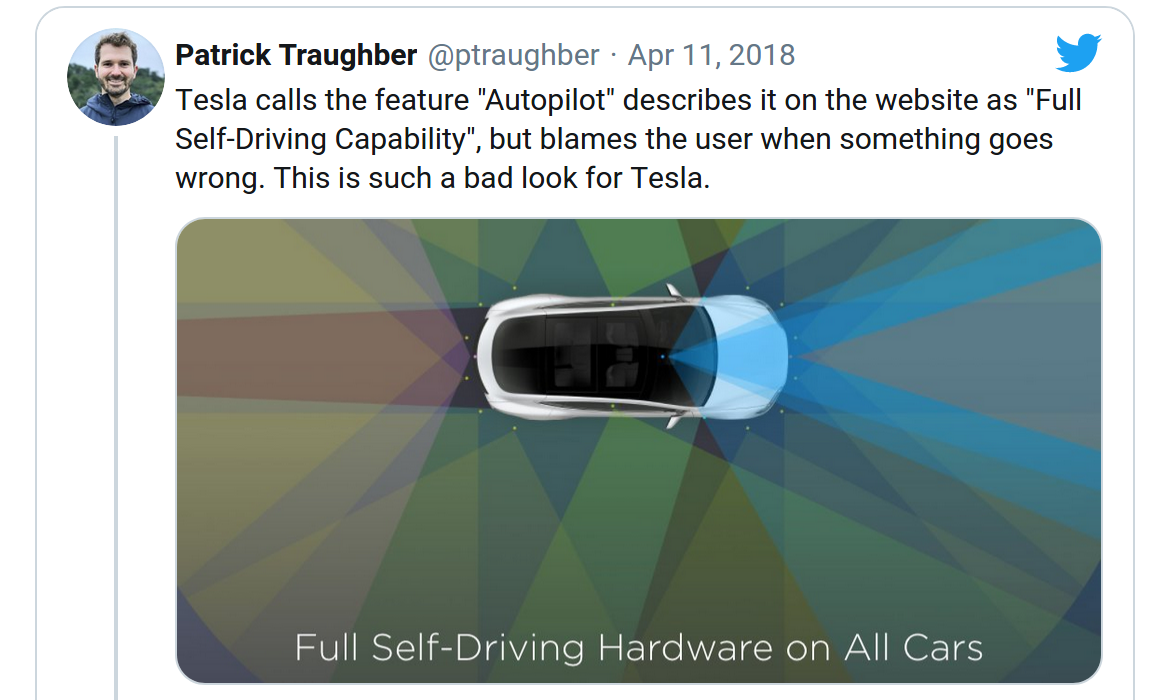

That’s similar to the adaptive cruise control that many other car manufacturers offer. Tesla, however, markets Autopilot as something more advanced:

"Tesla is extremely clear that Autopilot requires the driver to be alert and have hands on the wheel." Tesla's website features a person sitting in the driver's seat without their hands on the wheel: pic.twitter.com/38dsPzbPH3

— Patrick Traughber (@ptraughber) April 12, 2018

Tesla’s statement about the clear weather and the driver’s concern about the location also included the following:

> The fundamental premise of both moral and legal liability is a broken promise, and there was none here. Tesla is extremely clear that Autopilot requires the driver to be alert and have hands on the wheel.

Was there an explicit promise? Maybe not. But the hype around Tesla’s autonomous capabilities, led in no small part by the company’s CEO Elon Musk, does imply that you should trust the system. Musk originally said that Tesla would have Level 4 autonomy[3] by the beginning of 2018, but now says that it’s still a ways off.

People, for whatever reason, are all too trusting of autonomous systems. In the age of ever-advancing AI, experts and designers have a responsibility to make sure that users understand the limits of the systems they are marketing. This is especially true when, as is the case with Tesla’s vehicles, those exact capabilities may change over time.

In User Experience design, there’s a term, “friction”, for things which require the user to engage or think. Often, you want an interface to be “frictionless”, allowing users to do things without really thinking, as if by instinct. But sometimes you need friction to force users to engage with the system, for example when confirming an irreversible action.

Tesla’s Autopilot could use some more friction; currently it’s too easy to disengage as a driver, and that’s dangerous.

TLDR

Autopilot worked as one would expect a Level 2 self-driving car to work. However, given current information, this gave the driver a false sense of security. Tesla may have a responsibility to, ironically, make its users trust its product less.

*Josh works at Google, whose sibling company, Waymo, is a competitor to Tesla in the autonomous vehicle space, but the opinions expressed here are his own. The lead editor, Andrey, is a grad student at Stanford and has no affiliation with Tesla or Google.*

[1] Tesla doesn’t appear to have made an official press release with their statement, but it’s reproduced in full at the end of this article.

[3] “Level 4” autonomy is autonomy where the vehicle can drive every part of the trip, from driveway to driveway, without human intervention. It should be capable of doing this in common environments and conditions (whereas Level 5 autonomy extends the definition to more extreme environments and conditions).