Skynet This Week #5: top AI news from 07/16/18 - 07/30/18

Company news, weapons and bias concerns, AI history, and more!

AI Advances & Business

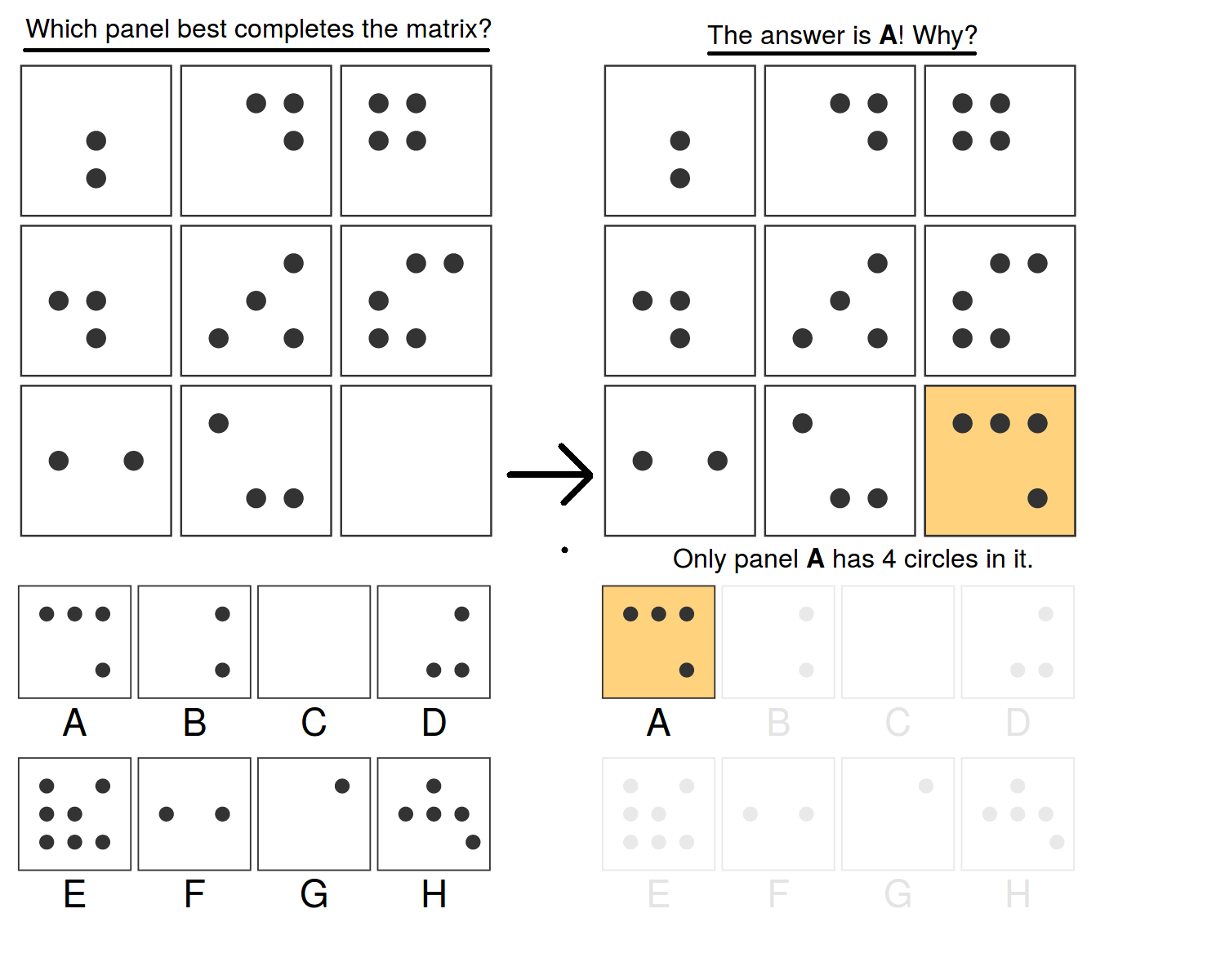

Measuring abstract reasoning in neural networks

DeepMind blog

A recent paper by DeepMind researchers proposed a new way to measure the ‘abstract reasoning’ of neural nets, that is, the extent to which they can generalize learned skills beyond mere pattern matching. The researchers concluded that recent groundbreaking AI models have limited abstract reasoning capabilities, and that researchers ought to be careful about how they evaluate the generalization capabilities of their models:

Recent literature has focused on the strengths and weaknesses of neural network-based approaches to machine learning problems, often based around their capacity or failure to generalise. Our results show that it might be unhelpful to draw universal conclusions about generalisation: the neural networks we tested performed well in certain regimes of generalisation and very poorly in others. Their success was determined by a range of factors, including the architecture of the model used and whether the model was trained to provide an interpretable “reason” for its answer choices. In almost all cases, the systems performed poorly when required to extrapolate to inputs beyond their experience, or to deal with entirely unfamiliar attributes; creating a clear focus for future work in this critical, and important area of research.

Google announces AutoML Vision, Natural Language, Translation, and Contact Center AI

Kyle Wiggers, Venture Beat

A key aspect of Google’s cloud AI strategy is to ‘democratize’ the technology – that is, make it easily usable and more importantly adaptable for different companies without requiring a lot of AI expertise. As they explain in their blog post:

“A significant gap exists between the extremes of what’s currently possible with machine learning. At one end, experienced practitioners such as data scientists use tools like TensorFlow and Cloud ML Engine to build custom solutions from the ground up. At the other end, pre-trained machine learning models like Cloud Vision API deliver immediate results with minimal investment and technical proficiency. But what about the countless customers that fall in between? Many have needs beyond what’s available with pre-trained models, but don’t have the skills or resources to build their own custom solutions.”

The new AutoML Vision, Natural Language, and Translation offerings are meant to serve this ‘in-between’ set of customers, letting them adapt the technology to their own needs. And that’s just AutoML; Google is also making its specialized in-house AI hardware accessible to all and addressing the specific needs of industries such as call centers, as they summarize at the end of their blog post:

From hardware like Cloud TPUs to software like AutoML and vertical solutions like Contact Center AI, we’re working to advance the state of the art while lowering the barrier to entry — serving customers with a wide spectrum of needs and expertise. And we’re doing this with the aim to enhance the human experience at the center of it all.

Facebook AI Research Expands With New Academic Collaborations

Yann LeCun (Chief AI Scientist), Facebook newsroom

Facebook’s AI research organization FAIR has announced that it is growing both geographically and in terms of research scope. It is collaborating with various notable professors to open new labs in Pittsburgh and Menlo Park and expand labs in London and Seattle. Notably, their research labs in Pittsburgh, Menlo Park, and elsewhere are adding a new focus on robotics, which established professors such as CMU’s Jessica Hodgins and Abhinav Gupta as well as Berkeley’s Jitendra Malik will help pursue. They note that all these professors will retain their positions at their respective universities part time:

This dual affiliation model is common across FAIR, with many of our researchers around the world splitting their time between FAIR and a university… This model allows people within FAIR to continue teaching classes and advising graduate students and postdoctoral researchers, while publishing papers regularly. This co-employment appointment concept is similar to how many professors in medicine, law, and business operate.

We’re excited to continue investing in academia, educating the next generation of researchers and engineers, and strengthening interaction across AI disciplines that can traditionally become siloed. Thank you to all the academics around the world who are collaborating with FAIR to advance AI.

AI Concerns & Policy

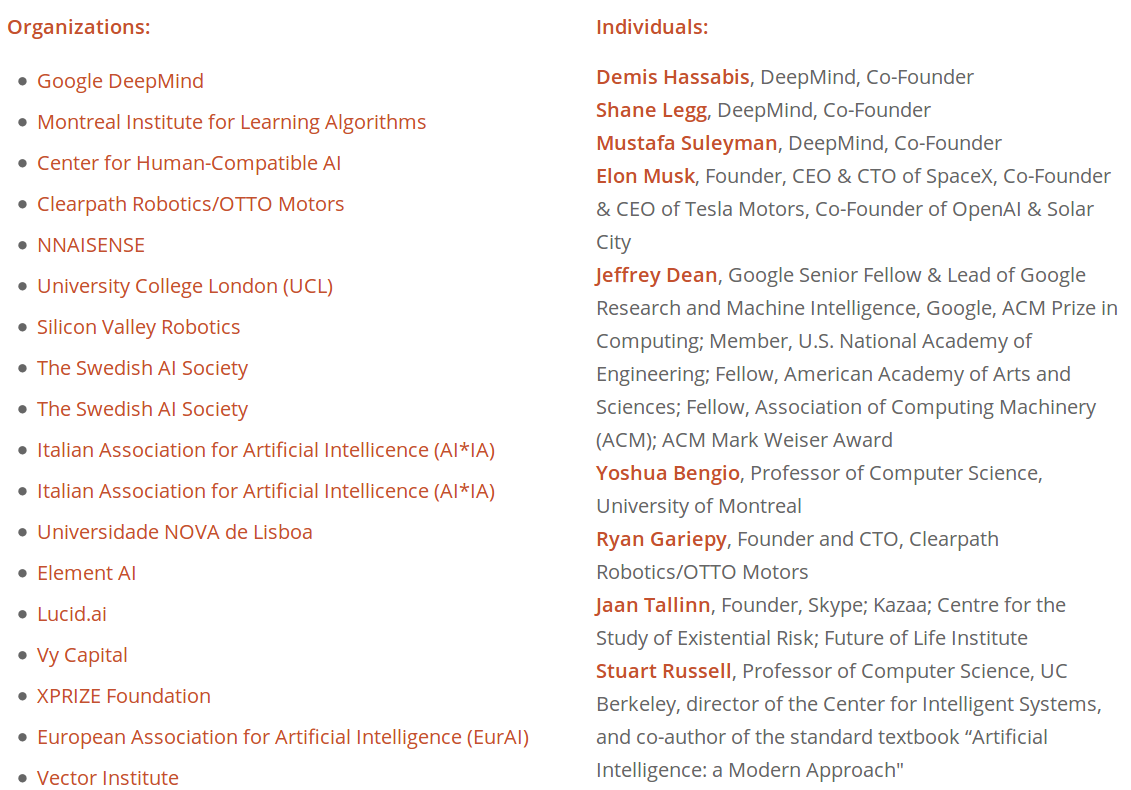

Lethal Autonomous Weapons Pledge

Multiple authors, Future of Life Institute

A large number of top AI researchers and institutions have signed a pledge stating that

“Artificial intelligence (AI) is poised to play an increasing role in military systems. There is an urgent opportunity and necessity for citizens, policymakers, and leaders to distinguish between acceptable and unacceptable uses of AI. In this light, we the undersigned agree that the decision to take a human life should never be delegated to a machine.”

and furthermore:

“We, the undersigned, call upon governments and government leaders to create a future with strong international norms, regulations and laws against lethal autonomous weapons. These currently being absent, we opt to hold ourselves to a high standard: we will neither participate in nor support the development, manufacture, trade, or use of lethal autonomous weapons. We ask that technology companies and organizations, as well as leaders, policymakers, and other individuals, join us in this pledge.”

The pledge has already been signed by 223 organizations and 2852 individuals, including notable figures in AI such as DeepMind’s founders, Yoshua Bengio, and many more.

The tech industry doesn’t have a plan for dealing with bias in facial recognition

James Vincent, The Verge

Although major tech companies that provide facial recognition services are aware of and working on addressing bias in those systems, The Verge found none would disclose specific details about how they are going about it:

“In an informal survey, The Verge contacted a dozen different companies that sell facial identification, recognition, and analysis algorithms. All the firms that replied said they were aware of the issue of bias, and most said they were doing their best to reduce it in their own systems. But none would share detailed data on their work, or reveal their own internal metrics.”

The piece then argues for standardizing benchmarks for measuring bias, as well as placing context limitations on the systems [these companies] develop if they want to mitigate harms.

Amazon’s Face Recognition Falsely Matched 28 Members of Congress With Mugshots

Jacob Snow, ACLU

The ACLU (American Civil Liberties Union) recently conducted a test of Amazon’s “Rekognition” facial recognition tool, and found that “the software incorrectly matched 28 members of Congress, identifying them as other people who have been arrested for a crime.” Their piece about the test goes on to say:

Matching people against arrest photos is not a hypothetical exercise. Amazon is aggressively marketing its face surveillance technology to police, boasting that its service can identify up to 100 faces in a single image, track people in real time through surveillance cameras, and scan footage from body cameras. A sheriff’s department in Oregon has already started using Amazon Rekognition to compare people’s faces against a mugshot database, without any public debate.

And that:

Congress must take these threats seriously, hit the brakes, and enact a moratorium on law enforcement use of face recognition. This technology shouldn’t be used until the harms are fully considered and all necessary steps are taken to prevent them from harming vulnerable communities.

Amazon’s Dr. Matt Wood wrote a response noting that “The ACLU has not published its data set, methodology, or results in detail, so we can only go on what they’ve publicly said” and listing several issues with the parts of the methodology that were disclosed (such as the confidence threshold, training dataset, and more). The ACLU responded by denying there were issues with their methodology and stating that “In its five stages of grief over its dangerous face surveillance product, Amazon is clearly stuck at denial”.

‘The discourse is unhinged’: how the media gets AI alarmingly wrong

Oscar Schwartz, The Guardian

Oscar Schwartz, a freelance writer and researcher with a PhD for a thesis uncovering the history of machines that write literature, has written a nice summary of the current state of hype-infused AI coverage and the issues with it (a sentiment we here at Skynet Today can clearly get behind). He suggests journalists should be more cautious in their reporting and seek the aid of researchers, but also cautions that incentives and popular culture make this a difficult problem to solve:

While closer interaction between journalists and researchers would be a step in the right direction, Genevieve Bell, a professor of engineering and computer science at the Australian National University, says that stamping out hype in AI journalism is not possible. Bell explains that this is because articles about electronic brains or pernicious Facebook bots are less about technology and more about our cultural hopes and anxieties.

AI History & Overviews

Image Stylization: History and Future (Part 3)

Aaron Hertzmann, Adobe Research Blog

A great conclusion to a three-part series on the topic of Image Stylization. Be sure to read parts one and two as well!

The man who invented the self-driving car (in 1986)

Janosch Delcker, Politico EU

A nice summary of how “[d]ecades before Google, Tesla and Uber got into the self-driving car business, a team of German engineers led by a scientist named Ernst Dickmanns had developed a car that could navigate French commuter traffic on its own.”

Yann LeCun: An AI Groundbreaker Takes Stock

Insights Team, Forbes

Forbes nicely summarized Yann LeCun’s four decades of significant contributions to the progress of AI, and in particular to neural net research.

Expert Opinions & Discussion within the field

Machine learning will be the engine of global growth

Erik Brynjolfsson, Financial Times

Erik Brynjolfsson, a professor at MIT and co-author of ‘The Second Machine Age’, briefly summarizes the conclusions of research that predicts Machine Learning will lead to “not only higher productivity growth, but also more widely shared prosperity”.

“In a [paper](https://www.nber.org/chapters/c14007.pdf) with Daniel Rock and Chad Syverson, we discuss how machine learning is an example of a “general-purpose technology”. These are innovations so profound that they trigger cascades of complementary innovations, accelerating the march of progress and growth — for example, the steam engine and electricity. When a GPT comes along, past performance is no longer a good guide to the future.”

The biggest misconceptions about AI: the experts’ view

Sweitze Roffel & Ian Evans, Elsevier

A nicely brief piece listing five experts’ views on important misconceptions about AI:

- Prof. Gary Marcus, NYU: “The biggest misconception around AI is that people think we’re close to it. We’re not anywhere near that. We’ve learned to engineer certain narrow problems like speech recognition very well. We’ve done that in ways that we couldn’t have imagined five or ten years ago. But the idea of having machines that can reason about the world in the ways that human beings can … I don’t think we’ve made any significant progress in that at all. Humans can be super flexible – they can learn something in one context and apply it in another. Machines can’t do that.”

- Prof. Max Welling, University of Amsterdam and UC Irvine: “It’s a glorified signal processing tool, but it can be super beneficial.”

- Prof. Joanna Bryson, University of Bath: “As for the algorithm that suddenly knows everything … the singularity – that’s impossible.”

- Elizabeth Ling, Elsevier: “One common misconception is that AI has suddenly happened. In reality, it’s a longstanding domain of science that’s been evolving.”

- Prof. Stuart Russell, UC Berkeley: “There’s a common misunderstanding that AI presents a risk because it will magically become conscious and spontaneously hate human beings. There’s no reason to be concerned about spontaneous malevolent consciousness.”

Steps Toward Super Intelligence I, How We Got Here

Rodney Brooks, Blog

Rodney Brooks offers a typically insightful look at past attempts at General AI and the significant problems that must be solved before General AI can be achieved.

“Some things just take a long time, and require lots of new technology, lots of time for ideas to ferment, and lots of Einstein and Weiss level contributors along the way.”

“I suspect that human level AI falls into this class. But that it is much more complex than detecting gravity waves, controlled fusion, or even chemistry, and that it will take hundreds of years.”

“Being filled with hubris about how tech (and the Valley) can do whatever they put their mind to may just not be nearly enough.”

Explainers


Has AI surpassed humans at translation? Not even close!

Sharon Zhou, Skynet Today

An article from us, going in depth on why Neural Machine Translation systems are not really comparable to human translators at present.

“But are NMT systems comparable to human translators, like these headlines imply? Not even close. As we’ll see, present day NMT systems are not quite as good as they have been purported to be. They still fail at many essential aspects of translation, and are very far from superseding humans.”

Google’s AutoML: Cutting Through the Hype

Rachel Thomas, Fast.ai

A concise and accessible argument as to why aspects of the marketing behind Google’s AutoML (discussed at the beginning of this digest) may be misleading.

The Real Problems with Neural Machine Translation

Delip Rao, Personal Blog

A slightly more technical and less detailed discussion about the failings of Neural Machine Translation systems.

Awesome Videos

Favorite Tweet

I agree with @math_rachel that tech companies and AI researchers who overhype their work are just as culpable as journalists for the AI Misinformation Epidemic.

— Abigail See (@abigail_e_see) July 27, 2018

Got AI expertise? Want to be part of the solution? Write for @skynet_today! More info here: https://t.co/TzB3pVFVMO https://t.co/ErVTCuFBCe

Favorite meme

— James Bradbury (@jekbradbury) July 20, 2018

That’s all for this digest! If you are not subscribed and liked this, feel free to subscribe below!