AI News in 2020: a Digest

An overview of the big AI-related stories of 2020

Overview

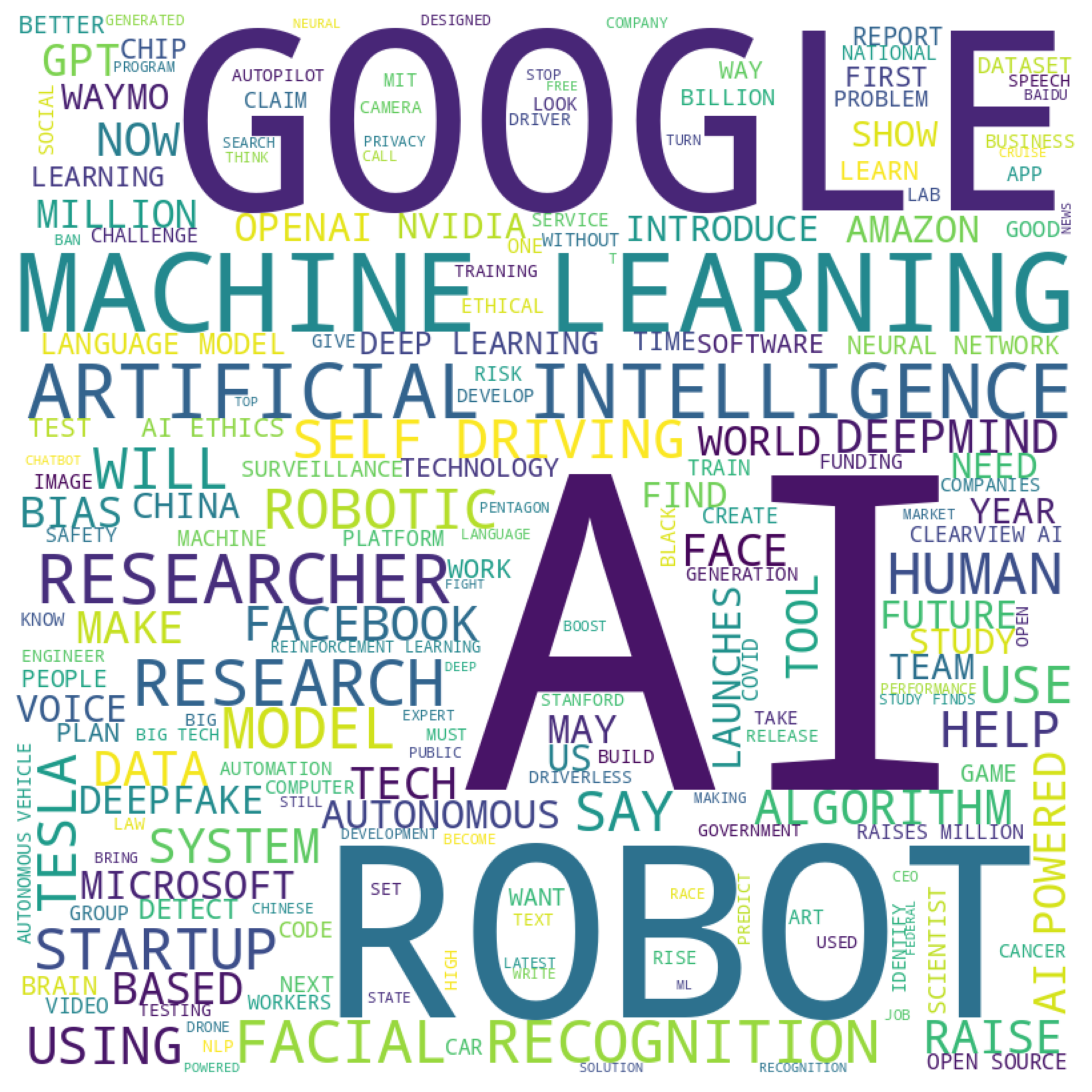

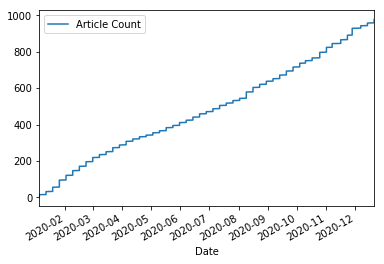

With 2020 (finally) drawing to a close, it’s a good time to reflect on what happened with AI in this most weird year. Above is a word cloud of the most common words used in the titles of articles we’ve curated in our ‘Last Week in AI’ newsletter over the past year. It reflects about 1,000 articles that we’ve included in the newsletter in 2020:

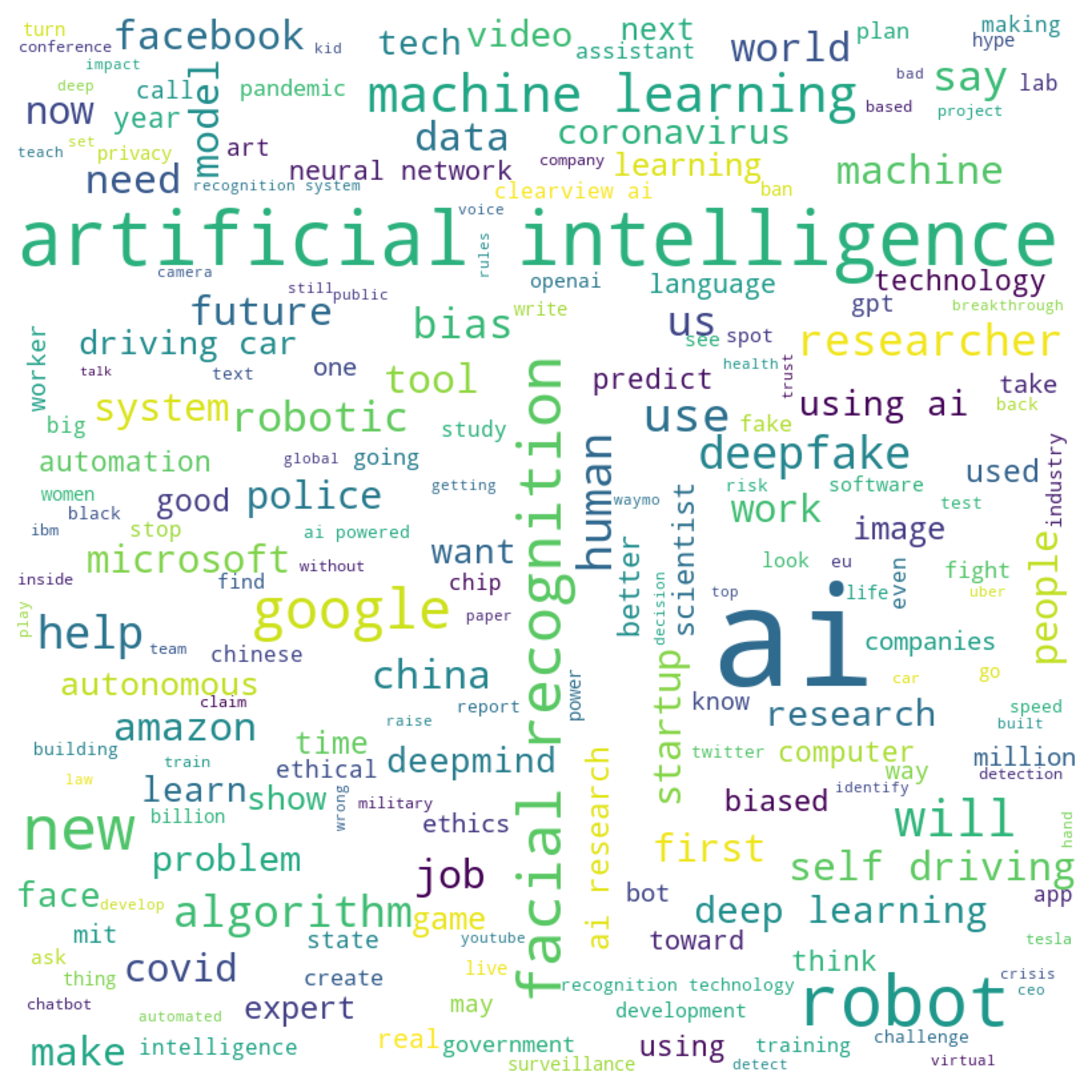

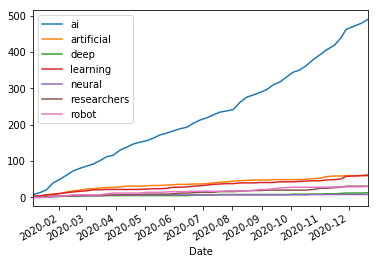

Unsurprisingly, the vague but recognizable term “AI” remained the most popular term to use in article titles, with specifics such as “Deep Learning” or “neural network” remaining comparatively rare:

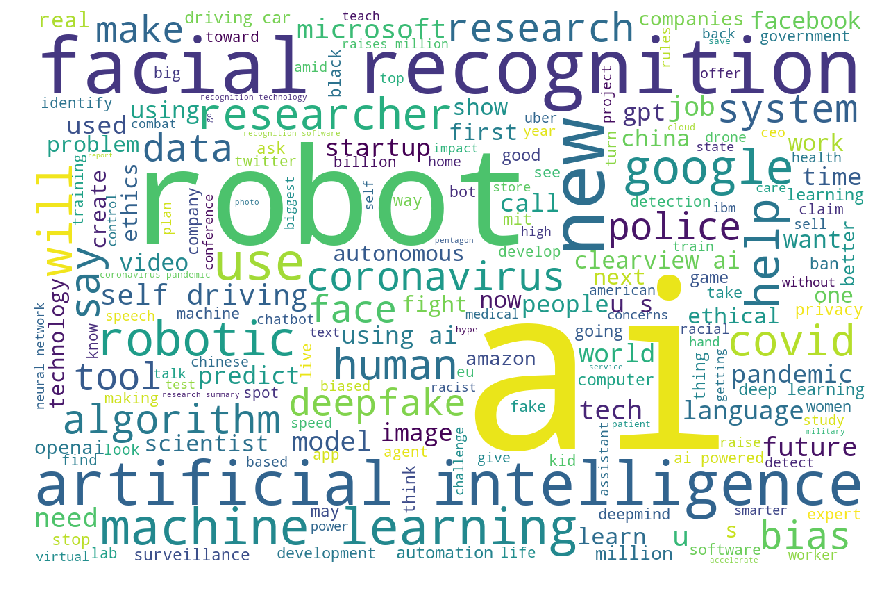

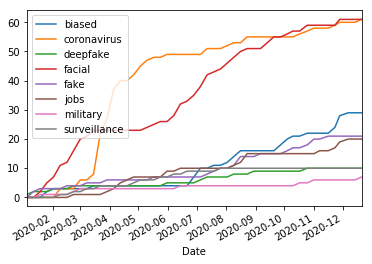

Digging a bit deeper, we find that Coronavirus and Facial Recognition were the biggest topics of the year, followed by bias, deepfakes, and other topics.

But enough overview – let’s go through the most significant articles we’ve curated from the past year, month by month. As with our newsletter, these articles will be about Advances & Business, Concerns & Hype, Analysis & Policy, and in some cases Expert Opinions & Discussion within the field. They are presented in chronological order, and represent a curated selection that we believe is particularly noteworthy. Click on the name of the month for the full newsletter release that started out that month.

January

Things started pretty calm in 2020, with a lot of discussion about what to expect from AI in the future, and some articles discussing issues with facial recognition and bias, which would become a trend throughout the year:

- In 2020, let’s stop AI ethics-washing and actually do something

- AI shows promise for breast cancer screening

- U.S. government limits exports of artificial intelligence software

- The Future of Politics Is Robots Shouting at One Another

- The US just released 10 principles that it hopes will make AI safer

- We’re fighting fake news AI bots by using more AI. That’s a mistake.

- Technology Can’t Fix Algorithmic Injustice

- The Secretive Company That Might End Privacy as We Know It

- Why using AI to screen job applicants is almost always a bunch of crap

February

February saw more discussions of the negative impacts of AI, along with some pieces highlighting efforts to use it for good, and the beginnings of AI being connected to the Coronavirus pandemic:

- “AI for Good” talk pushes tech usage to mitigate humans’ environmental impact

- Microsoft takes the wraps off $40 million, five-year ‘AI for Health’ initiative

- Facial Recognition Startup Clearview AI Is Struggling To Address Complaints As Its Legal Issues Mount

- YouTube’s algorithm seems to be funneling people to alt-right videos

- IEEE calls for standards to combat climate change and protect kids in the age of AI

- Artificial Intelligence Is Not Ready For The Intricacies Of Radiology

- Artificial intelligence applications surge as China battles to contain coronavirus epidemic

- Group Asks Federal Trade Commission To Regulate Use Of Artificial Intelligence In Pre-Employment Screenings

- Emotion AI researchers say overblown claims give their work a bad name

- The messy, secretive reality behind OpenAI’s bid to save the world

- If You Drive in Los Angeles, Police Can Track Your Every Move

- It’s 2020. Where are our self-driving cars?

- We’ve Just Seen the First Use of Deepfakes in an Indian Election Campaign

March

March was a big month with three stories standing out. First is the closing of Starsky Robotics, a promising startup that worked on self-driving trucks. In a detailed blog post, the founder discussed the immense challenges in technology, safety, and economics that face the autonomous driving industry.

Second is the publicity around Clearview AI, which violated many ethical and legal norms by scraping pictures of faces from the Internet to power a facial recognition system that lets its customers, from law enforcement to retail chains, search for anyone using a picture of their face.

- Clearview’s Facial Recognition App Has Been Used By The Justice Department, ICE, Macy’s, Walmart, And The NBA

- This Small Company Is Turning Utah Into a Surveillance Panopticon

- I Got My File From Clearview AI, and It Freaked Me Out

Lastly is the flood of reports on the fast-developing Covid-19 pandemic and the roles AI and robotics can (and cannot) play in alleviating it.

- Coronavirus is the first big test for futuristic tech that can prevent pandemics

- AI could help with the next pandemic–but not with this one

- This is how the CDC is trying to forecast coronavirus’s spread

- Should AI help make life-or-death decisions in the coronavirus fight?

- Biotech Companies Tap AI to Speed Path to Coronavirus Treatments

- Adorable Self-Driving Vans are Disinfecting Roads in China

- COVID-19 pandemic prompts more robot usage worldwide

- The Covid-19 Pandemic Is a Crisis That Robots Were Built For

- If Robots Steal So Many Jobs, Why Aren’t They Saving Us Now?

April

April saw a continuation of many stories centered on Covid-19, with some exceptions more related to ethical AI development:

- Scientists develop AI that can turn brain activity into text

- Using AI responsibly to fight the coronavirus pandemic

- UC Berkeley scientists spin up a robotic COVID-19 testing lab

- Debate flares over using AI to detect Covid-19 in lung scans

- AI can help with the COVID-19 crisis - but the right human input is key

- Physical Distancing Boosts AI-Powered Online Education in China

- Stanford researchers propose AI in-home system that can monitor for coronavirus symptoms

- Even the Pandemic Doesn’t Stop Europe’s Push to Regulate AI

- Toward Trustworthy AI Development: Mechanisms for Supporting Verifiable Claims

- Computers Already Learn From Us. But Can They Teach Themselves?

- Robots Welcome to Take Over, as Pandemic Accelerates Automation

- MIT Cuts Ties With a Chinese AI Firm Amid Human Rights Concerns

- AI can tackle the climate emergency - if developed responsibly

May

May was much like April, with a lot of focus on Covid-19 and a mix of stories on ethics, jobs, and advancements:

- Artificial Intelligence Won’t Save Us From Coronavirus

- Meet Moxie, a Social Robot That Helps Kids With Social-Emotional Learning

- OpenAI introduces Jukebox, a new AI model that generates genre-specific music with lyrics

- How to make sense of 50,000 coronavirus research papers

- OpenAI begins publicly tracking AI model efficiency

- An AI can simulate an economy millions of times to create fairer tax policy

- How A.I. Steered Doctors Toward a Possible Coronavirus Treatment

- Clearview AI to stop selling controversial facial recognition app to private companies

- The pandemic is emptying call centers. AI chatbots are swooping in

- Beware of these futuristic background checks

- Americans don’t know why they don’t trust self-driving cars

- Algorithms associating appearance and criminality have a dark past

- Eye-catching advances in some AI fields are not real

June

This month saw the massive protests following George Floyd’s killing, leading many to re-examine police conduct in the U.S. Within the AI community, this often meant questioning police use of facial recognition technologies and the inherent bias in the deployed AI algorithms. It is against this backdrop that companies like Amazon and IBM paused sales of facial recognition software to law enforcement, and many nuanced conversations followed.

- Here Are The Minneapolis Police’s Tools To Identify Protesters

- Wrongfully Accused by an Algorithm

- A Case for Banning Facial Recognition

- The two-year fight to stop Amazon from selling face recognition to the police

- ACLU Statement on Amazon Face Recognition Moratorium

- IBM Leads, More Should Follow: Racial Justice Requires Algorithmic Justice and Funding

- A deep learning pioneer’s teachable moment on AI bias

Other news included:

- The startup making deep learning possible without specialized hardware

- The World’s Biggest A.I. Conference Is Going Virtual, and Finally Becoming More Inclusive

- This startup is using AI to give workers a “productivity score”

July

This month the publicity around OpenAI’s GPT-3, a very large and flexible language model, began to soar as the company released results from its private-beta trials. Although the GPT-3 paper was published in May, it wasn’t until now that people started to realize the extent of its potential applications, from writing code to translating legalese, as well as its limitations and potential for abuse.

Other news included:

- How deepfakes could actually do some good

- AI’s Carbon Footprint Problem

- I Chatted With a Therapy Bot to Ease My Covid Fears. It Was Bizarre.

- Don’t ask if artificial intelligence is good or fair, ask how it shifts power

- MIT researchers warn that deep learning is approaching computational limits

- An invisible hand: Patients aren’t being told about the AI systems advising their care

- I Am a Model and I Know That Artificial Intelligence Will Eventually Take My Job

- An AI hiring startup promising bias-free results wants to predict job-hopping

August

Next, discussion of GPT-3 kept popping up, along with more of the usual concerns about facial recognition, bias, and jobs. Discussion of the Coronavirus mostly dwindled.

- Face masks are breaking facial recognition algorithms, says new government study

- Rite Aid deployed facial recognition system in hundreds of U.S. stores

- AI-Powered “Genderify” Platform Shut Down After Bias-Based Backlash

- AI is struggling to adjust to 2020

- Millions of Americans Have Lost Jobs in the Pandemic — And Robots and AI Are Replacing Them Faster Than Ever

- The Panopticon Is Already Here

- Cheap, Easy Deepfakes Are Getting Closer to the Real Thing

- The hack that could make face recognition think someone else is you

- Pro-China Propaganda Act Used Fake Followers Made With AI-Generated Images

- ICE just signed a contract with facial recognition company Clearview AI

- Police built an AI to predict violent crime. It was seriously flawed

- The Quiet Growth of Race-Detection Software Sparks Concerns Over Bias

- NYPD Used Facial Recognition Technology In Siege Of Black Lives Matter Activist’s Apartment

- Facebook’s AI for detecting hate speech is facing its biggest challenge yet

- AI Slays Top F-16 Pilot In DARPA Dogfight Simulation

- Hulu deepfaked its new ad. It won’t be the last.

- AI Algorithm Reaches Equivalent Accuracy of Average Radiologist

- GPT-3 Is an Amazing Research Tool. But OpenAI Isn’t Sharing the Code.

September

Concerns over bias, facial recognition, and other issues with AI really came to the fore this month, with some discussion of progress also mixed in.

- Robotics, AI, and Cloud Computing Combine to Supercharge Chemical and Drug Synthesis

- AI researchers use heartbeat detection to identify deepfake videos

- California Utilities Hope Drones, AI Will Lower Risk of Future Wildfires

- AI standards launched to help tackle problem of overhyped studies

- The medical AI floodgates open, at a cost of $1000 per patient.

- Tesla’s ‘Full Self-Driving Capability’ Falls Short of Its Name

- The Guardian’s GPT-3-generated article is everything wrong with AI media hype

- Why kids need special protection from AI’s influence

- Face-mask recognition has arrived - for better or worse

- Controversial facial-recognition software used 30,000 times by LAPD in last decade, records show

- A sheriff launched an algorithm to predict who might commit a crime. Dozens of people said they were harassed by deputies for no reason.

- Twitter and Zoom’s algorithmic bias issues

- YouTube Removed Twice As Many Videos After Switch to AI Moderation

- Microsoft teams up with OpenAI to exclusively license GPT-3 language model

- A controversial photo editing app slammed for AI-enabled ‘blackface’ feature

October

October was a more positive month, with many more stories regarding advancements and uses of AI and fewer concerning its negative aspects.

- Toyota Research Demonstrates Ceiling-Mounted Home Robot

- AI Can Help Patients - but Only If Doctors Understand It

- The National Guard’s Fire-Mapping Drones Get an AI Upgrade

- IBM open-sources AI for optimizing satellite communications and predicting space debris trajectories

- ExamSoft’s remote bar exam sparks privacy and facial recognition concerns

- Infighting, Busywork, Missed Warnings: How Uber Wasted $2.5 Billion on Self-Driving Cars

- A GPT-3 bot posted comments on Reddit for a week and no one noticed

- Nvidia unveils Maxine, a managed cloud AI videoconferencing service

- Microsoft and partners aim to shrink the ‘data desert’ limiting accessible AI

- A radical new technique lets AI learn with practically no data

- Access Now resigns from Partnership on AI due to lack of change among tech companies

- Introducing the First AI Model That Translates 100 Languages Without Relying on English

- Facial recognition datasets are being widely used despite being taken down due to ethical concerns. Here’s how.

- Whisper announces $35M Series B to change hearing aids with AI and subscription model

- Facebook uses AI to forecast COVID-19 spread across the US

November

This month was much like the last, with discussions around ethics and issues with AI continuing, alongside various advancements demonstrating how fast the field is moving.

- How the U.S. patent office is keeping up with AI

- Splice doubles users, teaches AI to sell Similar Sounds

- MIT researchers say their AI model can identify asymptomatic COVID-19 carriers

- AI agreement to enhance environmental monitoring, weather prediction

- The creators of South Park have a new weekly deepfake satire show

- Who am I to decide when algorithms should make important decisions?

- AI pioneer Geoff Hinton: “Deep learning is going to be able to do everything”

- Pope’s November prayer intention: that progress in robotics and AI “be human”

- AI is wrestling with a replication crisis

- The US Government Will Pay Doctors to Use These AI Algorithms

- Microsoft and OpenAI propose automating U.S. tech export controls

- Facebook says AI has fueled a hate speech crackdown

- Designed to Deceive: Do These People Look Real to You?

- Big pharma is using AI and machine learning in drug discovery and development to save lives

- Google: BERT now used on almost every English query

December

This month saw another impressive AI development touted as a breakthrough by academics and the press. DeepMind’s AlphaFold 2 made a significant advance with its results in the biennial protein structure prediction competition, beating competitors and the previous AlphaFold 1 by large margins. While many experts agree that protein folding hasn’t been “solved” and caution against unfounded optimism regarding the algorithm’s immediate applications, there is little doubt that AlphaFold 2 and similar systems will have big implications for the future of biology.

In the AI community, another big news story was Google’s firing of Timnit Gebru, a leading AI ethics researcher, over disagreements regarding her recent work highlighting bias and other concerns with AI language models. This sparked a number of pointed conversations in the field, from the role of race and the lack of diversity in AI to corporate censorship in industry labs.

- Google Researcher Says She Was Fired Over Paper Highlighting Bias in A.I.

- Timnit Gebru: Google’s ‘dehumanizing’ memo paints me as an angry Black woman

- The Dark Side of Big Tech’s Funding for AI Research

- “I started crying”: Inside Timnit Gebru’s last days at Google—and what happens next

Other news:

- Tiny four-bit computers are now all you need to train AI

- New York City Considers Regulating AI Hiring Tools

- AI needs to face up to its invisible-worker problem

Podcast

Check out our weekly podcast covering these stories! Website | RSS | iTunes | Spotify | YouTube