AI News in 2021: a Digest

An overview of the big AI-related stories from 2021

Overview

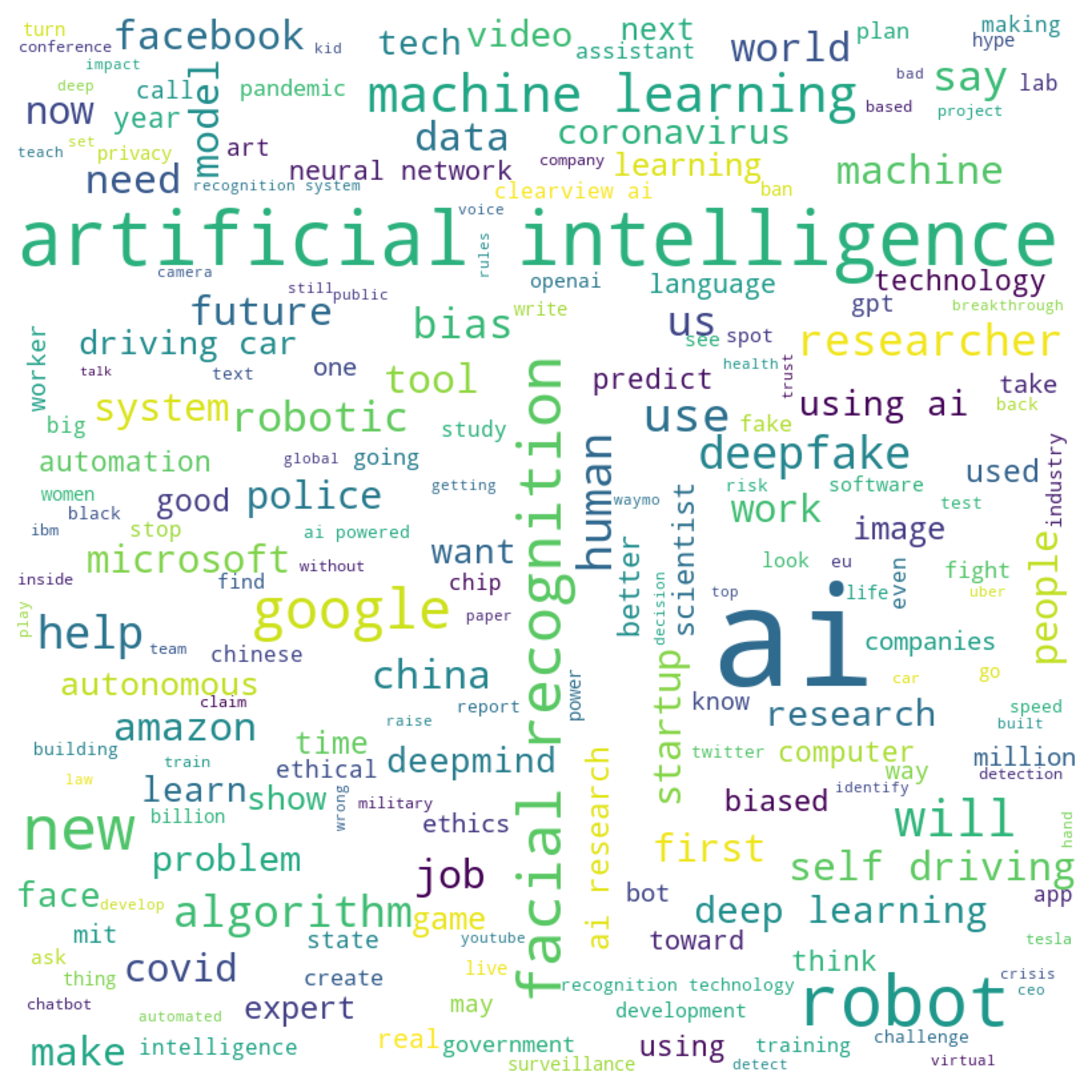

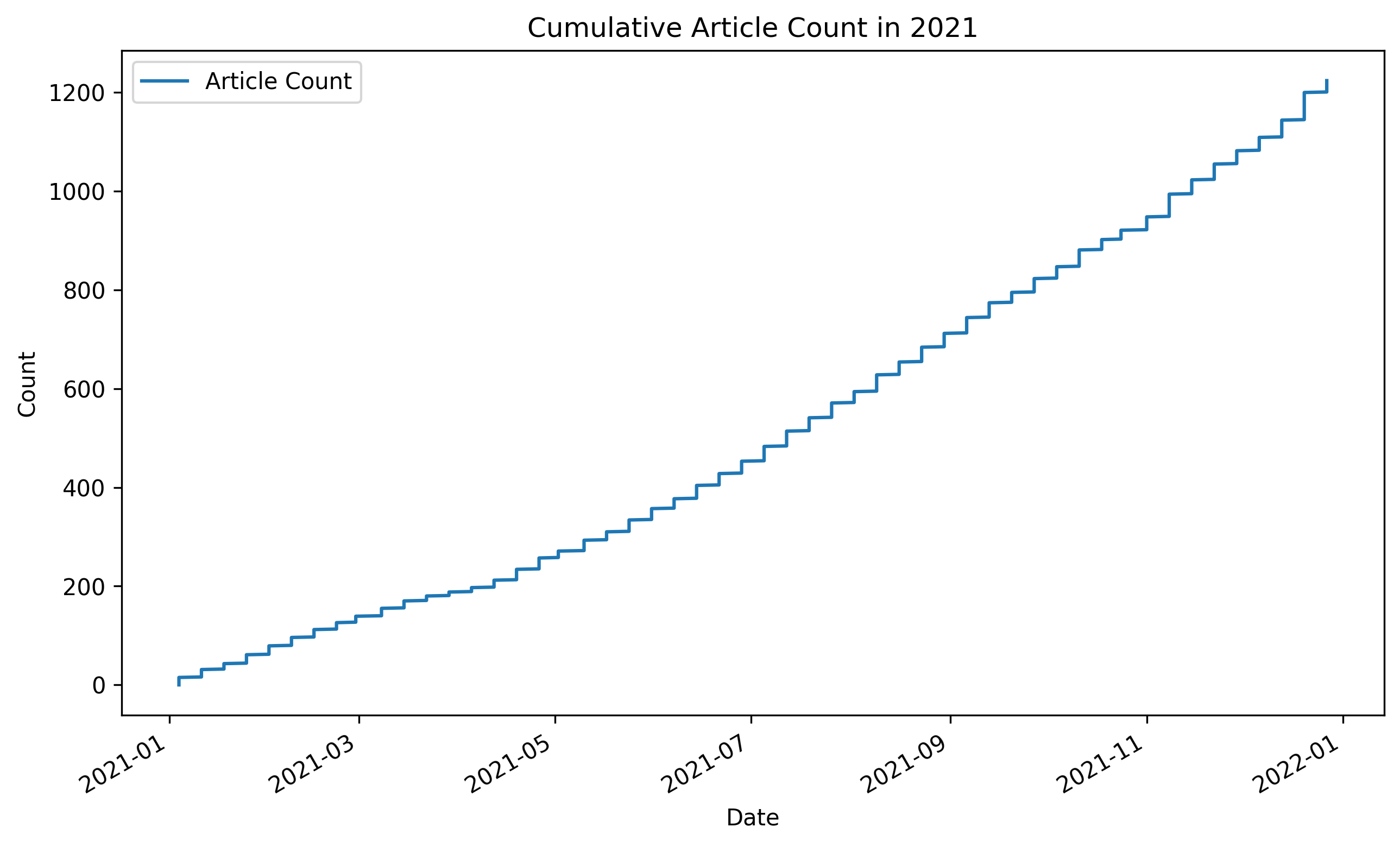

With 2021 over, we’d like to reflect on what’s happened in AI during a year that began in the midst of the pandemic, and ended still in the midst of the pandemic. Above is a wordcloud of the most common words used in titles of articles we’ve curated in our ‘Last Week in AI’ newsletter over this past year. This reflects about 1250 articles that we’ve included in the newsletter in 2021:

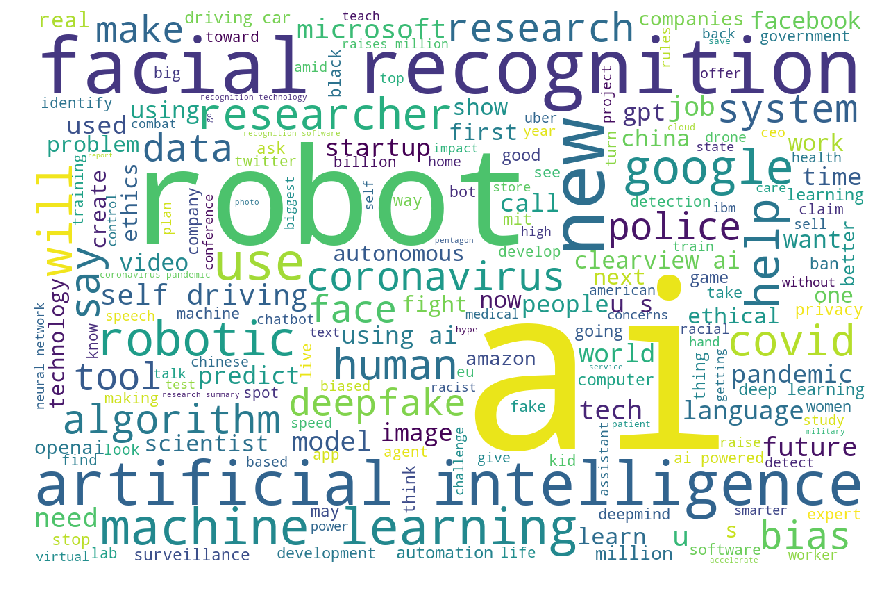

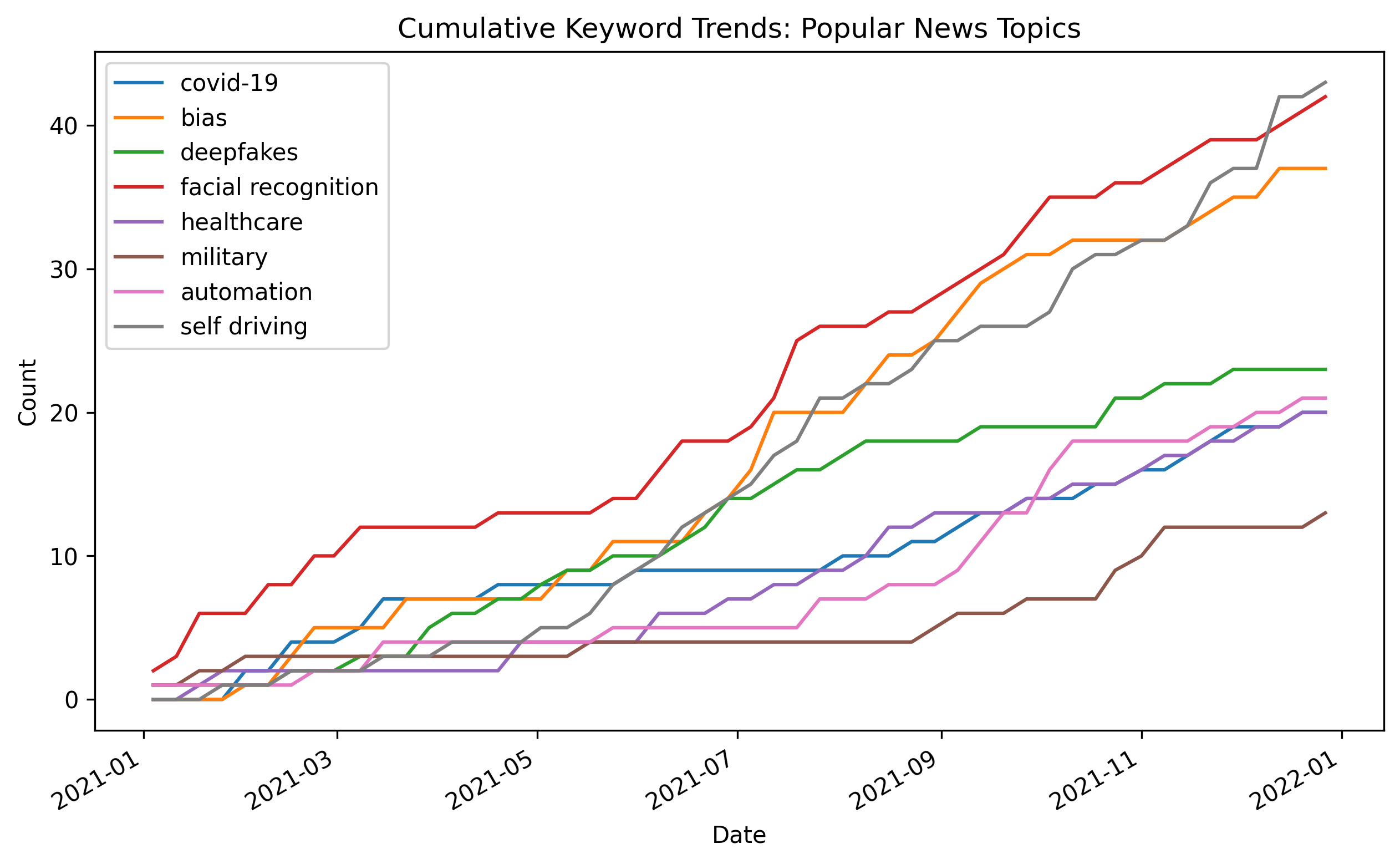

Digging a bit deeper, we can see the most popular topics covered in these news articles based on keywords in their titles:

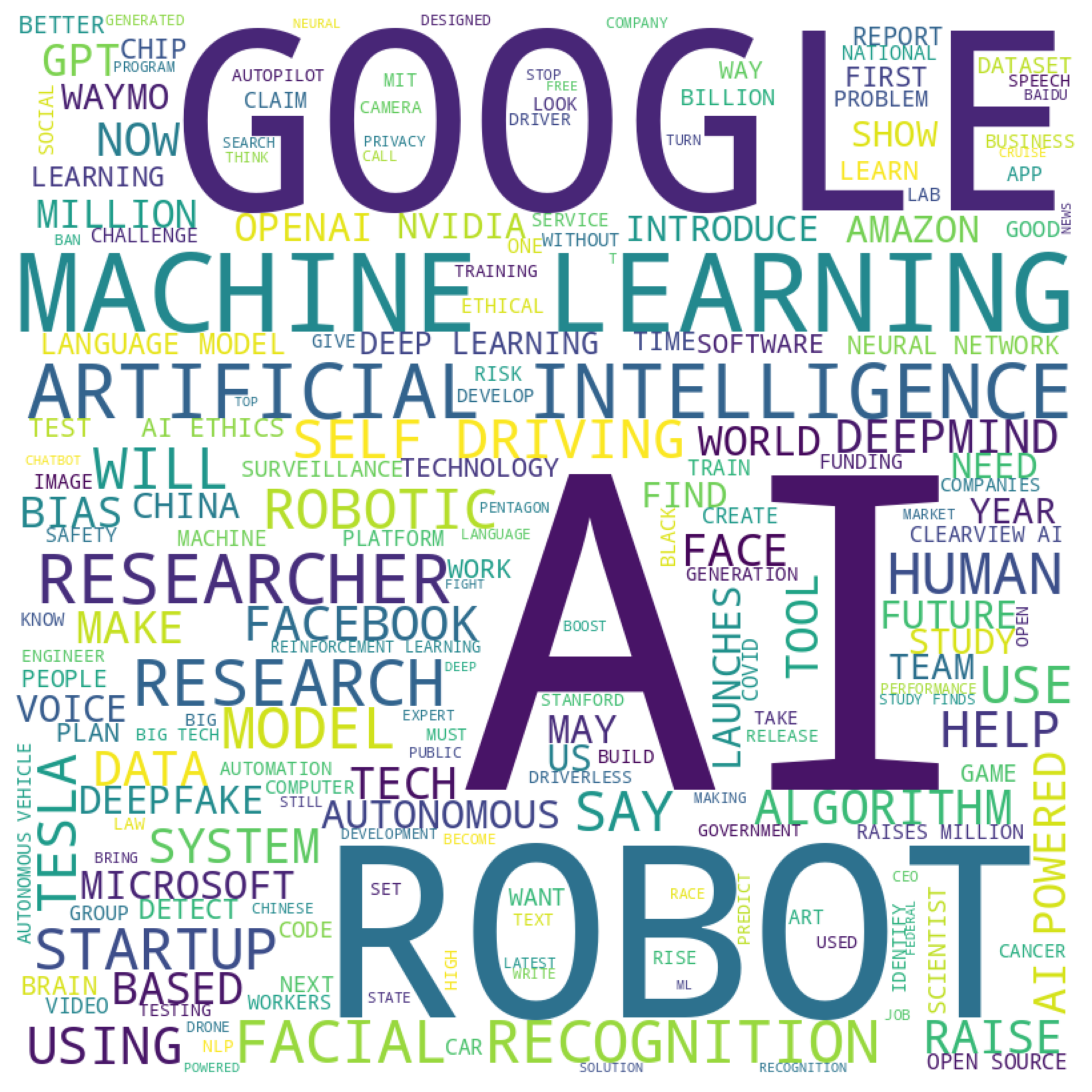

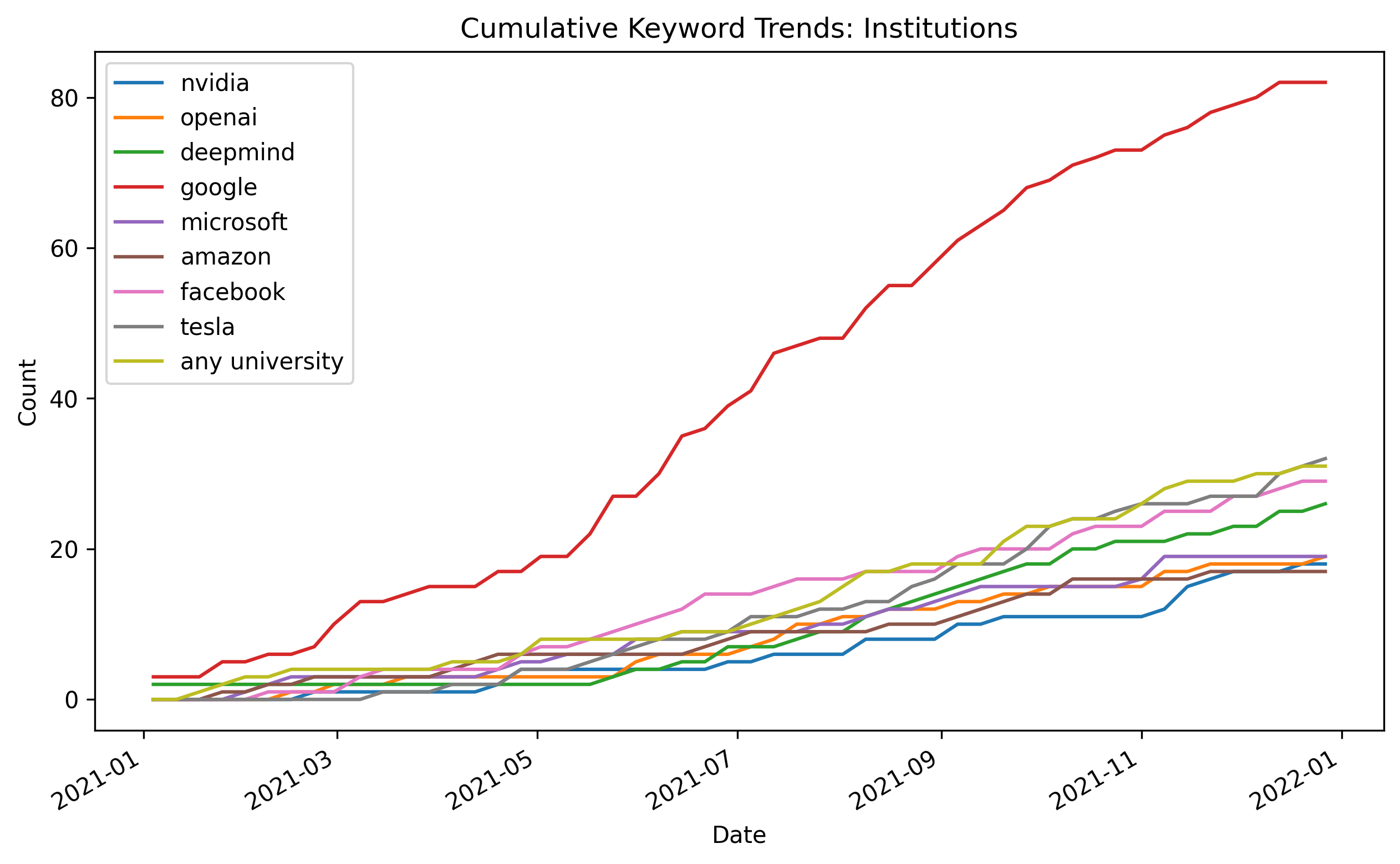

Among institutions, Google still receives by far the most coverage:

But enough overview – let’s go through the most significant articles we’ve curated from the past year, month by month.

As with our newsletter, these articles will be about Advances & Business, Concerns & Hype, Analysis & Policy, and in some cases Expert Opinions & Discussion within the field. They are presented in chronological order, and represent a curated selection that we believe is particularly noteworthy.

January

Newsletter links: Week 1 | Week 2 | Week 3 | Week 4

Highlights:

- New York suspends facial recognition use in schools

- A Black man spent 10 days in jail after he was misidentified by facial recognition, a new lawsuit claims

- This avocado armchair could be the future of AI

- Why we must democratize AI to invest in human prosperity, with Frank Pasquale

- Use of Clearview AI facial recognition tech spiked as law enforcement seeks to identify Capitol mob

- National AI Initiative Office launched by White House

Our Short Summary: A big topic carried over from last year is the explosion of facial recognition software and the growing public pushback it is receiving, and January started off with new lawsuits and regulations on its use. Separately, OpenAI publicized an impressive new model called DALL-E that is able to generate pictures from language prompts.

February

Newsletter links: Week 1 | Week 2 | Week 3 | Week 4 | Week 5

Highlights:

- These crowdsourced maps will show exactly where surveillance cameras are watching

- Why the OECD wants to calculate the AI compute needs of national governments

- Deepfake porn is ruining women’s lives. Now the law may finally ban it

- Band of AI startups launch ‘rebel alliance’ for interoperability

- Google fires researcher Margaret Mitchell amid chaos in AI division

Our Short Summary: Another thread carried over from last year is the turmoil at Google’s Ethical AI team. Following the firing of researcher Timnit Gebru, Google fired Margaret Mitchell, leading to more controversy in the press. Again on the topic of facial recognition, a crowdsourced map was produced by Amnesty International to expose where these cameras might be watching. An OECD task force led by former OpenAI policy director Jack Clark was formed to calculate compute needs for national governments in an effort to craft better-informed AI policy.

March

Newsletter links: Week 1 | Week 2 | Week 3 | Week 4

Highlights:

- The 2021 AI Index: Major Growth Despite the Pandemic

- Clearview AI sued in California by immigrant rights groups, activists

- How Facebook got addicted to spreading misinformation

- Underpaid Workers Are Being Forced to Train Biased AI on Mechanical Turk

- A researcher turned down a $60k grant from Google because it ousted 2 top AI ethics leaders: ‘I don’t think this is going to blow over’

Our Short Summary: The AI Index report released in March paints an optimistic picture of the future of AI development - we are seeing significant increases in private AI R&D, especially in healthcare. Simultaneously, concerns about AI continue to manifest. Karen Hao of the MIT Technology Review interviewed an important player in Facebook’s AI Ethics group, and found that Facebook was over-focusing on AI bias at the expense of grappling with the more destructive features of its AI systems. In another development stemming from Google’s Ethical AI fallout, a researcher publicly rejected a grant from the behemoth.

April

Newsletter links: Week 1 | Week 2 | Week 3 | Week 4 | Week 5

Highlights:

- Boston Dynamics unveils Stretch: a new robot designed to move boxes in warehouses

- Forget Boston Dynamics. This robot taught itself to walk

- Analysis on U.S. AI Workforce

- Google is poisoning its reputation with AI researchers

- Europe seeks to limit use of AI in society

- EU is cracking down on AI, but leaves a loophole for mass surveillance

Our Short Summary: Another report released in April, the Analysis on U.S. AI Workforce, shows that the number of AI workers grew 4x as fast as all U.S. occupations.

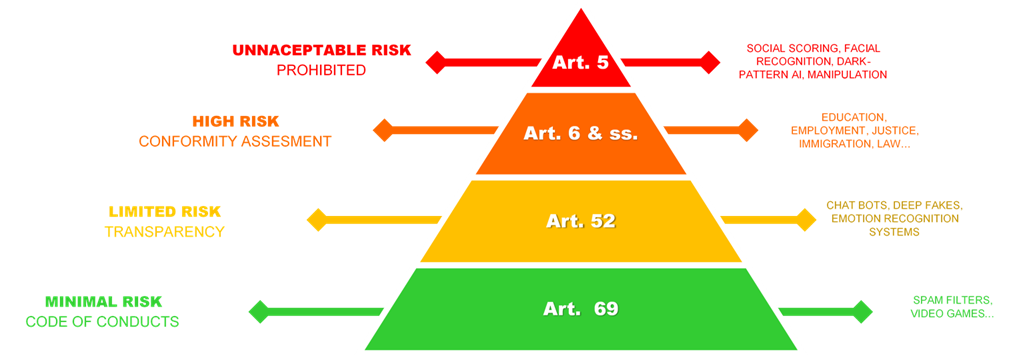

This month we also saw more reports on the EU’s growing regulation of commercial AI applications in high-risk areas.

This is an important legislative framework that may be borrowed by other governments in the future.

May

Newsletter links: Week 1 | Week 2 | Week 3 | Week 4

Highlights:

- Google and UC Berkeley Propose Green Strategies for Large Neural Network Training

- How to stop AI from recognizing your face in selfies

- Making AI algorithms show their work

- Google Plans to Double AI Ethics Research Staff

- Google’s plan to make search more sentient

- Sharing learnings about our image cropping algorithm

Our Short Summary: Late May saw Google announce new large language models that could significantly change how search and other Google products work in the future. This builds on the explosion of language model sizes over the last two years. Perhaps this drive to commercialize large language models is behind Google’s firing of its Ethical AI team leads months before, who at the time were focused on characterizing the potential harm of using such models.

June

Newsletter links: Week 1 | Week 2 | Week 3 | Week 4 | Week 5

Highlights:

- The age of killer robots may have already begun

- King County is first in the country to ban facial recognition software

- AI still sucks at moderating hate speech

- Google is using AI to design its next generation of AI chips more quickly than humans can

- The False Comfort of Human Oversight as an Antidote to A.I. Harm

- DeepMind scientist calls for ethical AI as Google faces ongoing backlash

- LinkedIn’s job-matching AI was biased. The company’s solution? More AI.

Our Short Summary: A worrying report from the U.N. surfaced in June that describes the possibility that a drone autonomously targeted and attacked soldiers during last year’s conflict in Libya. The report remains to be independently verified, but the proliferation of lethal autonomous weapons looms large, as there are no global agreements that limit their use. As a sign of things to come, June also saw King County ban government use of facial recognition technology, the first such regulation in the U.S.

July

Newsletter links: Week 1 | Week 2 | Week 3 | Week 4

Highlights:

- AI Generated Art Scene Explodes as Hackers Create Groundbreaking New Tools

- Why Robots Can’t Be Counted On to Find Survivors in the Florida Building Collapse

- Maine Now Has the Toughest Facial Recognition Restrictions in the U.S.

- How TikTok’s hate speech detection tool set off a debate about racial bias on the app

- US sanctions a Chinese facial recognition company with Silicon Valley funding

- DeepMind’s AI for protein structure is coming to the masses

- Is GitHub Copilot a blessing, or a curse?

- Something Bothering You? Tell It to Woebot

Our Short Summary: CLIP+VQGAN is a technique that pairs OpenAI’s CLIP model with the VQGAN image generator, allowing users to direct AI image generation with text prompts. While CLIP has been public since January, AI-generated art using this technique really took off during the summer. The details of DeepMind’s AlphaFold2 were also shared this month and the technology was open sourced. As throughout the year, concerns and actions related to facial recognition were prominent this month. GitHub Copilot, an AI-powered ‘autocomplete for programming’, was announced to much excitement.

August

Newsletter links: Week 1 | Week 2 | Week 3 | Week 4 | Week 5

Highlights:

- China built the world’s largest facial recognition system. Now, it’s getting camera-shy.

- 100+ Stanford Researchers Publish 200+ Page Paper on the AI Paradigm Shift Introduced by Large-Scale Models

- NSF partnerships expand National AI Research Institutes to 40 states

- A new generation of AI-powered robots is taking over warehouses

- The slow collapse of Amazon’s drone delivery dream

- OpenAI can translate English into code with its new machine learning software Codex

- Twitter AI bias contest shows beauty filters hoodwink the algorithm

- Elon Musk unveils ‘Tesla bot,’ a humanoid robot that would be made from Tesla’s self-driving AI

- “Flying in the Dark”: Hospital AI Tools Aren’t Well Documented

Our Short Summary: This month a team of 100+ Stanford researchers published a 200+ page paper, On the Opportunities and Risks of Foundation Models. Foundation models are large models trained on vast amounts of data, like GPT-3, that can be transferred to downstream tasks that don’t have a lot of data. While the name “foundation” and the way the paper was released stirred up some controversy, it is undeniable that large pre-trained models will continue to play a large role in AI moving forward, and the paper gives many insights into their capabilities and limitations. Otherwise, the typical trend of many stories about facial recognition, bias, and self-driving persisted.

September

Newsletter links: Week 1 | Week 2 | Week 3 | Week 4

Highlights:

- Only Humans, Not AI Machines, Can Get a U.S. Patent, Judge Rules

- The Fight to Define When AI Is ‘High Risk’

- Project Maven: Amazon And Microsoft Scored $50 Million In Pentagon Surveillance Contracts After Google Quit

- In the US, the AI Industry Risks Becoming Winner-Take-Most

- How AI’s full power can accelerate the fight against climate change

- The prominent Facebook AI researcher at the center of its misinformation scandals has quietly left the company

- These weird, unsettling photos show that AI is getting smarter

- First AI Pathology Program Approved: Helps Detect Prostate Cancer

- UK announces a national strategy to ‘level up’ AI

Our Short Summary: A U.S. federal judge ruled that under current U.S. law, only people can be listed as inventors on patents, not AI algorithms. Supporters of this ruling cite the concern of AI-powered patent trolls, which may use AI to “generate” countless patents in the hope of benefiting from potential patent infringement lawsuits. Critics of this ruling say that AI-authored patents can incentivize the development of such AIs. Regardless, the U.S. can still allow AI-authored patents, but doing so will require new laws from Congress. As the highlights above show, there was a healthy mix of positive news related to AI as well as new information regarding scandals surrounding misinformation and military contracts.

October

Newsletter links: Week 1 | Week 2 | Week 3 | Week 4

Highlights:

- DeepMind’s AI predicts almost exactly when and where it’s going to rain

- Are AI ethics teams doomed to be a facade? The women who pioneered them weigh in

- Chinese AI gets ethical guidelines for the first time, aligning with Beijing’s goal of reining in Big Tech

- Clearview AI Has New Tools to Identify You in Photos

- Singapore sends Xavier the robot to help police keep streets safe under three-week trial

- Google Researchers Explore the Limits of Large-Scale Model Pretraining

- Deepfaked Voice Enabled $35 Million Bank Heist in 2020

- Opening up a physics simulator for robotics

- Schools warned over facial recognition systems

- Google’s AI researchers say their output is being slowed by lawyers after a string of high-level exits: ‘Getting published really is a nightmare right now.’

Our Short Summary: We saw several articles relevant to one of the biggest stories in AI from the past year – the firing of Dr. Timnit Gebru from Google. The highlights above suggest that lawyers are now involved in reviewing research at Google, which is quite unusual, and include a discussion of AI ethics research in industry more broadly. We saw yet another story about Clearview AI, a constant throughout the year. On the research side, many roboticists were excited about DeepMind acquiring and open sourcing the MuJoCo physics simulator. DeepMind also continued its trend of applying machine learning to practical problems as it had done with AlphaFold 2, this time with new research on impressively accurate rain prediction.

November

Newsletter links: Week 1 | Week 2 | Week 3 | Week 4 | Week 5

Highlights:

- Adobe’s Project Morpheus is Actually a Deepfake Tool?

- Clearview AI finally takes part in a federal accuracy test.

- Facebook, Citing Societal Concerns, Plans to Shut Down Facial Recognition System

- Tesla vehicle in ‘Full Self-Driving’ beta mode ‘severely damaged’ after crash in California

- French AI strategy: Tech sector to receive over €2 bln in next 5 years

- Alphabet is putting its prototype robots to work cleaning up around Google’s offices

- A Utah company says it’s revolutionized truth-telling technology. Experts are highly skeptical.

- A Whopping 301 Newly Confirmed Exoplanets – Discovered With New Deep Neural Network ExoMiner

- Creepy Humanoid Robo-Artist Gives Public Performance Of Its Own AI-Generated Poetry

- China backs UN pledge to ban (its own) social scoring

Our Short Summary: On Oct. 28 Adobe announced a new AI-powered video editing tool called Project Morpheus. It can be used to edit people’s expressions and other facial attributes, leading some to worry that it is a deepfake tool, even though its capabilities are quite limited. In a surprise announcement, Facebook stated that it will no longer use facial recognition on its service, and will even delete billions of records that were used as part of it. This was hailed as a welcome step by privacy advocates. Alphabet announced that its Everyday Robots Project team has been deploying its robots to carry out custodial tasks on Google’s Bay Area campuses, in a cool demonstration of the progress the team has made in getting robots out of the lab and into the real world.

December

Newsletter links: Week 1 | Week 2 | Week 3 | Week 4

Highlights:

- DeepMind AI collaborates with humans on two mathematical breakthroughs

- Ex-Googler Timnit Gebru Starts Her Own AI Research Center

- Clearview AI told to stop processing UK data as ICO warns of possible fine

- AI is making better therapists

- US blacklists SenseTime in blow to its IPO plans

- The Beatles: Get Back Used High-Tech Machine Learning To Restore The Audio

- U.N. talks adjourn without deal to regulate ‘killer robots’

- How TikTok Reads Your Mind

- Announcing the Transactions on Machine Learning Research

Our Short Summary: Most dramatically, exactly a year after announcing that she had been fired, Dr. Timnit Gebru announced her new Distributed AI Research institute. This organization is meant to be independent of big tech funding, and therefore more effective at doing impactful AI ethics research. DeepMind once again impressed with another cross-disciplinary research work, this time focused on mathematics. Yet more developments were covered with regard to Clearview, this time about it being penalized outside of the US. On the whole, the year ended without much excitement.

Conclusion

And so another year of AI news comes to an end. We obviously could not cover all the developments in this digest, so if you’d like to keep up with AI news on an ongoing basis, subscribe to our ‘Last Week in AI’ newsletter!