Last Week in AI #40

Biased credit assignment, the future of work, and more!

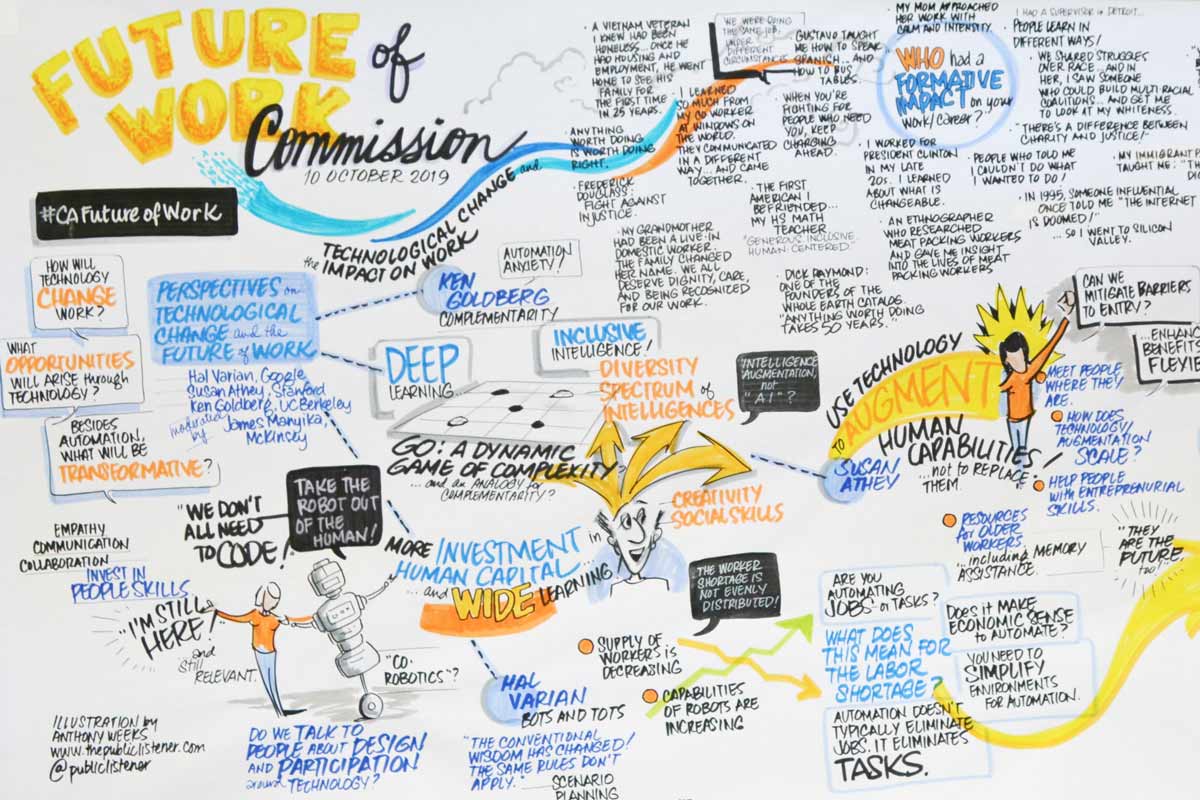

Image credit: Anthony Weeks / Stanford HAI

Mini Briefs

Apple’s ‘sexist’ credit card investigated by US regulator

A tech entrepreneur found that the Apple Card gave him 20 times the credit limit that his wife got. He wasn’t the only one: Steve Wozniak, co-founder of Apple, said he had the same issue.

The @AppleCard is such a fucking sexist program. My wife and I filed joint tax returns, live in a community-property state, and have been married for a long time. Yet Apple’s black box algorithm thinks I deserve 20x the credit limit she does. No appeals work.

— DHH (@dhh) November 7, 2019

While Hansson’s wife’s credit limit was increased after he raised the issue with Apple, the company offered no explanation beyond “It’s the algorithm”. The case highlights how biased data and a lack of diversity can lead to biased algorithmic decisions. Goldman Sachs, the bank that issues the card, provided the following statement when Bloomberg asked for comment:

“Our credit decisions are based on a customer’s creditworthiness and not on factors like gender, race, age, sexual orientation or any other basis prohibited by law.”

Research shows that even when protected factors are not used as inputs, algorithms can find proxies for them and produce discriminatory effects. It seems highly likely that bias has crept into their system.

when the algorithms involved were developed, they were trained on a data set in which women indeed posed a greater financial risk than the men. This could cause the software to spit out lower credit limits for women in general, even if the assumption it is based on is not true for the population at large.
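To make the proxy effect concrete, here is a minimal sketch on synthetic data. Everything in it is hypothetical: the applicants, the `proxy` feature (standing in for any signal that happens to correlate with gender), and the toy "model" are illustrative assumptions and say nothing about how the Apple Card system actually works. The point is only that a model which never reads gender can still reproduce a gender gap present in its training data.

```python
import random
from statistics import mean

def make_applicants(n=2000, seed=0):
    """Synthetic applicants; all fields and numbers are made up for illustration."""
    rng = random.Random(seed)
    rows = []
    for _ in range(n):
        gender = rng.choice(["F", "M"])
        # Hypothetical proxy feature: matches gender about 90% of the time.
        is_f = gender == "F"
        proxy = is_f if rng.random() < 0.9 else not is_f
        # Same income distribution for everyone, but biased historical limits.
        income = rng.uniform(40, 120)
        past_limit = income * (0.5 if is_f else 1.0)
        rows.append({"gender": gender, "proxy": proxy,
                     "income": income, "past_limit": past_limit})
    return rows

def fit_gender_blind(rows):
    """Toy model: average limit-to-income ratio per proxy value. Gender is never read."""
    return {
        value: mean(r["past_limit"] / r["income"] for r in rows if r["proxy"] == value)
        for value in (True, False)
    }

def predict(model, row):
    # The prediction depends only on the proxy feature and income.
    return model[row["proxy"]] * row["income"]

rows = make_applicants()
model = fit_gender_blind(rows)
avg_limit = {
    g: mean(predict(model, r) for r in rows if r["gender"] == g)
    for g in ("F", "M")
}
print(avg_limit)  # women receive a lower average predicted limit than men
```

Dropping the protected attribute is therefore not enough on its own; checking outcomes across groups, as the last few lines do, is one simple way to notice the problem.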

The only good thing about this situation is that it has been uncovered. Given the companies involved (Apple, Goldman Sachs), this incident will hopefully make regulators take a more active role in tackling the issue of algorithmic bias.

Advances & Business

- These Black Women Are Fighting For Justice In A World Of Biased Algorithms - By rooting out bias in technology, these Black women engineers, professors and government experts are on the front lines of the civil rights movement of our time.

- The computing power needed to train AI is now rising seven times faster than ever before - In 2018, OpenAI found that the amount of computational power used to train the largest AI models had doubled every 3.4 months since 2012.

- How Starbucks Is Using Artificial Intelligence - Using Deep Brew, the coffee chain is aiming for an improved experience for both customers and employees.

- How artificial intelligence can transform psychiatry - Peter Foltz, a research professor at the University of Colorado Boulder Institute of Cognitive Science, has developed an app that rates mental health based on speech cues.

- Intel Throws Down AI Gauntlet With Neural Network Chips - At this year’s Intel AI Summit, the chipmaker demonstrated its first-generation Neural Network Processors (NNP): NNP-T for training and NNP-I for inference.

- Deep neural networks speed up weather and climate models - Environmental scientists and computational scientists from Argonne are collaborating to use deep neural networks, a type of machine learning, to replace the parameterizations of certain physical schemes in the Weather Research and Forecasting model, significantly reducing simulation time.

- Cruise updates the AI brains behind its autonomous cars twice weekly - GM’s Cruise announced this past June that it would postpone plans for a driverless taxi service, which it previously said would debut in 2019. But the setback hasn’t discouraged its over 1,000 staffers from iterating toward a public launch.

- Reshaping Business With Artificial Intelligence - Expectations for artificial intelligence are sky-high, but what are businesses actually doing now? This report presents a realistic baseline that allows companies to compare their AI ambitions and efforts.

- How artificial intelligence is redefining the role of manager - AI won’t be replacing a manager’s job; it will be supplementing it. The future of work is one where robots and humans will be working side by side, helping each other get work done faster and more efficiently than ever before.
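As a rough check on the compute item above, the 3.4-month doubling period can be turned into a yearly growth factor. The sketch below compares it with a two-year doubling period; that baseline is an assumption on our part (a Moore's-law-style pace) and is not stated in the blurb, but it is one plausible reading of where the headline's "seven times faster" comes from.

```python
def annual_growth(doubling_months: float) -> float:
    """Growth factor over 12 months for a quantity that doubles every `doubling_months`."""
    return 2 ** (12 / doubling_months)

recent = annual_growth(3.4)    # doubling period quoted in the item above
baseline = annual_growth(24)   # assumed two-year doubling, not from the newsletter
speedup = 24 / 3.4             # how many times shorter the recent doubling period is

print(f"3.4-month doubling: about {recent:.1f}x per year")
print(f"24-month doubling:  about {baseline:.2f}x per year")
print(f"doubling period is about {speedup:.1f} times shorter")
```

Under these assumptions, compute for the largest training runs grows by roughly an order of magnitude each year.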

Concerns & Hype

- Yes, hyena robots are scary. But they’re also a cunning marketing ploy - There’s something unsettling about a private firm making powerful autonomous machines – but what’s scarier is who’s building them, and why.

- AI Can Tell If You’re Going to Die Soon. We Just Don’t Know How It Knows. - Researchers found that a black-box algorithm predicted patient death better than humans. They used ECG results to sort historical patient data into groups based on who would die within a year. Although the algorithm performed better, scientists don’t understand how or why it did.

- AI-generated fake content could unleash a virtual arms race - When it comes to AI’s role in making online content, Kristin Tynski, VP of digital marketing firm Fractl, sees an opportunity to boost creativity. But a recent experiment in AI-generated content left her a bit shaken.

- A.I. Systems Echo Biases They’re Fed, Putting Scientists on Guard - Researchers say computer systems are learning from lots and lots of digitized books and news articles that could bake old attitudes into new technology.

- Hikvision Markets Uyghur Ethnicity Analytics, Now Covers Up - Hikvision has marketed an AI camera that automatically identifies Uyghurs, on its China website, only covering it up days ago after IPVM questioned them on it. This AI technology allows the PRC to automatically track Uyghur people, considered one of the world’s most persecuted minorities.

- We scanned thousands of faces in DC today to show why facial recognition surveillance should be banned - Activists working with digital rights group Fight for the Future conducted live facial recognition surveillance in the halls of Congress and the area surrounding Capitol Hill, to show why this technology is so dangerous that it should be banned.

- Can AI Built to ‘Benefit Humanity’ Also Serve the Military? - Microsoft’s recent victory in landing a $10 billion Pentagon cloud-computing contract called JEDI could make life more complicated for one of the software giant’s partners: the independent artificial-intelligence research lab OpenAI.

- Can the planet really afford the exorbitant power demands of machine learning? - The environmental impact of such technological advances can be huge.

- Facebook’s latest giant language AI hits computing wall at 500 Nvidia GPUs - Facebook’s giant “XLM-R” neural network is engineered to work word problems across 100 different languages, including Swahili and Urdu, but it runs up against computing constraints even using 500 of Nvidia’s world-class GPUs.

Analysis & Policy

- Interim Report by the National Security Commission on AI - This report represents the Commission’s initial assessment on artificial intelligence (AI) as it relates to national security, provides preliminary judgments regarding areas where the United States can do better, and suggests some interim actions the government could take now.

- Twitter wants your feedback on its proposed deepfakes policy - A lie has always been able to travel faster than the truth, and that goes double on Twitter, where a combination of bad human choices and bad-faith bots amplifies false messaging almost instantly around the world. So what should a social media platform do about it?

- United States should make a massive investment in AI, top Senate Democrat says - A top Democrat in the U.S. Senate wants the government to create a new agency that would invest an additional $100 billion over 5 years on basic research in artificial intelligence (AI).

Expert Opinions & Discussion within the field

- Augmentative AI and the future of work - For AI to be embraced in the workplace, it must be developed and deployed in partnership with workers. While consumers are readily embracing automation technologies built into device apps, smart speakers and connected-home systems, the mood in the workplace is decidedly more mixed.

- We’ve got to regulate the application of AI — not the tech itself - The World Economic Forum recently confirmed its intention to develop global rules for AI and create an AI Council that will aim to find common ground on policy between nations on the potential of AI and other emerging technologies.

- Artificial Intelligence Is Too Important to Leave to Google and Facebook Alone - Let’s develop a public research consortium to take on useful projects that have no commercial prospects.

- Don’t Believe the Hype - Expectations that computers are on the verge of matching or surpassing humans’ abilities may be rampant, but they aren’t new.

Explainers

- Self-Supervised Representation Learning - Self-supervised learning opens up a huge opportunity for better utilizing unlabelled data, while learning in a supervised learning manner. This post covers many interesting ideas of self-supervised learning tasks on images, videos, and control problems.

- Teaching a neural network to use a calculator - This article explores a seq2seq architecture for solving simple probability problems in Saxton et al.’s Mathematics Dataset. A transformer is used to map questions to intermediate steps, while an external symbolic calculator evaluates intermediate expressions.

- Andrey Markov & Claude Shannon Counted Letters to Build the First Language-Generation Models - As part of IEEE Spectrum’s series on the history of natural language processing, they take a look at how the first language-generation models were built.

- Federated Learning: Challenges, Methods, and Future Directions - What is federated learning? How does it differ from traditional large-scale machine learning, distributed optimization, and privacy-preserving data analysis? What do we understand currently about federated learning, and what problems are left to explore? In this post, we briefly answer these questions, and describe ongoing work in federated learning at CMU.

That’s all for this week! If you are not subscribed and liked this, feel free to subscribe below!