Last Week in AI #28

The world's largest AI chip, OpenAI's GPT-2 update and more!

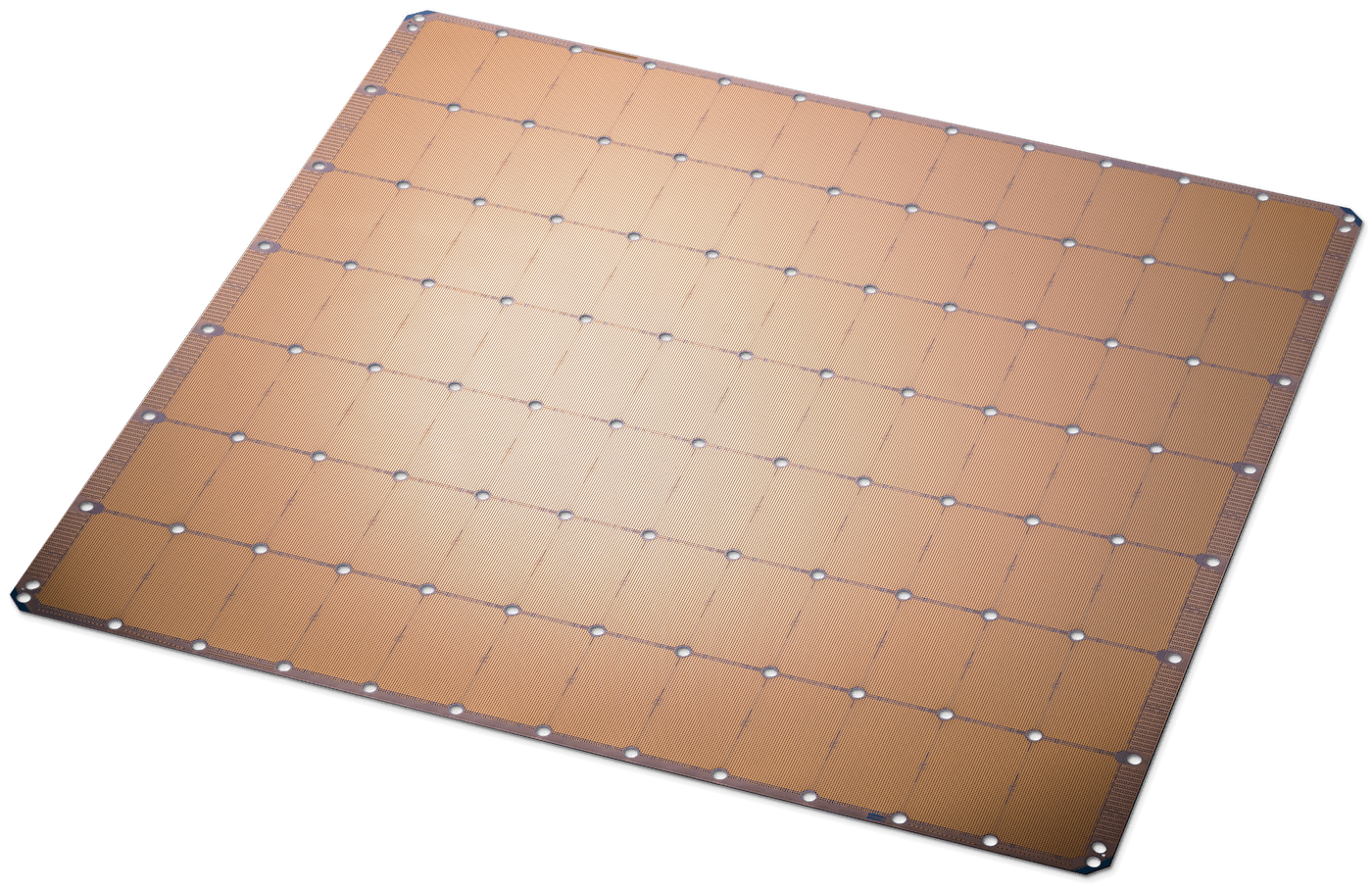

Image credit: Cerebras

Mini Briefs

To Power AI, This Startup Built a Really, Really Big Chip

Cerebras, the startup behind the 9-inch by 9-inch chip (likely the largest chip ever made), believes that future AI applications, especially deep learning, will only increase the demand for compute, and that large chips able to run parallel operations hundreds of times faster than the fastest GPU are the answer.

Application-specific AI chips are a hot area, attracting the attention of every large tech company as well as a swarm of startups in recent years. It remains to be seen how practical such a large chip is, given the challenges of manufacturing, hardware and software integration, and cost.

Still, [Jim McGregor, founder of Tirias Research] expects the largest tech companies, who see their destinies riding on competing in AI—firms like Facebook, Amazon, and Baidu—to take a serious look at Cerebras’ big, weird chip. “For them it could make a lot of sense,” he says.

GPT-2: 6-Month Follow-Up

It has now been six months since OpenAI began its staged release of GPT-2, a powerful deep learning language model capable of generating long passages of coherent, believable text. Under the staged release, OpenAI first made public a very small version of GPT-2 (124M parameters), then gradually released larger, more capable versions (the latest has 774M parameters). The full GPT-2 model has 1.5B parameters.

OpenAI reports that by collaborating with external partners during this release, it has learned three key lessons:

- Coordination among partners [to maintain a similar staged release process] is difficult, but possible

- Humans can be convinced by synthetic text

- Detection isn’t simple

The release process drew criticism: some argued that calling a model too dangerous to release is a marketing gimmick, and that withholding a model anyone with enough compute could replicate only hinders security research. Still, OpenAI's GPT-2 experiment, and the public's reaction to it, will likely offer valuable lessons for other companies planning to release their own AI advances.

Advances & Business

- Google Assistant’s abilities dominate Siri and Alexa, research shows - Alexa and Siri aren’t nearly as capable as Google Assistant, according to new research. Research firm Loup Ventures recently published its semi-annual report on smartphone-based digital assistants, and the results once again show that Google is significantly ahead of Amazon and Apple.

- NVIDIA AI Platform Takes Conversational User Experience To A New Level - After breaking all the records related to training computer vision models, NVIDIA now claims that its AI platform can train a natural language neural network model on one of the largest datasets in record time.

- Deepfakes Can Help You Dance - Bruno Mars begins dancing to his hit pop song halfway through the YouTube clip, titled Everybody Dance Now, but the video’s real star is Tinghui Zhou. A gangly coder in skinny jeans, Zhou appears on the right side of the frame and, at first, flails his arms without rhythm.

- Climate Collapse: is AI the Antidote? - Nature is declining globally at rates unprecedented in human history, and the rate of species extinction is accelerating, with grave impacts on people around the world now likely, warns a landmark new UN report. The climate crisis is biological, ecological and political.

- Will the world’s biggest AI chip find buyers? - Computer brains are tiny rectangles that are shrinking with each new generation. Or so it used to be. These days Andrew Feldman, the boss of Cerebras, a startup, pulls a block of Plexiglas out of his backpack. Baked into it is a microprocessor the size of a sheet of letter paper.

- Here’s China’s First Traffic Robot Police, And It’s Now On Duty - China is one of the major growth markets for AI and robotics. Around 141,000 industrial robots were sold there in 2017, up 58.1% year-on-year, and this week China unveiled its first batch of traffic robot police.

Concerns & Hype

- Facial recognition is now rampant. The implications for our freedom are chilling - Last week, all of us who live in the UK, and all who visit us, discovered that our faces were being scanned secretly by private companies and have been for some time.

- Meet the Researchers Working to Make Sure Artificial Intelligence Is a Force for Good - For Meredith Whittaker and Kate Crawford, who co-founded AI Now together in 2017, it’s AI’s disruption of industries that’s under scrutiny. They are two of many experts who are working to ensure that, as corporations, entrepreneurs and governments roll out new AI applications, they do so in a way that’s ethically sound.

Analysis & Policy

- This Blockbuster AI Exhibit Highlights The First 500 Years Of Artificial Intelligence - Research on artificial intelligence in 16th Century Prague was initially deemed a phenomenal success. By combining letters of the Hebrew alphabet in a magical arrangement, Rabbi Judah Loew ben Bezalel programmed a Golem, an animated clay figure, to protect the Jewish people from assault.

- Will China lead the world in AI by 2030? - China not only has the world’s largest population and looks set to become the largest economy, it also wants to lead the world when it comes to artificial intelligence (AI).

Explainers

- The State of Transfer Learning in NLP - This post expands on the NAACL 2019 tutorial on Transfer Learning in NLP. The tutorial was organized by Matthew Peters, Swabha Swayamdipta, Thomas Wolf, and me. In this post, I highlight key insights and takeaways and provide updates based on recent work.

- The Amazing Ways YouTube Uses Artificial Intelligence And Machine Learning - There are more than 1.9 billion users logged in to YouTube every single month who watch over a billion hours of video every day. Every minute, creators upload 300 hours of video to the platform. With this number of users, activity, and content, it makes sense for YouTube to take advantage of the power of artificial intelligence (AI) to help operations. Here are a few ways YouTube, owned by Google, uses artificial intelligence today.

That’s all for this week! If you liked this and aren’t subscribed yet, feel free to subscribe below!