Last Week in AI #66

Low trust in self-driving cars, dark past of crime prediction, and more!

Image credit: Catherine Stinson / aeon

Mini Briefs

Americans don’t know why they don’t trust self-driving cars

The race towards self-driving cars has been one of the hottest topics in technology over the past few years. Large companies and startups, from Google’s Waymo and Lyft’s Level 5 to Argo AI and Aurora, have all been throwing brainpower and compute at the problem of building cars that can operate safely in traffic without a driver. The task is genuinely difficult and the road has been bumpy, with setbacks such as the fatal accident in Tempe, Arizona, in which a self-driving Uber car struck and killed a woman. In light of stories like Uber’s, the obvious technical difficulties, and the moral issues, it would be unsurprising if the general public were less than enthusiastic about self-driving cars. But a study conducted in February and March 2020 on behalf of Partners for Automated Vehicle Education (PAVE) found that the mistrust comes from somewhere else. While 48 percent of respondents said they would never get into a self-driving taxi and 20 percent think the technology will never be safe, most respondents were not familiar with the Tempe collision or with accidents involving Tesla’s Autopilot feature. According to the study’s results, most of the mistrust instead stems from respondents not having had a chance to experience the technology first-hand.

Algorithms associating appearance and criminality have a dark past

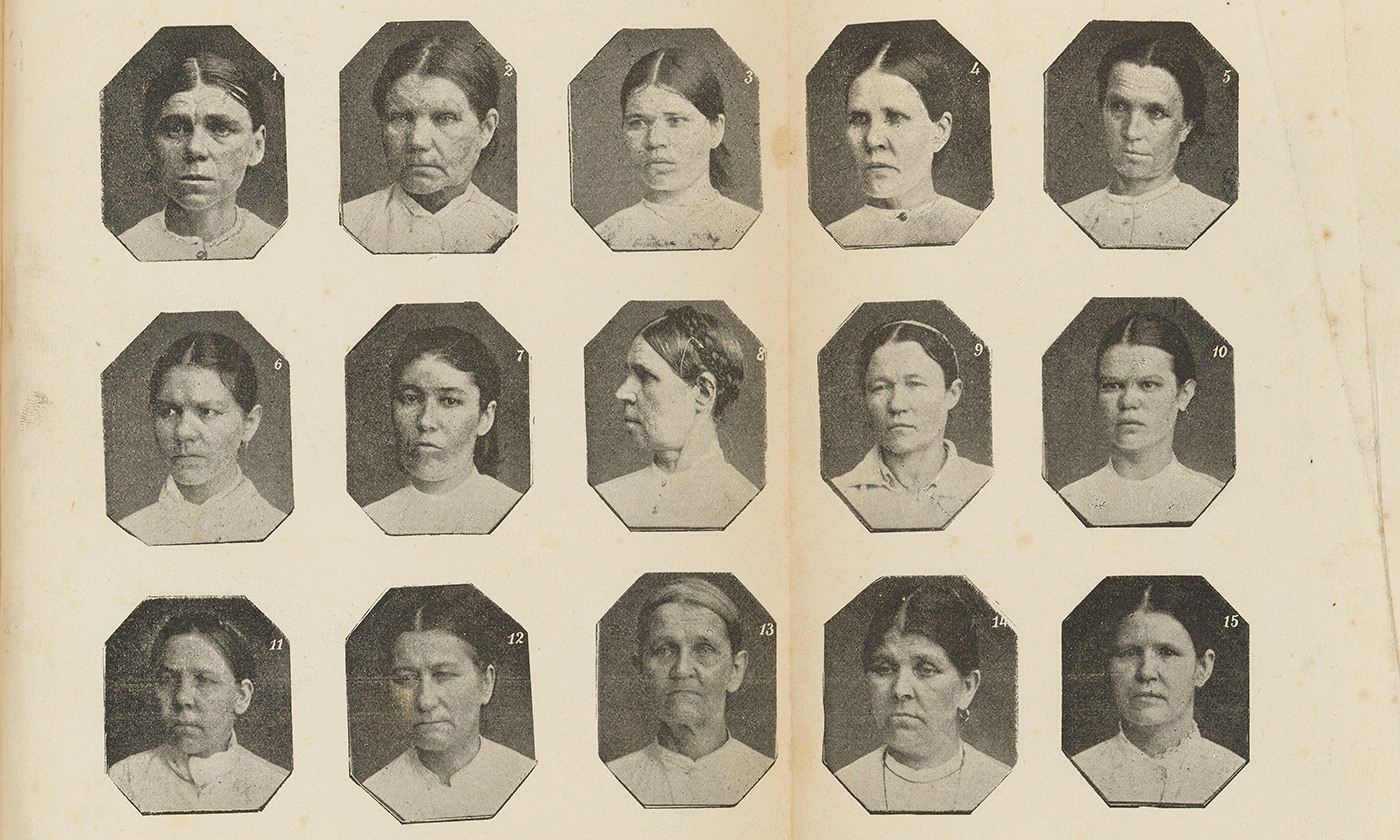

AI bias is a well-known issue, and the racial biases that underlie policing will inevitably be reflected in AI systems that are trained on policing data and used to identify criminals. Recently, a large number of startups have promoted products that use AI to identify criminals, detect personality traits of job candidates, and perform similar tasks. But if people who have a certain skin color or look a certain way are apprehended more often than others, they will show up more often in criminal datasets. The bias toward labeling people as criminals based on their looks then becomes self-reinforcing, because the algorithms pick up on what humans have decided are correlations between appearance and criminality.

A notorious piece of research that drew plenty of backlash claimed to predict criminality from the shape of people’s faces; the researchers said they did not understand the rebuttals, since their work was meant for “pure academic discussion.” Although such facial-recognition research could be described as phrenology, merely slapping it with that label does not communicate the gravity of the issue to the researchers involved. Hopefully, spelling out the social issues more clearly will do more to force researchers to consider the disparate effects of their work.

Podcast

Subscribe to our weekly podcast covering these stories! Website | RSS | iTunes | Spotify | YouTube

News

Advances & Business

- SecondHands consortium announces progress in robot for assisting maintenance workers - This month, the EU Horizon 2020 SecondHands consortium announced the “completion” of its ARMAR-6 humanoid platform after five years of development. The robot is intended to help workers in human-designed spaces such as factories and warehouses.

- No matter how sophisticated, artificial intelligence systems still need human oversight - AI and machine learning systems are only as good as the data we feed them. Witness the fallout from the COVID-19 crisis, which threw many AI algorithms out of whack: ‘If machines are to be trusted, we need to watch over them.’

- How a Chinese AI Giant Made Chatting–and Surveillance–Easy - In China, voice-computing company iFlytek built Alexa- and Siri-like smart assistants that are beloved by users. But its tech is also helping the government listen in.

- DeepMind claims its AI predicts macular degeneration more accurately than experts - In 2018, Google Health and Alphabet’s DeepMind released a peer-reviewed paper detailing an AI system that could recommend treatment for more than 50 eye diseases with 94% accuracy. In a recent follow-up study published in Nature Medicine, DeepMind claims its system can spot macular degeneration with high accuracy and predict the disease’s progression within a six-month period.

- The Nonhuman Touch: Pandemic Hastens Push for AI, Robotics - Plus One Robotics’ AI-powered packaging robot, which works alongside human employees to process packages for shipping and reduces how often people must handle them, is an example of the kind of product that has drawn attention since the start of the coronavirus pandemic.

- This Lab “Cooks” With AI to Make New Materials - A Toronto lab recycles carbon dioxide into more useful chemicals, using materials it discovered with artificial intelligence and supercomputers.

- Nvidia’s AI recreates Pac-Man from scratch just by watching it being played - Nvidia is best known for its graphics cards, but the company conducts some serious research into artificial intelligence, too. For its latest project, Nvidia researchers taught an AI system to recreate the game of Pac-Man simply by watching it being played.

Concerns & Hype

- Facial Recognition Company Clear Is Going From Airports to Your Office - Clear, a biometrics company whose face-scanning kiosks you may have seen at airports, wants to bring its technology to a wider world struggling with the coronavirus pandemic, installing similar systems at the doors of reopened businesses and offices under a new initiative called Health Pass.

- Are AI-Powered Killer Robots Inevitable? - Military scholars warn of a “battlefield singularity,” a point at which humans can no longer keep up with the pace of conflict.

Expert Opinions & Discussion within the field

- A fight for the soul of machine learning - Last Tuesday, Google shared a blog post highlighting the perspectives of three employees who are women of color on fairness and machine learning. But an NBC News report revealed that Google had scrapped diversity initiatives over conservative backlash, a troubling move.

That’s all for this week! If you liked this and are not yet subscribed, feel free to subscribe below!