The Nooscope — a visual manifesto of the limits of AI

Introducing the Nooscope, a diagram that shows how Machine Learning works and how it fails

Image credit: On the invention of metaphors as an instrument of knowledge magnification. Emanuele Tesauro, Il canocchiale aristotelico, frontispiece of the 1670 edition, Turin.

This is a shorter version of the text “The Nooscope Manifested: Artificial Intelligence as Instrument of Knowledge Extractivism” by Matteo Pasquinelli and Vladan Joler available at nooscope.ai.

Abstract

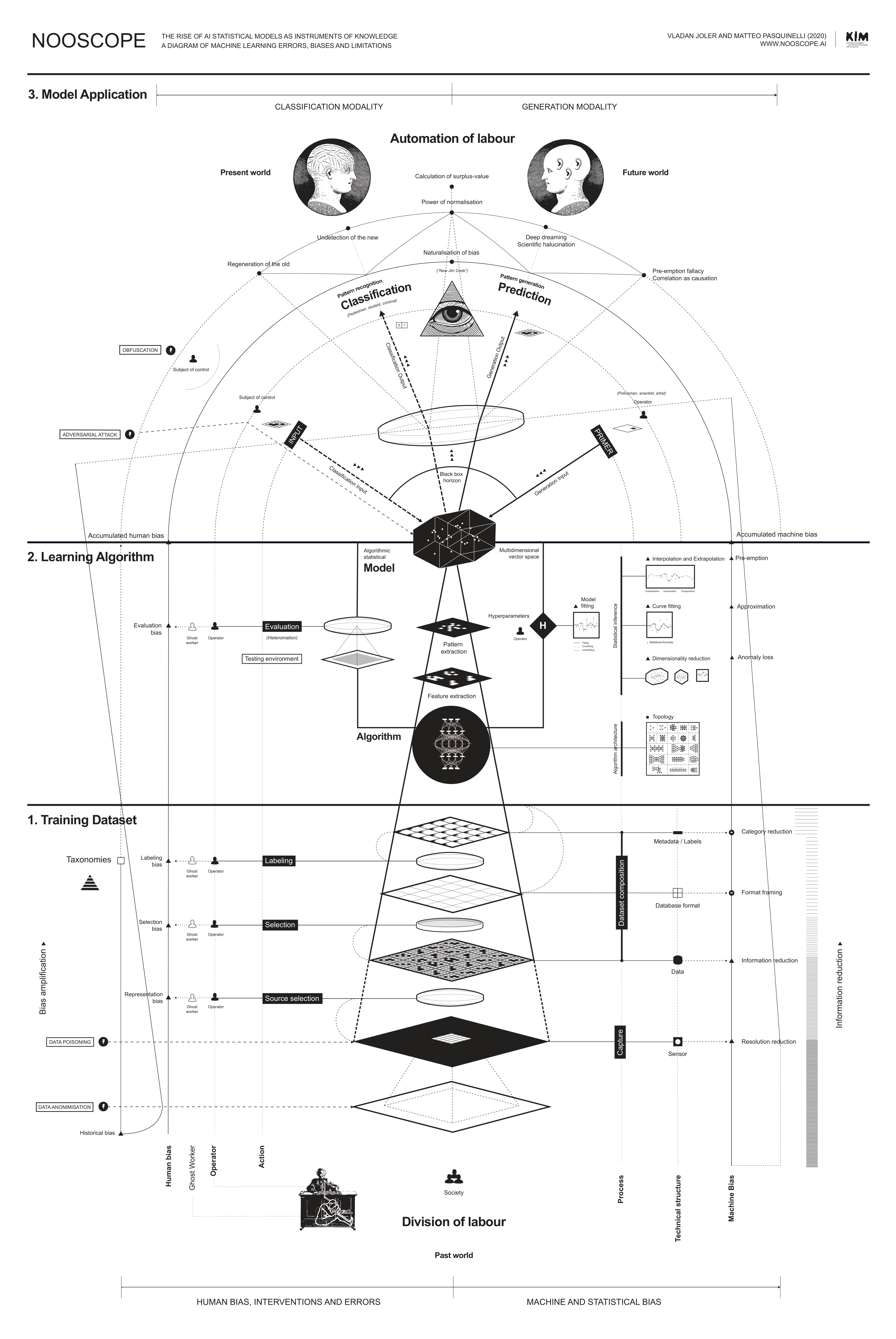

The Nooscope is a visual manifesto of the limits of AI, intended as a provocation to both computer science and the humanities. It questions the technical definition of intelligence and the autonomy from society that are implicit in the expression ‘artificial intelligence.’ It is also an attempt to expose the role of ‘ghost work’ in the logical construction of machine learning.

Some enlightenment regarding the project to mechanise reason

The Nooscope is a cartography of the limits of artificial intelligence, intended as a provocation to both computer science and the humanities. Any map is a partial perspective, a way to provoke debate. Similarly, this map is a manifesto of AI dissidents. Its main purpose is to challenge the mystifications of artificial intelligence: first, as a technical definition of intelligence and, second, as a political form that would be autonomous from society and the human.1 In the expression ‘artificial intelligence’ the adjective ‘artificial’ carries the myth of the technology’s autonomy: it hints at caricatural ‘alien minds’ that self-reproduce in silico but actually mystifies two processes of proper alienation: the growing geopolitical autonomy of hi-tech companies and the invisibilization of workers’ autonomy worldwide. The modern project to mechanise human reason has clearly mutated, in the 21st century, into a corporate regime of knowledge extractivism and epistemic colonialism.2 This is unsurprising, since machine learning algorithms are the most powerful algorithms for information compression.

The purpose of the Nooscope map is to secularize AI from the ideological status of ‘intelligent machine’ to one of knowledge instrument. Rather than evoking legends of alien cognition, it is more reasonable to consider machine learning as an instrument of knowledge magnification that helps to perceive features, patterns, and correlations through vast spaces of data beyond human reach. In the history of science and technology, this is nothing new: it has already been pursued by optical instruments throughout the histories of astronomy and medicine.3 In the tradition of science, machine learning is just a Nooscope, an instrument to see and navigate the space of knowledge (from the Greek skopein ‘to examine, look’ and noos ‘knowledge’).

Borrowing the idea from Gottfried Wilhelm Leibniz, the Nooscope diagram applies the analogy of optical media to the structure of all machine learning apparatuses. Discussing the power of his calculus ratiocinator and ‘characteristic numbers’ (the idea of designing a numerical universal language to codify and solve all the problems of human reasoning), Leibniz made an analogy with instruments of visual magnification such as the microscope and telescope. He wrote: ‘Once the characteristic numbers are established for most concepts, mankind will then possess a new instrument which will enhance the capabilities of the mind to a far greater extent than optical instruments strengthen the eyes, and will supersede the microscope and telescope to the same extent that reason is superior to eyesight.’4 Although the purpose of this text is not to reiterate the opposition between quantitative and qualitative cultures, Leibniz’s credo need not be followed. Controversies cannot be conclusively computed. Machine learning is not the ultimate form of intelligence.

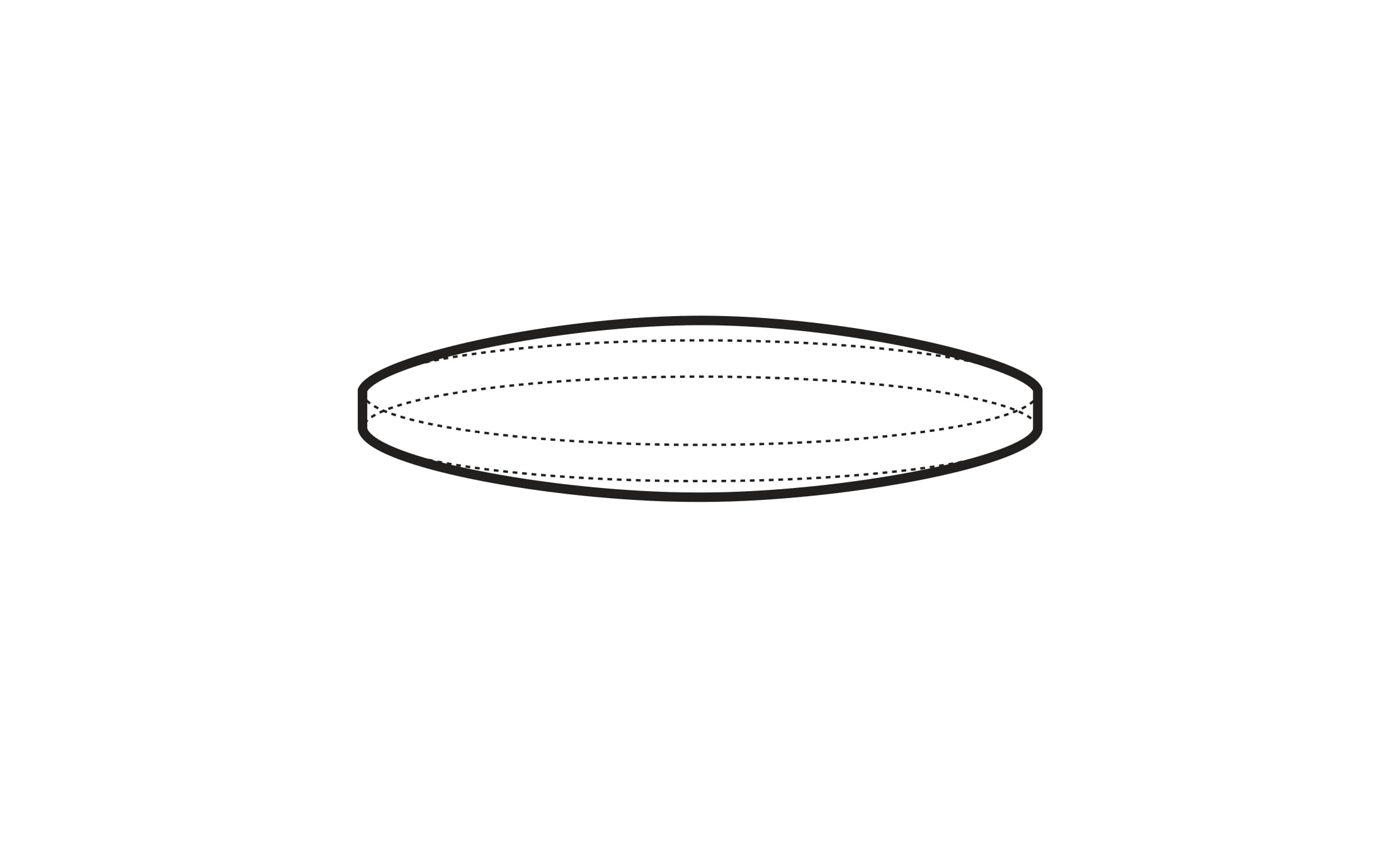

Instruments of measurement and perception always come with inbuilt aberrations. In the same way that the lenses of microscopes and telescopes are never perfectly curvilinear and smooth, the logical lenses of machine learning embody faults and biases. To understand machine learning and register its impact on society is to study the degree to which social data are diffracted and distorted by these lenses. This is generally known as the debate on bias in AI, but the political implications of the logical form of machine learning are deeper. Machine learning is not bringing a new dark age but one of diffracted rationality, in which, as will be shown, an episteme of causation is replaced by one of automated correlations. More generally, AI is a new regime of truth, scientific proof, social normativity and rationality, which often takes the shape of a statistical hallucination. This diagram manifesto is another way to say that AI, the king of computation (patriarchal fantasy of mechanised knowledge, ‘master algorithm’ and alpha machine), is naked. Here, we are peeping into its black box.

The assembly line of machine learning: Data, Algorithm, Model

The history of AI is a history of experiments, machine failures, academic controversies and epic rivalries around military funding, popularly known as ‘winters of AI.’5 Although corporate AI today describes its power with the language of ‘black magic’ and ‘superhuman cognition’, current techniques are still at the experimental stage.6 AI is now at the same stage as the steam engine when it was invented, before the laws of thermodynamics necessary to explain and control its inner workings had been discovered. Similarly, today, there are efficient neural networks for image recognition, but there is no theory of learning to explain why they work so well and how they fail so badly. Like any invention, the paradigm of machine learning consolidated slowly, in this case over the last half-century. A master algorithm has not appeared overnight. Rather, there has been a gradual construction of a method of computation that still has to find a common language. Manuals of machine learning for students, for instance, do not yet share a common terminology. How to sketch, then, a critical grammar of machine learning that may be concise and accessible, without playing into the paranoid game of defining General Intelligence?

As an instrument of knowledge, machine learning is composed of an object to be observed (training dataset), an instrument of observation (learning algorithm) and a final representation (statistical model). The assemblage of these three elements is proposed here as a spurious and baroque diagram of machine learning, extravagantly termed Nooscope.7 Staying with the analogy of optical media, the information flow of machine learning is like a light beam that is projected by the training data, compressed by the algorithm and diffracted towards the world by the lens of the statistical model.

The Nooscope diagram aims to illustrate two sides of machine learning at the same time: how it works and how it fails — enumerating its main components, as well as the broad spectrum of errors, limitations, approximations, biases, faults, fallacies and vulnerabilities that are native to its paradigm.8 This double operation stresses that AI is not a monolithic paradigm of rationality but a spurious architecture made of adapting techniques and tricks. Besides, the limits of AI are not simply technical but are imbricated with human bias. In the Nooscope diagram the essential components of machine learning are represented at the centre, human biases and interventions on the left, and technical biases and limitations on the right. Optical lenses symbolize biases and approximations representing the compression and distortion of the information flow. The total bias of machine learning is represented by the central lens of the statistical model through which the perception of the world is diffracted.

While the social consequences of AI are popularly understood under the issue of bias, the common understanding of technical limitations is known as the black box problem. The black box effect is an actual issue of deep neural networks (which filter information so much that their chain of reasoning cannot be reversed) but has become a generic pretext for the opinion that AI systems are not just inscrutable and opaque, but even ‘alien’ and out of control.9 The black box effect is part of the nature of any experimental machine at the early stage of development (it has already been noticed that the functioning of the steam engine remained a mystery for some time, even after having been successfully tested). The actual problem is the black box rhetoric, which is closely tied to conspiracy theory sentiments in which AI is an occult power that cannot be studied, known, or politically controlled.

The history of AI as the automation of perception

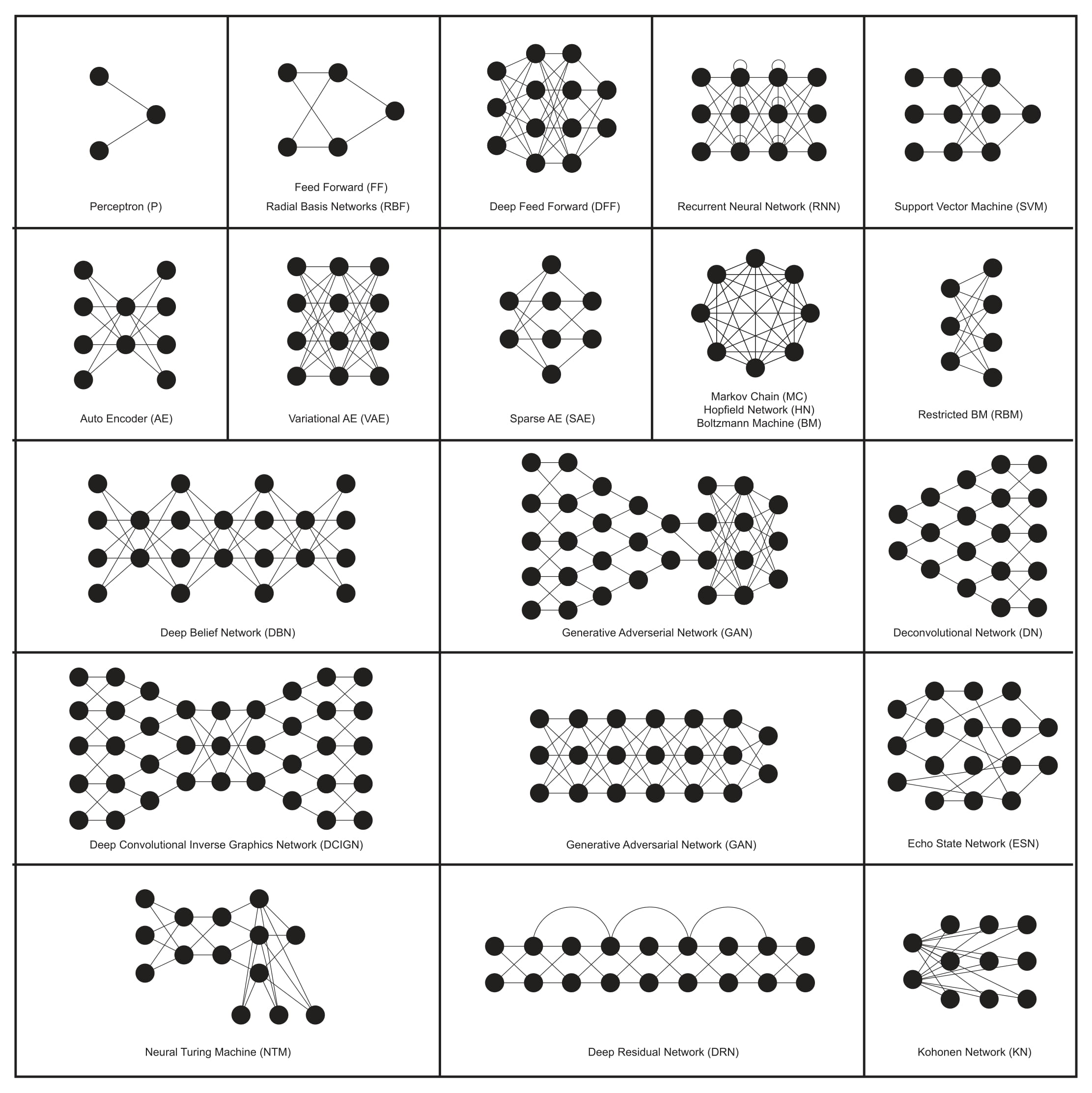

The need to demystify AI (at least from the technical point of view) is understood in the corporate world too. Head of Facebook AI and godfather of convolutional neural networks Yann LeCun reiterates that current AI systems are not sophisticated versions of cognition, but rather, of perception. Similarly, the Nooscope diagram exposes the skeleton of the AI black box and shows that AI is not a thinking automaton but an algorithm that performs pattern recognition. The notion of pattern recognition contains issues that must be elaborated upon. What is a pattern, by the way? Is a pattern uniquely a visual entity? What does it mean to read social behaviours as patterns? Is pattern recognition an exhaustive definition of intelligence? Most likely not. To clarify these issues, it would be good to undertake a brief archaeology of AI.

The archetype machine for pattern recognition is Frank Rosenblatt’s Perceptron. Invented in 1957 at Cornell Aeronautical Laboratory in Buffalo, New York, its name is a shorthand for ‘Perceiving and Recognizing Automaton.’10 Given a visual matrix of 20x20 photoreceptors, the Perceptron can learn how to recognise simple letters. A visual pattern is recorded as an impression on a network of artificial neurons that are firing up in concert with the repetition of similar images and activating one single output neuron. The output neuron fires 1=true, if a given image is recognised, or 0=false, if a given image is not recognised.
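The learning behaviour described above can be sketched in a few lines of Python. This is a minimal illustration of the textbook perceptron learning rule, not a reconstruction of Rosenblatt's original machine: the two letter shapes (`letter_T`, `letter_L`), the noise level, the number of training images and the number of epochs are all assumptions chosen for the example. Only the 20x20 grid and the single output that fires 1 or 0 come from the text.

```python
import random

SIZE = 20  # 20x20 grid of binary "photoreceptors", as in the text


def letter_T():
    """Hypothetical letter shape: top row plus centre column."""
    grid = [[0] * SIZE for _ in range(SIZE)]
    for c in range(SIZE):
        grid[0][c] = 1
    for r in range(SIZE):
        grid[r][SIZE // 2] = 1
    return [p for row in grid for p in row]


def letter_L():
    """Hypothetical letter shape: left column plus bottom row."""
    grid = [[0] * SIZE for _ in range(SIZE)]
    for r in range(SIZE):
        grid[r][0] = 1
    for c in range(SIZE):
        grid[SIZE - 1][c] = 1
    return [p for row in grid for p in row]


def add_noise(pixels, flips, rng):
    """Return a copy of the image with `flips` randomly chosen pixels inverted."""
    noisy = list(pixels)
    for i in rng.sample(range(len(noisy)), flips):
        noisy[i] = 1 - noisy[i]
    return noisy


def predict(weights, bias, pixels):
    """The single output neuron: 1 (true) if the weighted sum is positive, else 0."""
    total = bias + sum(w * p for w, p in zip(weights, pixels))
    return 1 if total > 0 else 0


def train(samples, epochs=20):
    """Perceptron rule: adjust the weights only when the output is wrong."""
    weights = [0.0] * (SIZE * SIZE)
    bias = 0.0
    for _ in range(epochs):
        for pixels, label in samples:
            error = label - predict(weights, bias, pixels)  # -1, 0 or +1
            if error:
                weights = [w + error * p for w, p in zip(weights, pixels)]
                bias += error
    return weights, bias


rng = random.Random(0)  # fixed seed so the sketch is repeatable
T, L = letter_T(), letter_L()
samples = [(T, 1), (L, 0)]  # label 1 = "this is a T", label 0 = "this is not"
for _ in range(20):
    samples.append((add_noise(T, 5, rng), 1))
    samples.append((add_noise(L, 5, rng), 0))

weights, bias = train(samples)
print(predict(weights, bias, T), predict(weights, bias, L))
```

Nothing in the trained `weights` resembles a stored picture of the letter. What is kept is a set of 400 numbers that, summed over an input, tip the output one way or the other, which is the sense in which repeated similar images leave an "impression" on the network.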

Rosenblatt’s Perceptron was the first algorithm that paved the way to machine learning in the contemporary sense. At a time when ‘computer science’ had not yet been adopted as a definition, the field was called ‘computational geometry’ and specifically ‘connectionism’ by Rosenblatt himself. The business of these neural networks, however, was to calculate a statistical inference. What a neural network computes is not an exact pattern but the statistical distribution of a pattern. Just scraping the surface of the anthropomorphic marketing of AI, one finds another technical and cultural object that needs examination: the statistical model. What is the statistical model in machine learning? How is it calculated? What is the relationship between a statistical model and human cognition? These are crucial issues to clarify. In terms of the work of demystification that needs to be done (also to evaporate some naïve questions), it would be good to reformulate the trite question ‘Can a machine think?’ into the theoretically sounder questions ‘Can a statistical model think?’, ‘Can a statistical model develop consciousness?’, et cetera.

The learning algorithm: compressing the world into a statistical model

The algorithms of AI are often evoked as alchemic formulas, capable of distilling ‘alien’ forms of intelligence. But what do the algorithms of machine learning really do? Few people, including the followers of AGI (Artificial General Intelligence), bother to ask this question. ‘Algorithm’ is the name of a process whereby a machine performs a calculation. The product of such machine processes is a statistical model (more accurately termed an ‘algorithmic statistical model’). In the developer community, the term ‘algorithm’ is increasingly replaced with ‘model.’ This terminological confusion arises from the fact that the statistical model does not exist separately from the algorithm: somehow, the statistical model exists inside the algorithm in the form of distributed memory across its parameters. For the same reason, it is essentially impossible to visualise an algorithmic statistical model, as is done with simple mathematical functions. Still, the challenge is worthwhile.

Statistical models have always influenced culture and politics. They did not just emerge with machine learning: machine learning is just a new way to automate the technique of statistical modelling. When Greta Thunberg warns ‘Listen to science,’ what she really means, being a good student of mathematics, is ‘Listen to the statistical models of climate science.’ No statistical models, no climate science: no climate science, no climate activism. Climate science is indeed a good example to start with, in order to understand statistical models. Global warming has been calculated by first collecting a vast dataset of temperatures from Earth’s surface each day of the year, and second, by applying a mathematical model that plots the curve of temperature variations in the past and projects the same pattern into the future.11 Climate models are historical artefacts that are tested and debated within the scientific community, and today, also beyond.12 Machine learning models, on the contrary, are opaque and inaccessible to community debate. Given the degree of myth-making and social bias around its mathematical constructs, AI has indeed inaugurated the age of statistical science fiction. The Nooscope is the projector of this large statistical cinema.
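The two-step procedure described above (collect past values, fit a curve, project it forward) can be illustrated with the simplest possible statistical model, a straight line fitted by ordinary least squares. The sketch below is only a toy: the numbers are synthetic values invented for the example and are not temperature measurements, the helper `fit_line` is a hypothetical name, and real climate models such as CESM are coupled physical simulations rather than trend lines.

```python
def fit_line(xs, ys):
    """Ordinary least squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    slope = cov / var
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Synthetic values invented for illustration; NOT real temperature data.
# A steady upward drift plus an alternating wobble standing in for noise.
years = list(range(10))
anomalies = [0.02 * t + (0.05 if t % 2 else -0.05) for t in years]

# Step 1: summarise the past as a model (two numbers).
slope, intercept = fit_line(years, anomalies)

# Step 2: project the same pattern into the future (year 20 was never observed).
projection = slope * 20 + intercept
print(slope, intercept, projection)
```

The model here is just the pair `slope` and `intercept`. Everything the projection says about year 20 rests on the assumption that the past pattern continues, which is exactly the kind of assumption that can be inspected and contested when the model is open to debate.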

All models are wrong, but some are useful

‘All models are wrong, but some are useful’ — the canonical dictum of the British statistician George Box has long encapsulated the logical limitations of statistics and machine learning.13 This maxim, however, is often used to legitimise the bias of corporate and state AI. Computer scientists argue that human cognition reflects the capacity to abstract and approximate patterns. So what’s the problem with machines being approximate, and doing the same? Within this argument, it is rhetorically repeated that ‘the map is not the territory’. This sounds reasonable. But what should be contested is that AI is a heavily compressed and distorted map of the territory and that this map, like many forms of automation, is not open to community negotiation. AI is a map of the territory without community access and community consent.14

It is important to recall that the ‘intelligence’ of machine learning is not driven by exact formulas of mathematical analysis, but by algorithms of brute force approximation. The shape of the correlation function between input x and output y is calculated algorithmically, step by step, through tiresome mechanical processes of gradual adjustment (like gradient descent, for instance) that are equivalent to the differential calculus of Leibniz and Newton. Neural networks are said to be among the most efficient algorithms because these differential methods can approximate the shape of any function given enough layers of neurons and abundant computing resources.15 Brute-force gradual approximation of a function is the core feature of today’s AI, and only from this perspective can one understand its potentialities and limitations — particularly its escalating carbon footprint (the training of deep neural networks requires exorbitant amounts of energy because of gradient descent and similar training algorithms that operate on the basis of continuous infinitesimal adjustments).16
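The gradual adjustment described above can be made concrete with a minimal gradient descent sketch. It assumes the simplest case, a straight line y = a·x + b fitted to points generated from y = 2x + 1. The function names, learning rate, step count and data are all choices made for this example, and a real neural network adjusts millions of parameters rather than two.

```python
def mean_squared_error(a, b, xs, ys):
    """Average squared gap between the model's guesses and the targets."""
    return sum((a * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def gradient_descent(xs, ys, learning_rate=0.1, steps=5000):
    """Fit y = a * x + b by repeatedly nudging a and b against the error gradient."""
    a, b = 0.0, 0.0  # start knowing nothing
    n = len(xs)
    history = []  # error before each step, to show the gradual improvement
    for _ in range(steps):
        history.append(mean_squared_error(a, b, xs, ys))
        # Partial derivatives of the mean squared error with respect to a and b.
        grad_a = sum(2 * (a * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2 * (a * x + b - y) for x, y in zip(xs, ys)) / n
        # One small adjustment in the direction that reduces the error.
        a -= learning_rate * grad_a
        b -= learning_rate * grad_b
    return a, b, history


# Hypothetical training data: eleven points on the line y = 2x + 1.
xs = [i / 10 for i in range(11)]
ys = [2 * x + 1 for x in xs]

a, b, history = gradient_descent(xs, ys)
print(round(a, 3), round(b, 3))
print(history[0], history[-1])
```

Even for this two-parameter toy the loop runs thousands of times, and every pass touches every data point. Scaling the same procedure to deep networks and large datasets is what makes training so computationally expensive.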

Machine learning classification and prediction are becoming ubiquitous techniques that constitute new forms of surveillance and governance. Some apparatuses, such as self-driving vehicles and industrial robots, can be an integration of both modalities. A self-driving vehicle is trained to recognise different objects on the road (people, cars, obstacles, signs) and predict future actions based on decisions that a human driver has taken in similar circumstances. Even if recognising an obstacle on a road seems to be a neutral gesture (it’s not), identifying a human being according to categories of gender, race and class (and in the recent COVID-19 pandemic as sick or immune), as state institutions are increasingly doing, is the gesture of a new disciplinary regime. The hubris of automated classification has caused the revival of reactionary Lombrosian techniques that were thought to have been consigned to history, techniques such as Automatic Gender Recognition (AGR), ‘a subfield of facial recognition that aims to algorithmically identify the gender of individuals from photographs or videos.’17

Labour in the age of AI

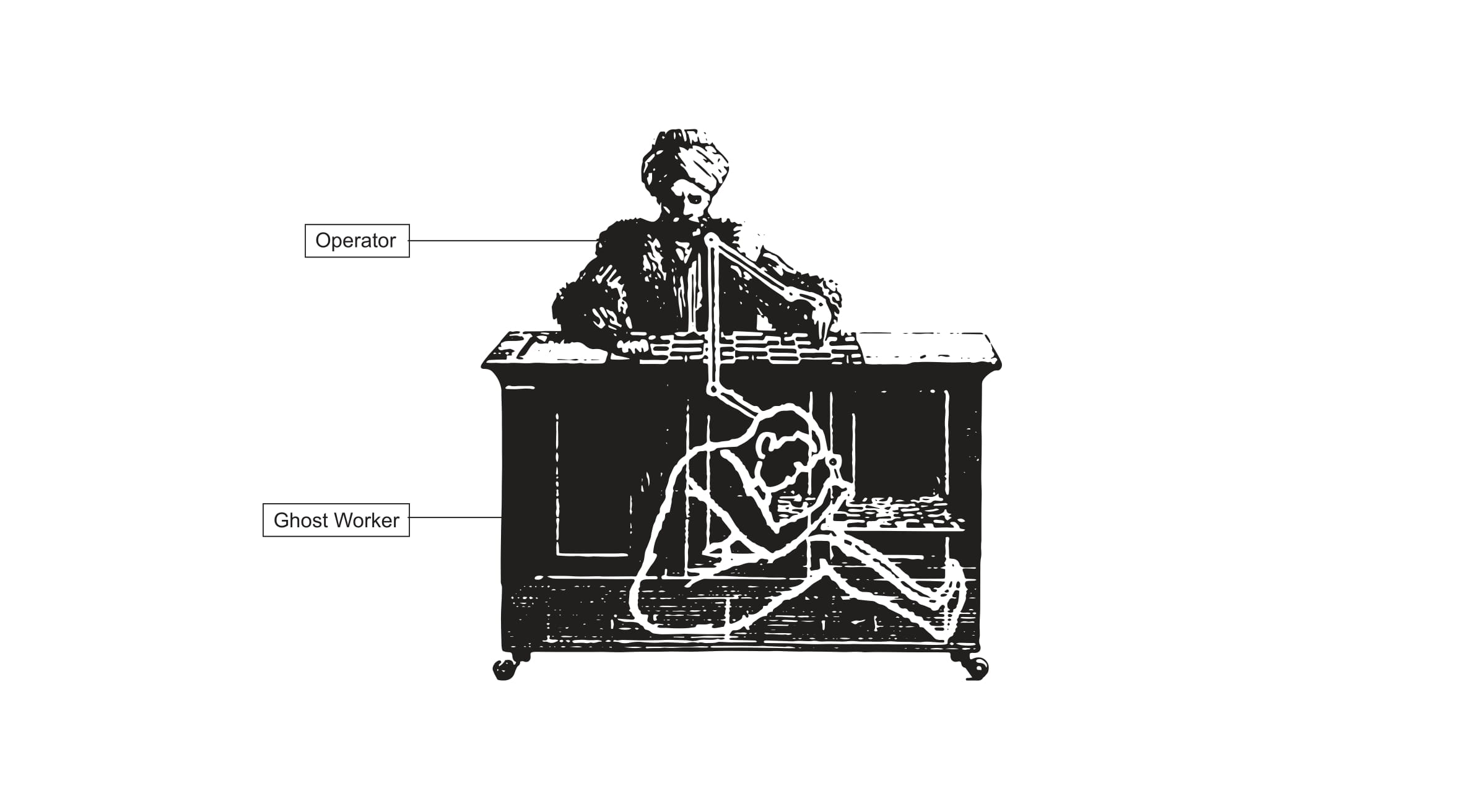

The natures of the ‘input’ and ‘output’ of machine learning have to be clarified. AI troubles are not only about information bias but also labour. AI is not just a control apparatus, but also a productive one. As mentioned above, an invisible workforce is involved in each step of its assembly line (dataset composition, algorithm supervision, model evaluation, etc.). Pipelines of endless tasks innervate from the Global North into the Global South; crowdsourced platforms of workers from Venezuela, Brazil and Italy, for instance, are crucial in order to teach German self-driving cars ‘how to see.’18 Against the idea of alien intelligence at work, it must be stressed that in the whole computing process of AI the human worker has never left the loop, or put more accurately, has never left the assembly line. Mary Gray and Siddharth Suri coined the term ‘ghost work’ for the invisible labour that makes AI appear artificially autonomous.19 Automation is a myth; because machines, including AI, constantly call for human help, some authors have suggested replacing ‘automation’ with the more accurate term heteromation.20 Heteromation means that the familiar narrative of AI as perpetuum mobile is possible only thanks to a reserve army of workers.

Yet there is a more profound way in which labour constitutes AI. The information source of machine learning (whatever its name: input data, training data or just data) is always a representation of human skills, activities and behaviours, social production at large. All training datasets are, implicitly, a diagram of the division of human labour that AI has to analyse and automate. Datasets for image recognition, for instance, record the visual labour that drivers, guards, and supervisors usually perform during their tasks. Even scientific datasets rely on scientific labour, experiment planning, laboratory organisation, and analytical observation. The information flow of AI has to be understood as an apparatus designed to extract ‘analytical intelligence’ from the most diverse forms of labour and to transfer such intelligence into a machine (obviously including, within the definition of labour, extended forms of social, cultural and scientific production).21 In short, the origin of machine intelligence is the division of labour and its main purpose is the automation of labour.

Historians of computation have already stressed the early steps of machine intelligence in the 19th-century project of mechanizing the division of mental labour, specifically the task of hand calculation.22 The enterprise of computation has since then been a combination of surveillance and disciplining of labour, of optimal calculation of surplus-value, and planning of collective behaviours.23 Computation was established by and still enforces a regime of visibility and intelligibility, not just of logical reasoning. The genealogy of AI as an apparatus of power is confirmed today by its widespread employment in technologies of identification and prediction, yet the core anomaly which always remains to be computed is the disorganisation of labour.

As a technology of automation, AI will have a tremendous impact on the job market. If Deep Learning has a 1% error rate in image recognition, for example, it means that roughly 99% of routine work based on visual tasks (e.g. airport security) can be potentially replaced (legal restrictions and trade union opposition permitting). The impact of AI on labour is well described (from the perspective of workers, finally) within a paper from the European Trade Union Institute, which highlights ‘seven essential dimensions that future regulation should address in order to protect workers: 1) safeguarding worker privacy and data protection; 2) addressing surveillance, tracking and monitoring; 3) making the purpose of AI algorithms transparent; 4) ensuring the exercise of the ‘right to explanation’ regarding decisions made by algorithms or machine learning models; 5) preserving the security and safety of workers in human-machine interactions; 6) boosting workers’ autonomy in human–machine interactions; 7) enabling workers to become AI literate.’24

Ultimately, the Nooscope manifests for a novel Machinery Question in the age of AI. The Machinery Question was a debate sparked in England during the industrial revolution, when the response to the employment of machines and workers’ subsequent technological unemployment was a social campaign for more education about machines, which took the form of the Mechanics’ Institute Movement.25 Today an Intelligent Machinery Question is needed to develop more collective intelligence about ‘machine intelligence,’ more public education instead of ‘learning machines’ and their regime of knowledge extractivism (which reinforces old colonial routes, as a look at the network map of crowdsourcing platforms today shows). Also in the Global North, this colonial relationship between corporate AI and the production of knowledge as a common good has to be brought to the fore. The Nooscope’s purpose is to expose the hidden room of the corporate Mechanical Turk and to illuminate the invisible labour of knowledge that makes machine intelligence appear ideologically alive.

1. On the autonomy of technology see: Langdon Winner, Autonomous Technology: Technics-Out-of-Control as a Theme in Political Thought. Cambridge, MA: MIT Press, 2001.

2. For the extension of colonial power into the operations of logistics, algorithms and finance see: Sandro Mezzadra and Brett Neilson, The Politics of Operations: Excavating Contemporary Capitalism. Durham: Duke University Press, 2019. On the epistemic colonialism of AI see: Matteo Pasquinelli, ‘Three Thousand Years of Algorithmic Rituals.’ e-flux 101, 2019.

3. Digital humanities term a similar technique distant reading, which has gradually involved data analytics and machine learning in literary and art history. See: Franco Moretti, Distant Reading. London: Verso, 2013.

4. Gottfried W. Leibniz, ‘Preface to the General Science’, 1677. In: Phillip Wiener (ed.) Leibniz Selections. New York: Scribner, 1951, 23.

5. For a concise history of AI see: Dominique Cardon, Jean-Philippe Cointet and Antoine Mazières, ‘Neurons Spike Back: The Invention of Inductive Machines and the Artificial Intelligence Controversy.’ Réseaux 211, 2018.

6. Alexander Campolo and Kate Crawford, ‘Enchanted Determinism: Power without Control in Artificial Intelligence.’ Engaging Science, Technology, and Society 6, 2020.

7. The use of the visual analogy is also intended to record the fading distinction between image and logic, representation and inference, in the technical composition of AI. The statistical models of machine learning are operative representations (in the sense of Harun Farocki’s operative images).

8. For a systematic study of the logical limitations of machine learning see: Momin Malik, ‘A Hierarchy of Limitations in Machine Learning.’ ArXiv preprint, 2020. https://arxiv.org/abs/2002.05193

9. Projects such as Explainable Artificial Intelligence, Interpretable Deep Learning and Heatmapping among others have demonstrated that breaking into the ‘black box’ of machine learning is possible. Nevertheless, the full interpretability and explicability of machine learning statistical models remains a myth. See: Zachary Lipton, ‘The Mythos of Model Interpretability.’ ArXiv preprint, 2016. https://arxiv.org/abs/1606.03490

10. Frank Rosenblatt, ‘The Perceptron: A Perceiving and Recognizing Automaton.’ Cornell Aeronautical Laboratory Report 85-460-1, 1957.

11. Paul Edwards, A Vast Machine: Computer Models, Climate Data, and The Politics of Global Warming. Cambridge, MA: MIT Press, 2010.

12. See the Community Earth System Model (CESM) that has been developed by the National Center for Atmospheric Research in Boulder, Colorado, since 1996. ‘The Community Earth System Model is a fully coupled numerical simulation of the Earth system consisting of atmospheric, ocean, ice, land surface, carbon cycle, and other components. CESM includes a climate model providing state-of-the-art simulations of the Earth’s past, present, and future.’ http://www.cesm.ucar.edu

13. George Box, ‘Robustness in the Strategy of Scientific Model Building.’ Technical Report #1954, Mathematics Research Center, University of Wisconsin-Madison, 1979.

14. Post-colonial and post-structuralist schools of anthropology and ethnology have stressed that there is never territory per se, but always an act of territorialisation.

15. As proven by the Universal Approximation Theorem.

16. Ananya Ganesh, Andrew McCallum and Emma Strubell, ‘Energy and Policy Considerations for Deep Learning in NLP.’ ArXiv preprint, 2019. https://arxiv.org/abs/1906.02243

17. Os Keyes, ‘The Misgendering Machines: Trans/HCI Implications of Automatic Gender Recognition.’ Proceedings of the ACM on Human-Computer Interaction 2(88), November 2018. https://doi.org/10.1145/3274357

18. Florian Schmidt, ‘Crowdsourced Production of AI Training Data: How Human Workers Teach Self-Driving Cars to See.’ Düsseldorf: Hans-Böckler-Stiftung, 2019.

19. Mary Gray and Siddharth Suri, Ghost Work: How to Stop Silicon Valley from Building a New Global Underclass. Boston, MA: Eamon Dolan Books, 2019.

20. Hamid Ekbia and Bonnie Nardi, Heteromation, and Other Stories of Computing and Capitalism. Cambridge, MA: MIT Press, 2017.

21. For the idea of analytical intelligence see: Lorraine Daston, ‘Calculation and the Division of Labour 1750–1950.’ Bulletin of the German Historical Institute 62, 2018.

22. Simon Schaffer, ‘Babbage’s Intelligence: Calculating Engines and the Factory System.’ Critical Inquiry 21, 1994. Lorraine Daston, ‘Enlightenment Calculations.’ Critical Inquiry 21, 1994. Matthew L. Jones, Reckoning with Matter: Calculating Machines, Innovation, and Thinking about Thinking from Pascal to Babbage. Chicago: University of Chicago Press, 2016.

23. Matteo Pasquinelli, ‘On the Origins of Marx’s General Intellect.’ Radical Philosophy 2.06, 2019.

24. Aida Ponce, ‘Labour in the Age of AI: Why Regulation is Needed to Protect Workers.’ ETUI Research Paper - Foresight Brief 8, 2020. http://dx.doi.org/10.2139/ssrn.3541002

25. Maxine Berg, The Machinery Question and the Making of Political Economy. Cambridge, UK: Cambridge University Press, 1980. In fact, even the Economist has recently warned about ‘the return of the machinery question’ in the age of AI. See: Tom Standage, ‘The Return of the Machinery Question.’ The Economist, 23 June 2016.