The State of Deepfakes in 2020

On what Deepfakes are, how they are used, and how much they should concern you.

Image credit: Facebook

“The rise of synthetic media and deepfakes is forcing us towards an important and unsettling realization: our historical belief that video and audio are reliable records of reality is no longer tenable.” -The State of DeepFakes 2019 Report

Introduction

Media manipulation through images and videos has been around for decades. For example, during WWII, Mussolini released a propaganda image of himself on a horse with his horse handler edited out. The goal was to make himself seem more impressive and powerful [1]. These types of tricks can have significant impacts given the scale of the audience, especially in the internet era. DARPA has constructed an entire program to develop media forensics methods for detecting manipulated media [2].

Fake news may one day pale in comparison to the impact of deepfake news [3]. Deepfakes are a set of computer vision methods that can create doctored images or videos with uncanny realism. In recent years, they have been blowing up in both quality and popularity. The term deepfake comes from a “fake” image or video generated by a “deep” learning algorithm. You’ve likely seen a video of a movie scene with actors face-swapped with a scary degree of accuracy.

This technology has the potential to give malicious actors the means to sow unprecedented amounts of disinformation, because it makes realistic face-swapped videos far cheaper and more accessible than the traditional special effects techniques used in Hollywood. This is concerning, since fake news is already prevalent and people all too often believe news with little to no evidence [4]. Just recently, a deceptively edited video making it look like Joe Biden didn’t know what state he was in got 1 million views on Twitter [5]. Deepfakes could provide “evidence” to people who are looking to further their cognitive dissonance around disinformation. This is a real threat, with two bills, H.R. 3230 and S. 3805, having already been proposed in Congress to counter the spread of deepfakes for illegal purposes.

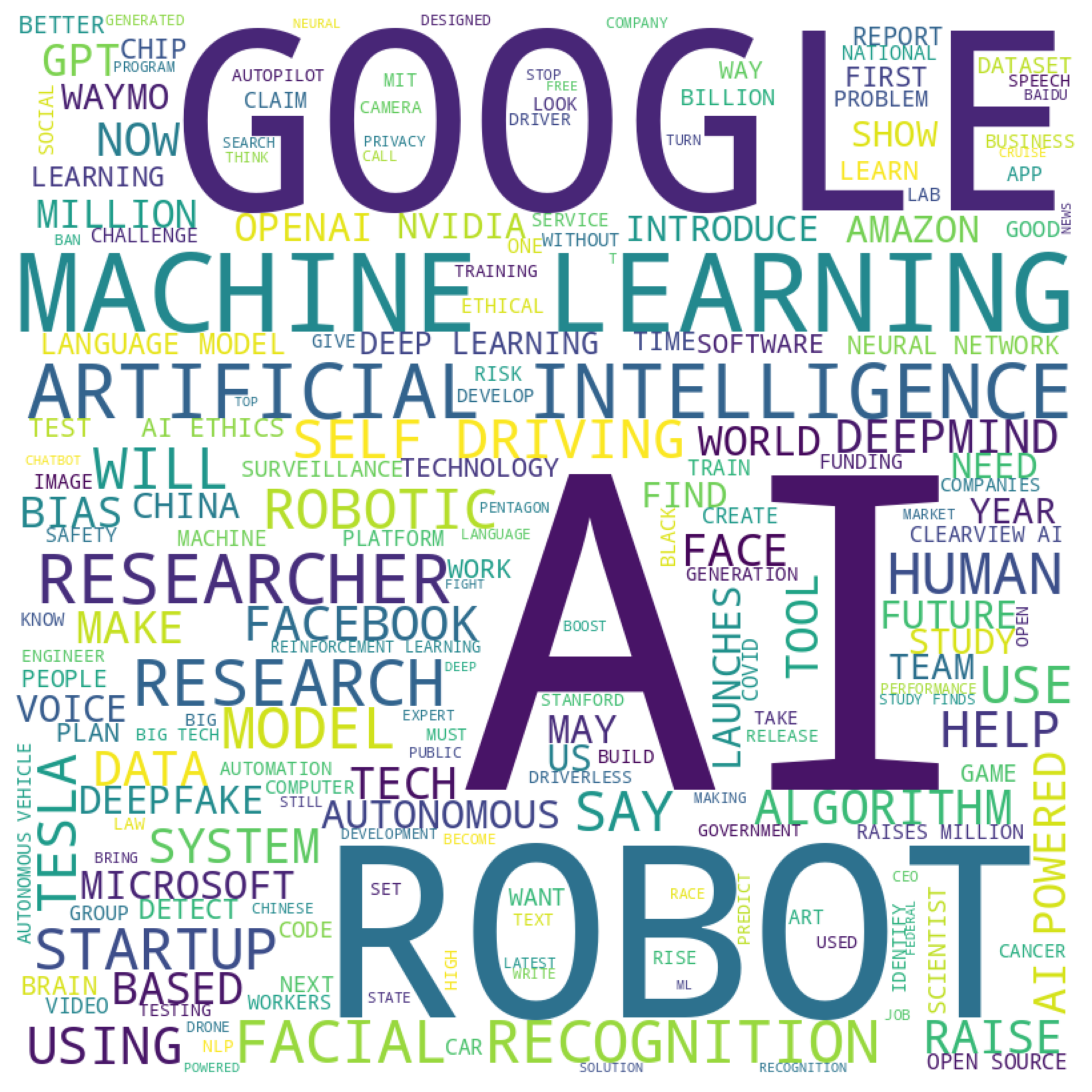

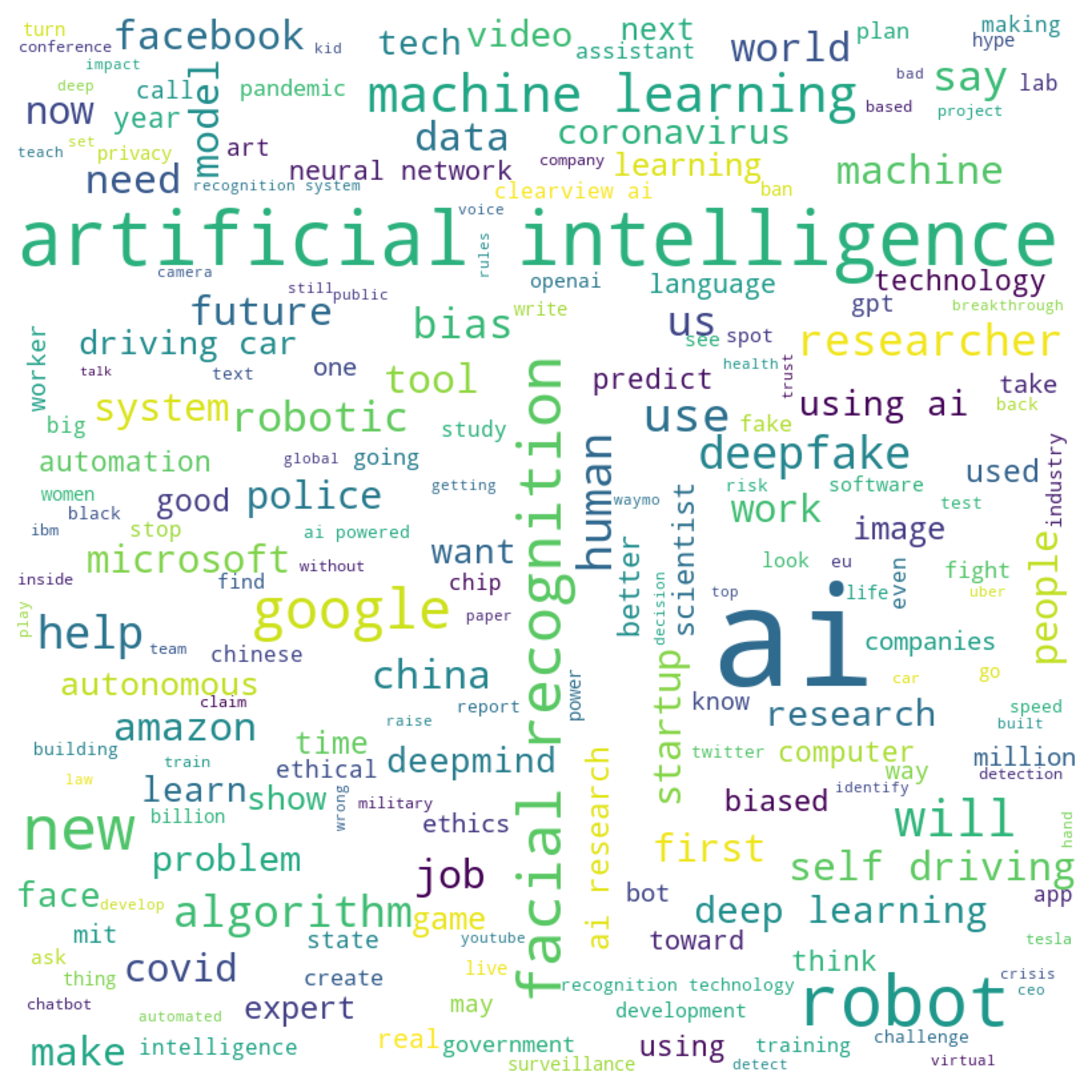

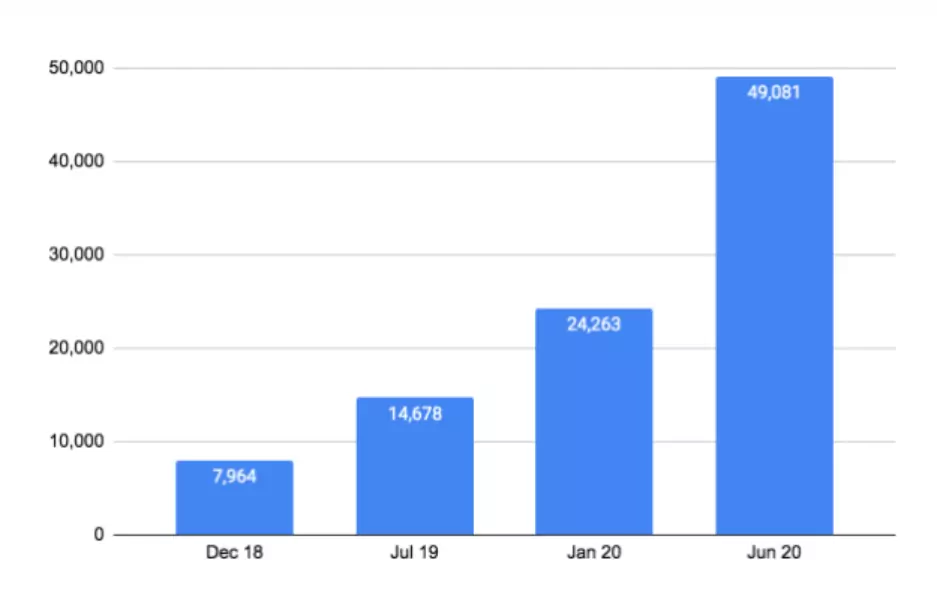

Since the term was coined in 2017, the number of detected deepfakes on the internet has been increasing exponentially. At the same time, the underlying AI methods to generate deepfakes have improved, as have user-friendly tools that allow one to easily create deepfakes without in-depth technical know-how. In this blog post, I’m going to go over some background behind deepfakes, what they have been used for, and how to counter them.

What are deepfakes?

A deepfake refers to a specific kind of synthetic media where a person in an image or video is swapped with another person’s likeness. -Meredith Somers

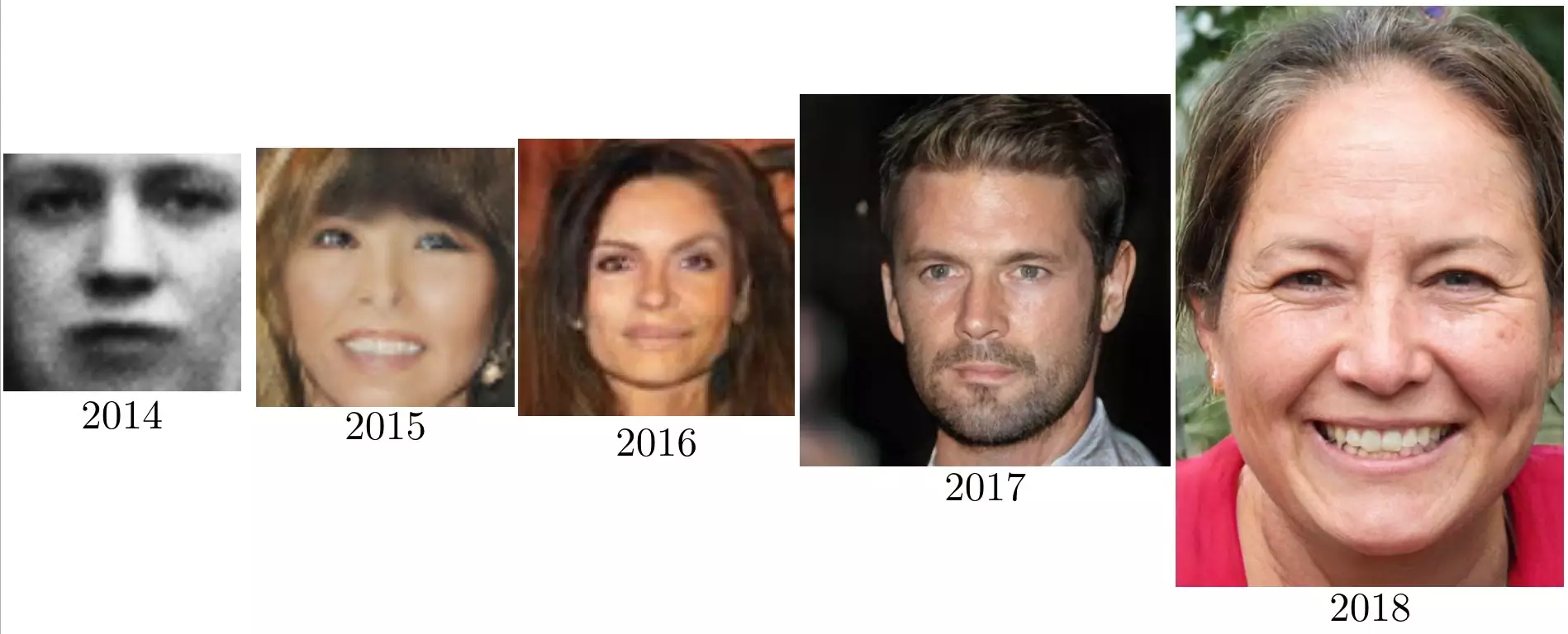

Synthetic image and video generation have been growing computer vision subfields, and they gained a lot of momentum with the introduction of generative adversarial networks (GANs) in 2014 [6]. The term “deepfake” was first coined in 2017 by a Reddit user who was using the technology to create fake pornography of celebrities using face swaps [7]. Since then, the term has evolved to cover a wider range of image and video augmentation and generation as well as some audio applications.

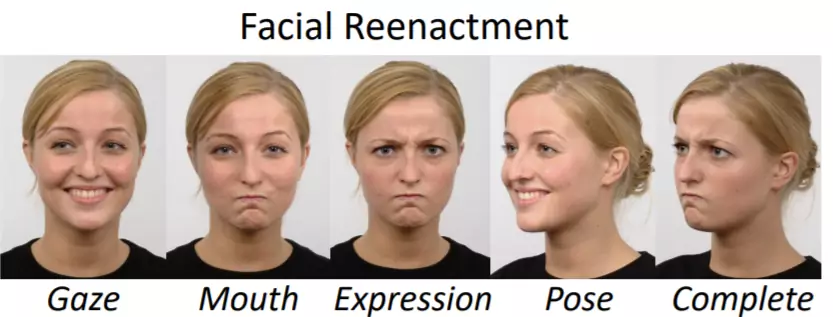

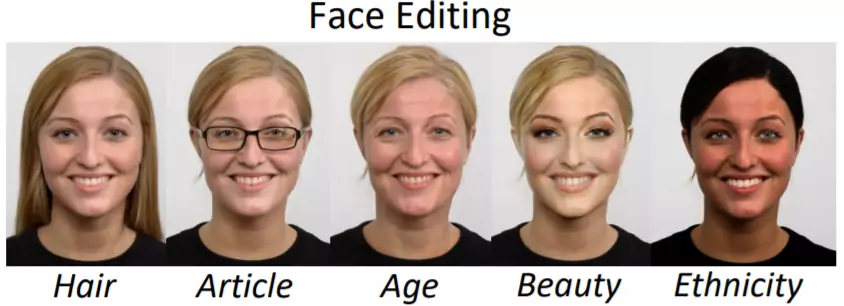

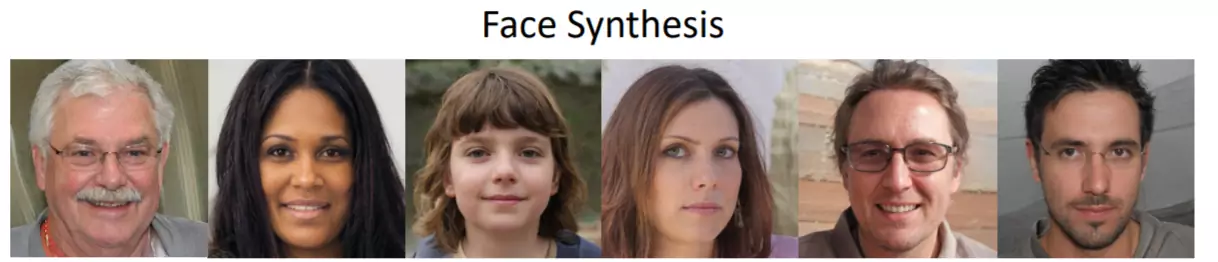

Deepfakes come in a variety of forms according to Mirsky and Lee [8]. Here are examples of different types of deepfakes on a woman’s face:

Reenactment

aka Using your facial or body movements to dictate the movements of another person

Current work in reenactment looks to minimize the amount of training data needed to generate a modified face. One-shot and few-shot learning have become popular approaches: they train on data from many different faces and then only need to be fine-tuned on a few samples of the target face. Some of the leading methods here are MarioNETte and FLNet.

Replacement

aka Your identity is mapped to another person (for example the face swap filter on Snapchat)

Many replacement works apply either encoder-decoder networks or variational autoencoders to learn to map the source face to the target while retaining the same expressions. A common challenge with replacement is the need to deal with occlusions, when an object passes in front of the target’s face. Recent replacement models include FaceShifter and FaceSwap-GAN.

Editing

aka Altering the attributes of a person in some way (like changing their age or glasses)

Synthesis

aka Generating completely new images or videos with no target person

Reenactment and replacement are the two most prominent types of deepfakes, since they have the highest potential for destructive applications. For example, the face of a politician could be reenacted to say something they never actually said, or a person’s face could be replaced into an incriminating video for blackmail purposes. These types of manipulated media (deepfake or not) can be incriminating and/or harmful to the target’s reputation, like the post of Biden mentioned earlier in which signs that said the name of the state were edited to make it look like he didn’t know where he was.

At the core of the concern about deepfakes is the fact that they make reenactment and replacement accessible and cheap; you don’t have to understand the details of the techniques involved to be able to create deepfakes. Tools like DeepFaceLab allow anyone to take an image or video and replace a face, de-age a face, or manipulate speech. For really seamless deepfakes, tools like this will generate a high-quality face, but you still need some experience in video editing software like Adobe After Effects to add it to a video. If you don’t have the desire or ability to make them yourself, you can even find services and marketplaces willing to create deepfakes for you. One example is https://deepfakesweb.com/ where you just have to upload the videos and images you want, and they will create a deepfake for you in the cloud.

How are deepfakes being used?

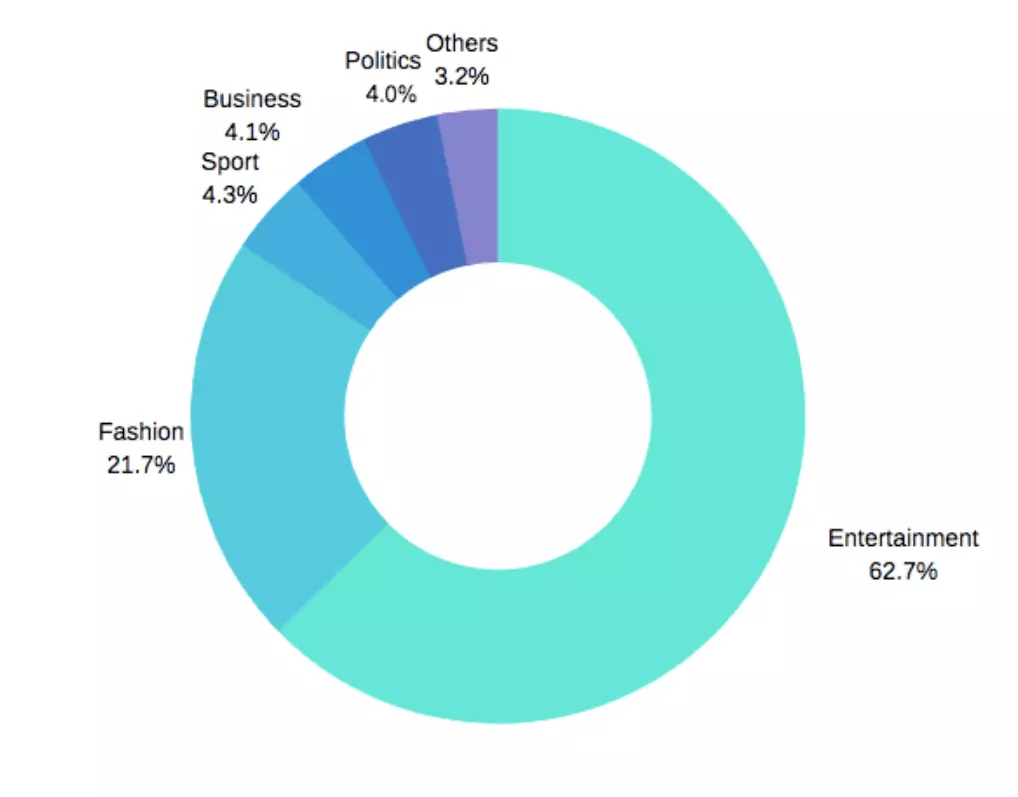

When deepfakes first gained popularity in 2017, they were overwhelmingly used in internet forums to generate fake pornography of celebrities. Even in 2019, 96% of all publicly posted deepfakes were pornographic, according to a survey done by the company Sensity. The targets of deepfakes (the people who have their faces swapped into deepfakes) are generally people who work in the entertainment industry.

Deepfakes in Media

Aside from pornography, various online creators have been applying deepfakes to other forms of media, namely movie clips; individuals are constantly producing viral videos that swap the faces of a movie’s actors with those of other actors. For example, in a recent YouTube video the faces of Arnold Schwarzenegger and Sylvester Stallone were added to the movie Step Brothers [9].

While such videos suggest deepfakes could prove to be useful for the entertainment industry, most of these viral videos have just been short demonstrations, and there has yet to be a major Hollywood production to utilize deepfakes. Though, some might say that Hollywood should already be using deepfakes. Netflix received a lot of criticism for the quality of the de-aging effect that was used in the movie “The Irishman”. In response, someone applied a de-aging deepfake to the movie and got impressive results; perhaps it’s only a matter of time until Hollywood productions use these tools directly.

While no large blockbusters have used deepfakes, there are some accounts of smaller productions experimenting with them. Most notably, a producer has used deepfakes in his documentary about gay and lesbian persecution in Chechnya to disguise the identities of the people being interviewed.

As the examples above demonstrate, besides the possible negative uses of deepfakes, they can also lead to new possibilities for the entertainment industry. For instance, it could be really cool to be able to select which actor you want to watch in a movie. And comedy impersonations like those on Saturday Night Live will no doubt also reach a whole new level with deepfakes. In fact, just the other week, the creators of South Park, Trey Parker and Matt Stone, started a new comedy series on YouTube called “Sassy Justice” centered around using deepfakes for celebrity impersonations. If you’re a fan of South Park, you should definitely check it out.

Deepfakes in Politics

Whenever a deepfake video gains attention online, there are always numerous comments from people expressing their concerns about using deepfakes to influence politics for nefarious purposes. These concerns are definitely valid: in the information age, being able to flood the internet with fake media about your political opponents that can fool viewers’ eyes and ears could be disastrous. To date, there have been quite a few deepfakes made addressing politics, many of which are satirical. One of the most popular was made by Jordan Peele, where he reenacted Obama’s face [10]. This was not done maliciously, but rather to raise awareness of the potential that deepfakes have to shape the political landscape.

Another example of using deepfakes to make a political statement is a pair of videos of Kim Jong-Un and Vladimir Putin discussing the election and the need for a peaceful transition of power.

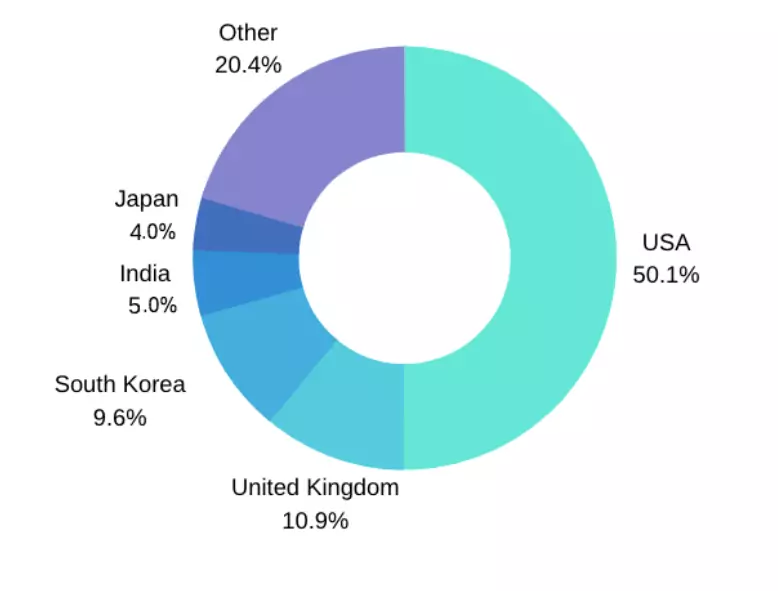

Only a few cases have been uncovered so far of non-satirical deepfakes being used to influence politics. But just because a deepfake is made with the intent to influence politics does not automatically mean it is malicious. Positive deepfakes can be used, for example, to let politicians reach a wider audience. An Indian politician, Manoj Tiwari, made a deepfake of an announcement he made to translate it into other languages, like English [11].

In addition to this deepfake of Tiwari, a decent portion of detected deepfakes target India, though the overwhelming majority target the US and UK. With the creation of deepfakes spreading all across the globe, deepfakes have the potential to influence far more than just US politics.

While no wars have been fought due to deepfakes, there have been instances of deepfakes being used to attack political opponents and ideas. A deepfake of Donald Trump speaking about the Paris climate agreement was produced by a Belgian political group to persuade people to sign a petition calling on the government to take more action against climate change [^3, 14].

Even though the quality of the deepfake is rather poor and the deepfake can easily be spotted by looking at his mouth, numerous commenters were deceived and were calling out Trump for the statements in the video. As deepfakes are increasing in quality, it not only becomes concerning that you may not be able to tell if a video was faked, but also that someone can claim a real incriminating video is actually fake. Last year, a Malaysian politician was jailed over a video of him engaging in homosexual activity (which is illegal in Malaysia) that the politician claims is a deepfake. Experts were not able to concretely determine if the video was faked or not. In the future, this defense of claiming a real video as a deepfake could be used for much more serious crimes.

Should I be worried?

As of today, there have not been any notable deepfakes that have targeted the 2020 US Election [3, 4], and in general fake news and disinformation are still bigger concerns [5]. That is, videos created by manual editing, like one showing Biden asleep during a TV interview [12], are currently a much greater concern than the output of deep learning models. On top of that, the largest sources of disinformation are rumors and forums, rather than any individual video [13].

Still, many are predicting deepfakes will inevitably make disinformation much worse than it is now. Luckily, as deepfake technology has progressed, so have methods for detecting them. There have been multiple competitions hosted on Kaggle designed to create better deepfake detectors [14]. Additionally, a number of datasets have been collected to help train these detectors [15, 16, 17].

Deepfake detectors are trained to find a host of different deepfake identifiers in images and videos. For example, Face X-ray is a method designed to look for the boundaries left when a deepfake face is blended back into the target image or video. Another method compares the background of the image with the face to see if there are discrepancies between the two. An emotion recognition network has also been used to check whether the emotions shown on the face match the context and audio of the scene, on the idea that mismatched behavior indicates a deepfake. Human biology can also be used to detect deepfakes, and even to identify the deepfake model used, by detecting heartbeats from the target of the video and analyzing the residuals of that signal. Most of these deepfake detection works have open-source code available, and there are also some commercially available APIs, for example Sensity, Deepware, and Microsoft Video Authenticator.
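To make the blending-boundary idea a bit more concrete, here is a minimal sketch of the kind of boundary map that, as I understand the Face X-ray formulation, the detector is trained to predict. It assumes we already know the soft blending mask (1 inside the pasted face, 0 in the untouched background, fractional values where the two are mixed), which a real detector does not; the toy masks, the function names, and the 0.5 threshold are all illustrative assumptions.

```python
def boundary_map(mask):
    """Compute 4 * m * (1 - m) per pixel of a soft blending mask.

    The value is 0 where a pixel is purely background (m = 0) or purely
    pasted face (m = 1), and peaks at 1 where the two are mixed equally.
    """
    return [[4.0 * m * (1.0 - m) for m in row] for row in mask]


def has_blending_boundary(mask, threshold=0.5):
    """Hypothetical helper: flag a mask whose boundary map has a strong response."""
    return any(b >= threshold for row in boundary_map(mask) for b in row)


# Toy 4x4 masks (assumed, not taken from any dataset).
blended = [
    [0.0, 0.0, 0.0, 0.0],
    [0.0, 0.5, 0.5, 0.0],
    [0.0, 0.5, 1.0, 0.5],
    [0.0, 0.0, 0.5, 0.0],
]
untouched = [[0.0] * 4 for _ in range(4)]

print(has_blending_boundary(blended))
print(has_blending_boundary(untouched))
```

An unmanipulated image has an all-zero mask and therefore an all-zero boundary map, while a face swap leaves a ring of non-zero values around the pasted region. The hard part, which this sketch skips entirely, is training a network to recover that map from pixels alone.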

In the literature, these detectors are compared based on their accuracy at detecting real or fake videos on common deepfake datasets. Some of the best models are able to achieve upwards of 99% accuracy on these datasets. This indicates that these datasets have been solved and there needs to be more research done to generate new datasets using state-of-the-art deepfake technologies. Since these methods rarely show results on deepfakes “in the wild,” I decided to take the winner of a Kaggle competition and try it out on some of the deepfakes shown in this post.

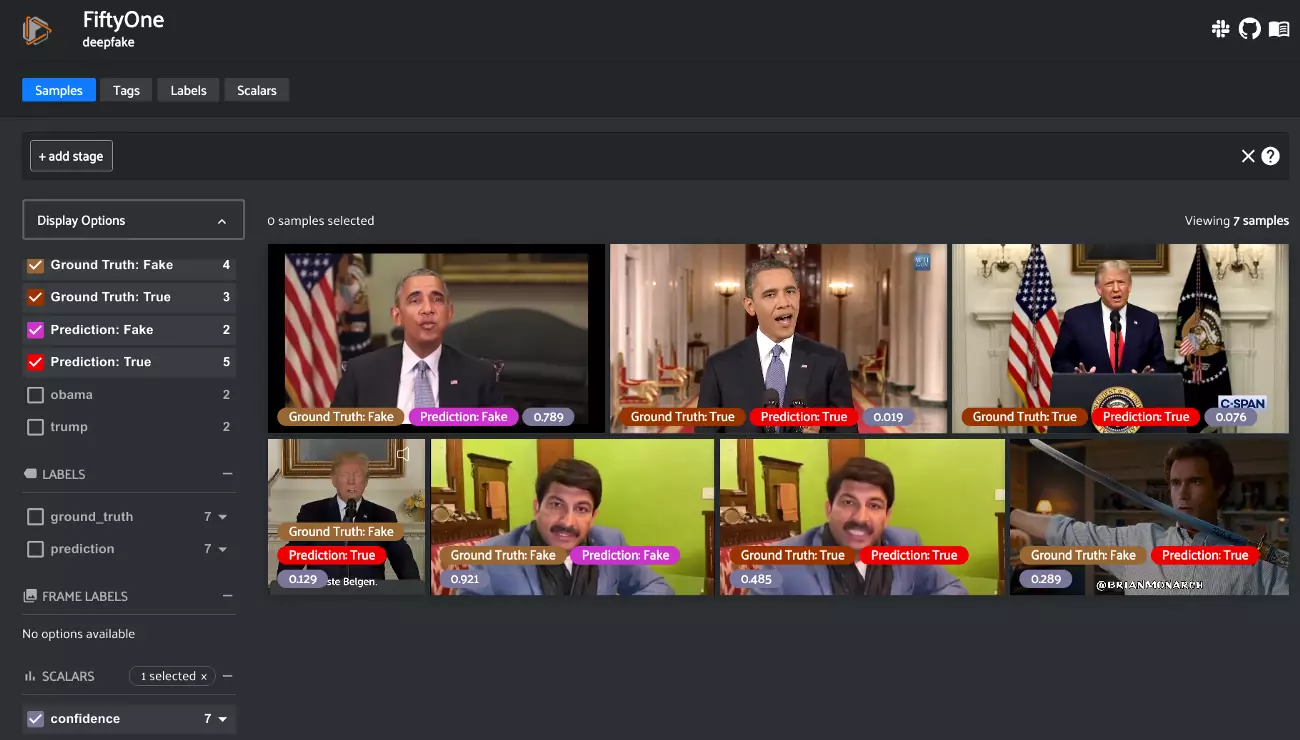

Above are deepfake detector results on videos in this post shown in our ML visualization tool FiftyOne. Download this example here.

This deepfake detection model was not able to identify the Step Brothers or Donald Trump videos as fake. The Step Brothers example is pretty seamless even to a human observer, but the video of Donald Trump is clearly a fake if you pay attention to the artifacts around the mouth. Thus, even though these models perform well on common deepfake detection datasets, their performance in real-world scenarios is not as consistent as one would hope. If you are interested in performing such exploration yourself, you can check out the Jupyter notebook write-up showing the process and results.
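As a rough illustration of how a detector can look strong on a benchmark and still miss videos in the wild, here is a small sketch of turning per-frame fake scores into a video-level verdict and computing accuracy. The scores below are made up for illustration and are not the outputs from my notebook; averaging frame scores and thresholding at 0.5 is an assumed aggregation rule, not necessarily what the Kaggle-winning model does.

```python
from statistics import mean


def video_is_fake(frame_scores, threshold=0.5):
    """Call a video fake if its mean per-frame fake probability reaches the threshold."""
    return mean(frame_scores) >= threshold


def accuracy(predictions, labels):
    """Fraction of videos whose predicted label matches the true label."""
    correct = sum(p == y for p, y in zip(predictions, labels))
    return correct / len(labels)


# Hypothetical per-frame scores (probability that each frame is fake).
frame_scores = {
    "benchmark_fake": [0.90, 0.80, 0.95],
    "benchmark_real": [0.10, 0.05, 0.20],
    "in_the_wild_fake": [0.30, 0.20, 0.40],  # a fake the detector scores low
}
true_labels = {
    "benchmark_fake": True,
    "benchmark_real": False,
    "in_the_wild_fake": True,
}

names = list(frame_scores)
predictions = [video_is_fake(frame_scores[n]) for n in names]
labels = [true_labels[n] for n in names]

print(dict(zip(names, predictions)))
print(accuracy(predictions, labels))
```

In this toy setup the two benchmark-style videos are classified correctly while the in-the-wild fake slips under the threshold, which is the pattern described above: accuracy measured only on benchmark data would not reveal the miss.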

This suggests that there is a need to continue research in deepfake detectors before deepfakes become more prevalent. Luckily, high-quality deepfakes still require human intervention to touch up the output, which will slow their spread and give deepfake detection models time to improve.

Spot the deepfake

The best way to prevent deepfakes from being used maliciously is to train people to spot the telltale signs of a deepfake so they don’t accidentally fall for one. While some deepfakes are really well made, there are often common traits that can give them away.

According to MIT researcher Matt Groh, if you think that you might be seeing a deepfake, you should look particularly closely at the:

- Face: Are parts of the face seeming to “glitch”? Are their eyebrows and facial muscles moving when they talk? Does their hair seem to be falling naturally?

- Audio: Does the audio match up with the expressions on the person’s face? Are there any weird cuts or jumps that sound unnatural in the audio?

- Lighting: Is there a portion of the video where the lighting doesn’t match the rest of the scene? Are you seeing reflections in things like glasses?

You can see how good you are at spotting deepfakes by checking out detectfakes.media.mit.edu. This website provides examples from the Deepfake Detection Kaggle challenge to test whether you can spot deepfakes as well as a deepfake detection model.

Summary

Deepfakes can lead to a potentially terrifying future of misinformation in the media. However, the current state of the technology is still in its infancy and high-quality deepfakes require a significant human touch. As deepfakes are improving, so are the methods and algorithms to counter them. At least for now, a much greater concern is the spread of misinformation via manually edited images and videos.

About Me

My name is Eric Hofesmann. I received my master’s in Computer Science, specializing in Computer Vision, at the University of Michigan. During my graduate studies, I realized that it was incredibly difficult to thoroughly analyze a new model or dataset without serious scripting to visualize and search through outputs and labels. Working at the computer vision startup Voxel51, I helped develop the tool FiftyOne so that researchers can quickly load up and start looking through datasets and model results. Follow me on Twitter @ehofesmann

Citation

This piece is an updated and expanded version of the blog post Have Deepfakes influenced the 2020 Election?.

For attribution in academic contexts or books, please cite this work as

Eric Hofesmann, “The State of Deepfakes in 2020”, Skynet Today, 2020.

BibTeX citation:

@article{hofesmann2020state,

author = {Hofesmann, Eric},

title = {The State of Deepfakes in 2020},

journal = {Skynet Today},

year = {2020},

howpublished = {\url{https://skynettoday.com/overviews/state-of-deepfakes-2020}},

}

Tools Used

Data Sources

- https://www.youtube.com/watch?v=2Tar2O4q0qY&feature=emb_title

- https://www.youtube.com/watch?v=88GUbuL89bQ&feature=emb_title

- https://www.facebook.com/Vlaamse.socialisten/videos/10155618434657151/

- https://www.youtube.com/watch?v=uXwmSFjlVc0&feature=emb_title

1. S. Malm, How Hitler, Mussolini, Lenin and Chairman Mao used photo editing to aid their propaganda: Before-and-after images reveal how they carefully managed their image, Daily Mail (2017)

2. Media Forensics (MEDIFOR), DARPA

3. O. Schwartz, You thought fake news was bad? Deep fakes are where truth goes to die, The Guardian (2018)

4. G. Shao, Fake videos could be the next big problem in the 2020 elections, CNBC (2019)

5. D. O’Sullivan, False video of Joe Biden viewed 1 million times on Twitter, CNN (2020)

6. I. Goodfellow, et al., Generative adversarial nets, NeurIPS (2014)

7. S. Cole, We Are Truly Fucked: Everyone Is Making AI-Generated Fake Porn Now, Vice (2018)

8. Y. Mirsky, W. Lee, The Creation and Detection of Deepfakes: A Survey, arXiv (2020)

9. B. Monarch, Instagram (2020)

10. J. Vincent, Watch Jordan Peele use AI to make Barack Obama deliver a PSA about fake news, The Verge (2018)

11. N. Christopher, [We’ve Just Seen the First Use of Deepfakes in an Indian Election Campaign](https://www.vice.com/en/article/jgedjb/the-first-use-of-deepfakes-in-indian-election-by-bjp), Vice (2020)

12. White House social media director tweets manipulated video to depict Biden asleep in TV interview, Washington Post (2020)

13. M. Parks, Fake News: How to spot misinformation, NPR (2019)

14. Deepfake Detection Challenge, Kaggle (2020)

15. Y. Li, et al., Celeb-DF: A Large-scale Challenging Dataset for DeepFake Forensics, CVPR (2020)

16. A. Rossler, et al., FaceForensics++: Learning to detect manipulated facial images, ICCV (2019)

17. H. Dang, et al., On the detection of digital face manipulation, CVPR (2020)