Last Week in AI #74

Deep learning's compute limits, invisible AI in healthcare, and more!

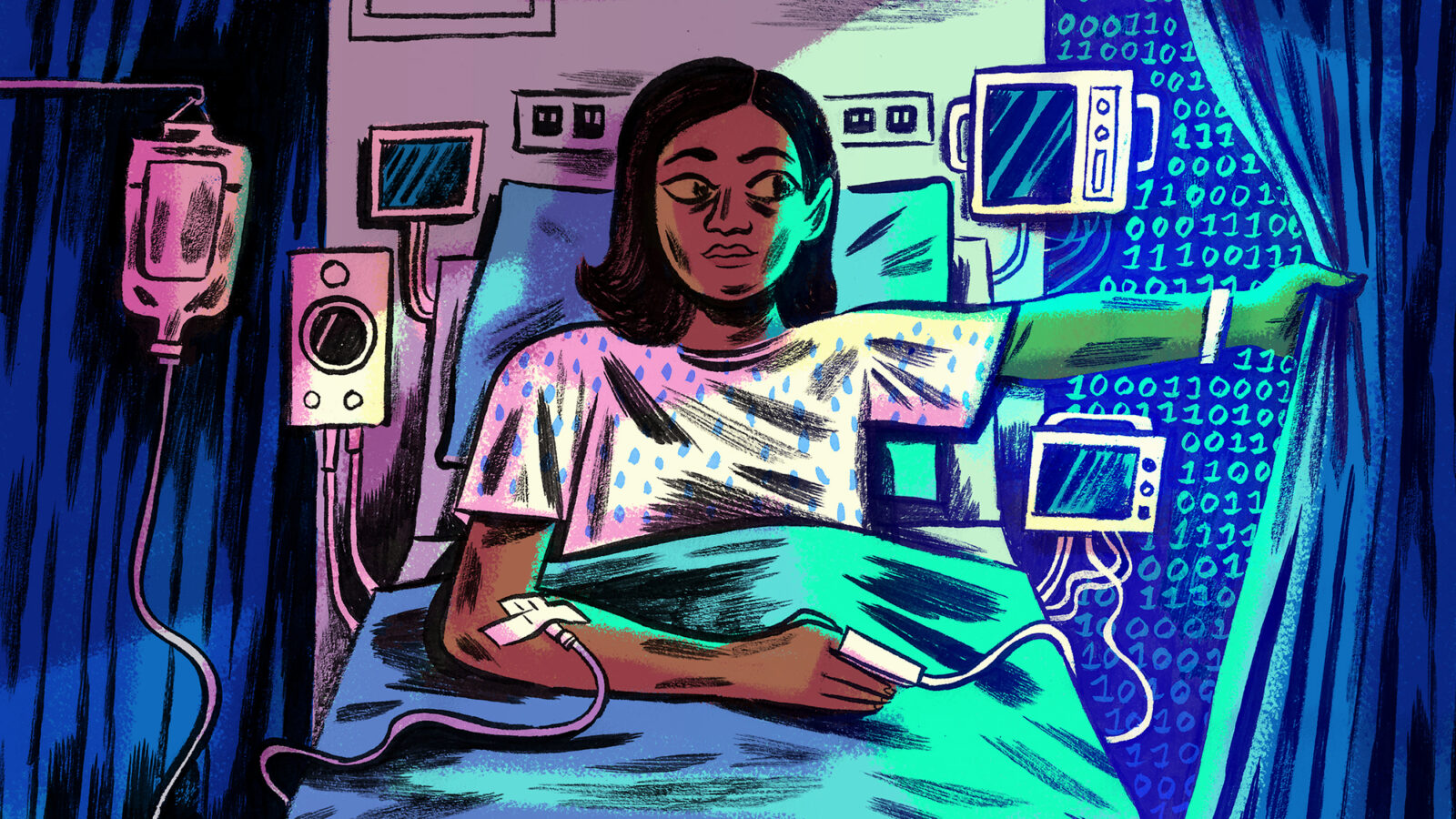

Image credit: Mike Reddy / STAT

Mini Briefs

MIT researchers warn that deep learning is approaching computational limits

The advancement of deep learning relies heavily on advances in compute. Without powerful GPUs and similar hardware developments, new, computationally expensive methods such as Neural Architecture Search would not be possible today. Researchers at the Massachusetts Institute of Technology, Underwood International College, and the University of Brasilia conducted a study whose results, they claim, tell us that we are approaching the computational limits of deep learning. The researchers analyzed 1,058 papers from arXiv, paying attention to the computation used in one pass of each deep learning model studied and the capability of the hardware used to train each of those models. Based on the trends they found, the researchers anticipate that unless deep learning uses computational power more efficiently, training state-of-the-art models will exact prohibitive hardware, environmental, and monetary costs.

An invisible hand: Patients aren’t being told about the AI systems advising their care

The coronavirus outbreak has brought news about advances in medical AI to the fore, showing how researchers are attempting to use state-of-the-art methods for applications such as epidemic forecasting and drug discovery. But AI is also being used for more routine, everyday tasks: since February 2019, AI has played a role in making discharge decisions for tens of thousands of patients hospitalized in one of Minnesota’s largest health systems, but the patients are unaware of the AI’s involvement. This case reflects a growing role for AI in everyday healthcare decisions. While some use cases may be productive, it is well known that AI systems can be fraught with bias.

If patients are not informed of AI’s role in their care, its potentially biased decisions may cause harm without their knowledge. Doctors and nurses who withhold information about the use of AI worry that disclosure would derail conversations with their patients and undermine trust, but these very worries indicate that those healthcare workers should be taking a robust, open approach to evaluating the AI’s usefulness, integrating it into their approach, and informing patients affected by its decisions. However, since disclosure of the use of AI-powered support tools falls into a regulatory gray zone, there is little incentive for hospitals to be completely transparent. Harvard Law School’s Glenn Cohen believes that doctors and nurses should be having frank conversations about the issue of disclosure. Just as he worries, it is likely that patients who find out that AI was used to make healthcare decisions without their knowledge will lose trust in the technology.

Podcast

Check out our weekly podcast covering these stories! Website | RSS | iTunes | Spotify | YouTube

News

Advances & Business

- GPT-3: An AI that’s eerily good at writing almost anything - I got access to the OpenAI GPT-3 API and I have to say I’m blown away. It’s far more coherent than any AI language system I’ve ever tried. All you have to do is write a prompt and it’ll add text it thinks would plausibly follow.

- A new way to train AI systems could keep them safer from hackers - The context: One of the greatest unsolved flaws of deep learning is its vulnerability to so-called adversarial attacks. When added to the input of an AI system, these perturbations, seemingly random or undetectable to the human eye, can make things go completely awry.

- Disney’s new AI is facial recognition for animation - The system could revolutionize how we search and discover streaming content.

- Powerful AI Can Now Be Trained on a Single Computer - New machine learning training approach could help under-resourced academic labs catch up with big tech.

Concerns & Hype

- Prepare for Artificial Intelligence to Produce Less Wizardry - A new paper argues that the computing demands of deep learning are so great that progress on tasks like translation and self-driving is likely to slow.

- DeepMind researchers propose rebuilding the AI industry on a base of anticolonialism - Researchers from Google’s DeepMind and the University of Oxford recommend that AI practitioners draw on decolonial theory to reform the industry, put ethical principles into practice, and avoid further algorithmic exploitation or oppression.

- Why are Artificial Intelligence systems biased? - A machine-learned AI system used to assess recidivism risks in Broward County, Fla., often gave higher risk scores to African Americans than to whites, even when the latter had criminal records.

- Deepfake used to attack activist couple shows new disinformation frontier - Oliver Taylor, a student at England’s University of Birmingham, is a twenty-something with brown eyes, light stubble, and a slightly stiff smile. The catch? Oliver Taylor seems to be an elaborate fiction.

- German court bans Tesla ad statements related to autonomous driving - Germany has banned Tesla from repeating what a court says are misleading advertising statements relating to the capabilities of the firm’s driver assistance systems and to autonomous driving, a Munich judge ruled on Tuesday. Tesla can appeal the ruling.

Analysis & Policy

- Pentagon AI Gains “Overwhelming Support” From Tech Firms - Even Google - Despite past battles over Project Maven and other military uses of AI, “Google and many others” are now working with the Pentagon’s Joint Artificial Intelligence Center, its new acting director says.

Expert Opinions & Discussion within the field

- Announcing nominees for the second annual Women in AI Awards - The women nominated below have all made outstanding contributions in the AI field, from advancing the work in ethics and fairness in AI, to trailblazing research critical to AI innovation, to ensuring young women entering the field have the opportunity and mentorship necessary to thrive.

That’s all for this week! If you are not subscribed and liked this, feel free to subscribe below!