Google's LYmph Node Assistant - a Boost, not Replacement, for Doctors

A new tool from Google promises 99 percent accuracy in identifying cancer in lymph nodes - but it's too early to claim it surpasses humans

Image credit: Photo from Google's blog post

What Happened

On October 12, Google announced a new AI tool in a blog post accompanied by two papers.

The work is a follow up to last year’s announcement of the LYmph Node Assistant (LYNA) algorithm, which achieved high accuracy in a competition. This month’s research validates earlier results and shows how the tool can be used by doctors to improve their decision making.

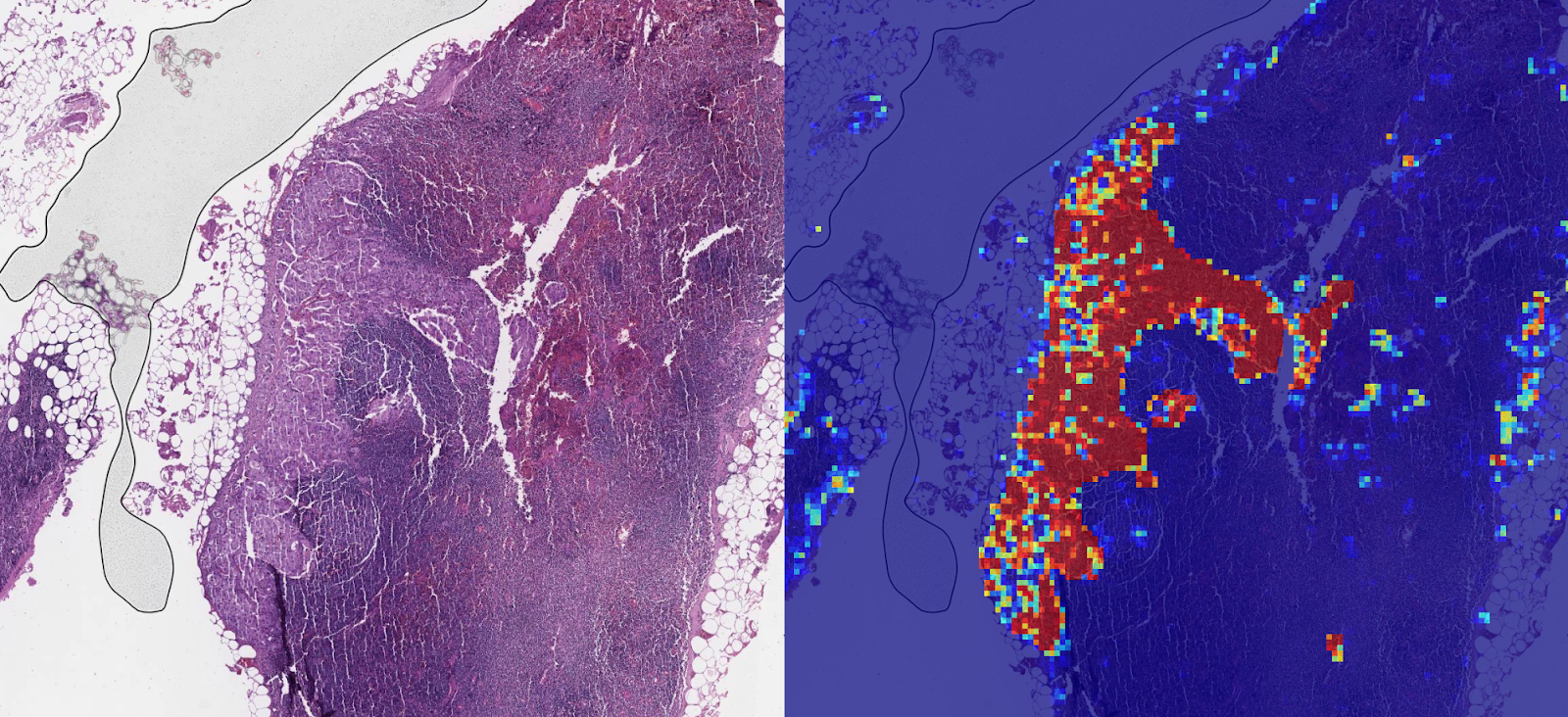

LYNA is a neural network for identifying cancer cells in lymph node biopsies. Because lymph nodes collect fluid throughout the body, they are a common site for cancer to spread. Cancers that have spread to lymph nodes often require more-aggressive treatments like chemotherapy and radiation.

Unfortunately, identifying cancer cells in biopsies is a tedious and error-prone job for pathologists, since only a small fraction of cells may be cancerous. Google points to research showing that about 1 in 4 staging decisions for breast cancer metastasis would be changed after a second look.

In the first paper, Google applied the algorithm to biopsy images from two independent medical centers, which is important because the centers use different techniques for preparing the images. LYNA proved robust to these differences, showing about 99% accuracy on both data sets.

In the second paper, Google put LYNA in the hands of six pathologists and tested how well they could detect small ‘micrometastases’. Working together with LYNA, the pathologists were able to complete the task in half the time and with greater accuracy than either a pathologist or algorithm alone.

The Reactions

The studies received some media coverage:

- Venture Beat does a great job summarizing the research and contextualizing it in the overall context of breast cancer. It also outlines a few weaknesses of the algorithm and briefly mentions future directions.

- Engadget has a three-paragraph summary of the research that unfortunately gets a few details wrong. It questions the clinical value of the algorithm because it only looks “for late-stage breast cancer where there’s no known solution,” but sensitive cancer staging is still valuable because it allows aggressive treatment to start before the cancer advances.

- Becker Hospital Review summarizes the research accurately and emphasizes that its clinical value lies in making pathologists faster and more accurate, not necessarily replacing them. The distinction is of course important for hospitals considering whether to adopt similar technologies.

- BGR, reprinted in the NY Post, has a short but comprehensive summary of the research. It correctly identifies the accuracy of the method, the significance of the problem, and that it can work side-by-side with pathologists. However, we take issue with the headline “Google’s AI is now better than doctors at spotting breast cancer.”

Although the above sources generally covered the topic well, many other sources made the same mistakes as BGR by claiming that LYNA is “better than” pathologists:

- Google AI’s LYNA Better Than Humans In Detecting Advanced Breast Cancer — Healthcare Weekly

- Google’s AI is now better than doctors at spotting breast cancer — BGR

- A Google Tool Is Better Than Pathologists At Detecting Advanced Breast Cancer — A Plus

- Google’s AI can spot breast cancer better than humans — The Telegraph

- Google’s AI tool ‘LYNA’ Is Better Than Doctors At Spotting Breast Cancer — Wonderful Engineering

- Google’s AI Tool Can Identify One Type of Breast Cancer More Accurately Than Human Doctors — Fortune

- Google’s AI may be better than doctors at spotting breast cancer — New York Post

- Google AI Detects Breast Cancer Better Than Humans — Geek.com

This is a strong and irresponsible claim, especially because the Google authors are upfront about the limitations of their work: pathologists in their study could only see one image, whereas they are typically able to examine multiple. It’s also possible that the pathologists were less-motivated knowing the work was for a research study instead of real patient care. Without a more-realistic test and formal statistical analysis, it’s too early to say LYNA is better than human experts.

Many articles also mistakenly muddy the timeline — Google originally announced the project over a year ago, and the new research is an incremental improvement. It’s also incorrect to call LYNA an “AI” (artificial intelligence), when it is really a computer program that uses AI techniques to classify pathology images. It’s not an “intelligence” in itself.

Our Perspective

This is excellent follow up research to last year’s LYNA announcement. It improves baseline accuracy, demonstrates robustness to common image artifacts, and, crucially, shows that pathologists and LYNA can work together to become faster and more accurate. It is an important demonstration that current AI techniques are more likely to augment human abilities than replace them.

However, the research will likely need to be scaled up significantly before LYNA can help real patients. The algorithm is so far trained and tested on only about ~500 images from three medical centers and used by only six practicing pathologists. LYNA will likely need to be tested with more biopsy samples, centers, and pathologists before it can win FDA approval. It would also be nice to generalize LYNA to other cancers like lung cancer, which kills far more people in the United States than any other cancer.

Media coverage was overall good, but most outlets ran quick articles without interviewing or quoting experts. It would have been nice to interview physicians or AI researchers, who could contextualize the research or ensure the accuracy of technical details. Numerous outlets ran sensational headlines claiming the LYNA outperforms doctors, but the results are too preliminary to make this claim.

From the perspective of AI research, it’s heartening to see a three-year-old deep learning technique (Inception-V3, the same architecture used in Google’s DeepVariant) achieving such strong performance on the problem of diagnosing cancer. With powerful building blocks like Inception-V3 available, deep learning researchers can focus on harder problems like how humans and deep neural networks can work together.

TLDR

Google’s LYNA is an extremely promising approach for assisting pathologists in a challenging cancer diagnosis task. It has cleared early hurdles, but must still prove itself in larger clinical trials before it can help real patients. It also provides a model for how deep learning algorithms can assist doctors in their jobs.

Disclosure: I worked for Google and still have some equity.