Last Week in AI #35

AI-powered X-Ray vision, the state of Deep Fakes, and more!

Mini Briefs

Watch Out, MIT’s New AI Model Knows What You’re Doing Behind That Wall

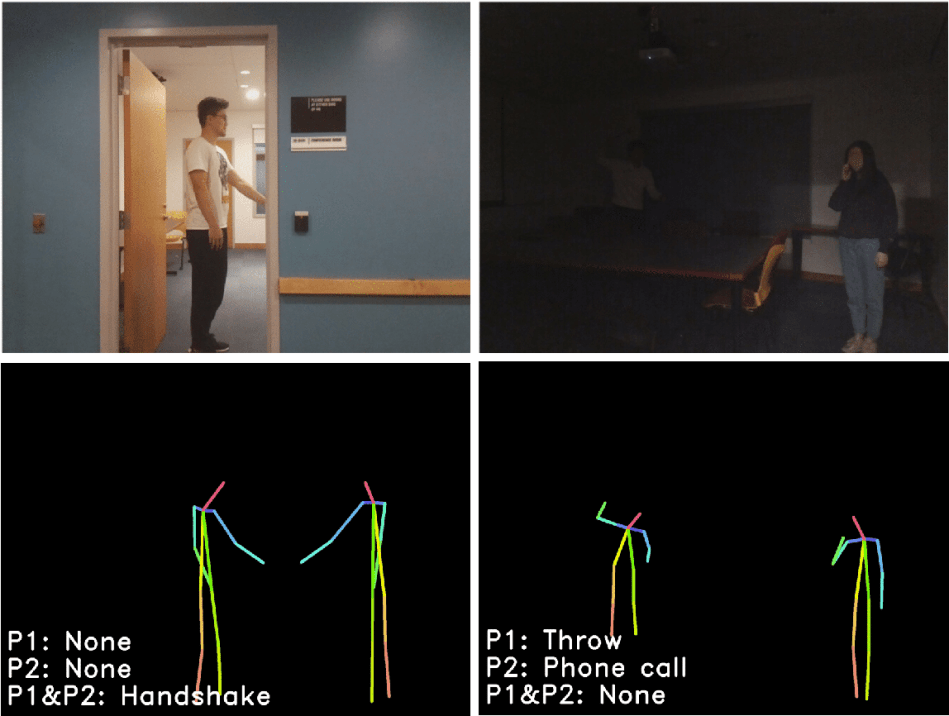

MIT’s Computer Science & AI Lab (CSAIL) has unveiled a neural network model that can detect human actions through walls or in extremely dark places. Previous camera-based approaches to computer vision could only sense visible light, but the MIT CSAIL researchers “overcame these challenges by using radio signals in the WiFi frequencies, which can penetrate occlusions.” SyncedReview describes the model:

Their “RF-Action” AI model is an end-to-end deep neural network that recognizes human actions from wireless signals. The model uses radio frequency (RF) signals as input, generates 3D human “skeletons” as an intermediate representation, and can track and recognize actions and interactions of multiple people. The skeleton step enables the model to learn not only from RF-based datasets, but also from existing vision-based datasets.

This technology’s ability to see in the dark and through walls might spark further concern about AI-powered surveillance and security technology, but the MIT researchers believe otherwise. Because the model’s only inputs are radio signals, they argue, the system cannot identify personal information. And while the model is a fascinating development in computer vision, there is still plenty of work to be done in this space.
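For readers who want a concrete picture of the two-stage design described in this brief, here is a minimal Python sketch. It is not the RF-Action model: every name in it (`make_pipeline`, `rf_front_end`, `video_front_end`, `toy_classifier`) is hypothetical, and the learned networks are replaced by toy rules. It only illustrates why a shared skeleton representation lets one action classifier serve both RF and vision data.

```python
from typing import Callable, List, Tuple

Joint = Tuple[float, float, float]   # (x, y, z) position of one joint
Skeleton = List[Joint]               # one person's joints in one frame
SkeletonSeq = List[Skeleton]         # skeletons over time


def make_pipeline(to_skeletons: Callable[[list], SkeletonSeq],
                  classify: Callable[[SkeletonSeq], str]) -> Callable[[list], str]:
    """Compose a modality-specific front end with a shared action classifier."""
    def run(raw_input: list) -> str:
        return classify(to_skeletons(raw_input))
    return run


def rf_front_end(rf_frames: List[List[float]]) -> SkeletonSeq:
    # Hypothetical stand-in for a learned RF-to-skeleton network:
    # treat the first three values of each RF frame as a single joint.
    return [[(frame[0], frame[1], frame[2])] for frame in rf_frames]


def video_front_end(keypoints: SkeletonSeq) -> SkeletonSeq:
    # Assumption: the vision dataset already provides 3D keypoints.
    return keypoints


def toy_classifier(seq: SkeletonSeq) -> str:
    # Toy rule in place of a trained classifier: compare the height (z)
    # of the first joint in the first and last frames.
    first_z = seq[0][0][2]
    last_z = seq[-1][0][2]
    return "sitting down" if last_z < first_z else "standing"


# The same classifier is reused behind both front ends.
rf_model = make_pipeline(rf_front_end, toy_classifier)
video_model = make_pipeline(video_front_end, toy_classifier)
```

Because both front ends emit the same skeleton format, anything the classifier learns from vision-based skeletons carries over to RF inputs, which is the benefit the SyncedReview description attributes to the skeleton step.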

The biggest threat of deepfakes isn’t the deepfakes themselves

In late 2018, a video of the missing president of Gabon, Ali Bongo, surfaced. Bongo hadn’t been seen in public for months, and the government had announced that he had suffered a stroke but remained in good health. However, citizens noticed that Bongo looked off in the video footage and began to suspect it was a deepfake–“a piece of media forged or altered with the help of AI.” The video was followed by an unsuccessful military coup, and while no alterations were found in the video, it raised grave concerns:

In the lead-up to the 2020 US presidential elections, increasingly convincing deepfake technology has led to fears about how such faked media could influence political opinion. But a new report from Deeptrace Labs, a cybersecurity company focused on detecting this deception, found no known instances in which deepfakes have actually been used in disinformation campaigns. What’s had the more powerful effect is the knowledge that they could be used that way.

In a world in which extremely realistic-looking videos and pictures can be made with the help of AI, situations like Gabon’s may become increasingly common. The mere idea of deepfakes has the potential to scare people into being unable to believe even things that are real. It’s the second threat, that deepfakes could be used in misinformation campaigns, that tech companies and policymakers need to address. Without robust and effective countermeasures, the mere existence of deepfakes threatens to instill a skepticism in people that could cause them to question everything, real or fake.

Advances & Business

- Scaling responsible machine learning at the BBC - An overview of the BBC Machine Learning Principles, describing how “BBC’s machine learning engines will support public service outcomes (i.e. to inform, educate and entertain) and empower our audiences.”

- The Style-Quantifying Astrophysicists of Silicon Valley - Chris Moody knows a thing or two about the universe. As an astrophysicist, he built galaxy simulations, using supercomputers to model the way the universe expands and how galaxies crash into one another. He belongs to a growing group of astrophysicist deserters, who have stopped researching the cosmos to start building recommendation algorithms and data models for the tech industry.

- Could machine learning put impoverished communities back on the map? - Satellite images reveal enormous amounts of information about oncoming hurricanes, military troop movements and changes to the polar ice cap. Stanford computer scientist Stefano Ermon recently embarked on a two-year study that builds on research in which his team created machine learning models to accurately infer poverty and wealth at the community level from satellite imagery.

- How deepfakes evolved so rapidly in just a few years - You probably saw that recent report about how 96% of online deepfake videos are pornographic. That’s sure to change in the coming years, veering into political manipulation and propaganda, says the head of Deeptrace Labs, the company behind the study.

- Driverless cars are stuck in a jam - Few ideas have enthused technologists as much as the self-driving car. Advances in machine learning, a subfield of artificial intelligence (AI), would enable cars to teach themselves to drive by drawing on reams of data from the real world.

- There’s An Art To Artificial Intelligence - As hard as it is to believe, artificial intelligence (AI) was rarely mentioned in the discussions related to digital transformation that began almost a decade ago. Now, no mention of transformation would make sense without how AI is making it all possible.

- AI Could Prevent Marital Arguments Before They Even Begin - Researchers use listening devices and algorithms to detect speech patterns that typically precede fights. A group of four engineers and psychologists are trying to develop AI systems that use speech patterns and physiological, acoustic and linguistic data from wearable devices and smartphones to detect conflict between couples.

Concerns & Hype

- This is how you kick facial recognition out of your town - In San Francisco, a cop can’t use facial recognition technology on a person arrested. But a landlord can use it on a tenant, and a school district can use it on students. While there is growing momentum toward curtailing private surveillance, a quick federal ban is not in the cards.

- Miami Police Want to Spend $70K on Powerful Cell-Phone Spying Software - Back in 2018, New Times reported that the Miami-Dade Police Department wanted to renew its longstanding contract with a Nebraska company called PenLink, which sells some extremely powerful cell-phone tracking technology to local cops. The MDPD now claims cops will use the service to find missing persons and “violent offenders” throughout the city.

- Passport facial recognition checks fail to work with dark skin - When it launched a new passport checking service, the Home Office knew that it had trouble handling some shades of skin, a report in the New Scientist has revealed. The facial recognition technology failed to recognise the features of some people with very light or dark skin.

- Prepare for the Deepfake Era of Web Video - It has always been good advice to take what you see on the internet with a pinch of salt, but online video has lately become even less trustworthy. Deepfakes are now appearing in web videos, and many current ones are being used to harass and discredit women journalists and activists.

Analysis & Policy

- The US just blacklisted 8 Chinese AI firms. It could be what China’s AI industry needs. - The US Commerce Department has said it is adding 28 Chinese government organizations and private businesses, including eight tech giants, to its so-called Entity List for acting against American foreign policy interests.

- Political “deepfake” videos banned in California before elections - Ahead of what is expected to be a contentious election next year, California has made it illegal to distribute deceptively edited videos and audio clips intended to damage a politician’s reputation or deceive someone into voting for or against a candidate.

Expert Opinions & Discussion within the field

- AI in 2019: A Year in Review - On October 2nd, the AI Now Institute at NYU hosted its fourth annual AI Now Symposium to another packed house at NYU’s Skirball Theatre. The Symposium focused on the growing pushback to harmful forms of AI, inviting organizers, scholars, and lawyers onstage to discuss their work.

- Machine behaviour is old wine in new bottles - The call of Iyad Rahwan and colleagues for a science of “machine behaviour” that empirically studies artificial intelligence (AI) “in the wild” is an example of “columbusing”.

- Debate on Instrumental Convergence between LeCun, Russell, Bengio and More - A public debate about instrumental convergence among big names in the field of AI.

That’s all for this week! If you are not subscribed and liked this, feel free to subscribe below!