Last Week in AI #71

AI bias, flawed face recognition leads to wrongful arrest, and more!

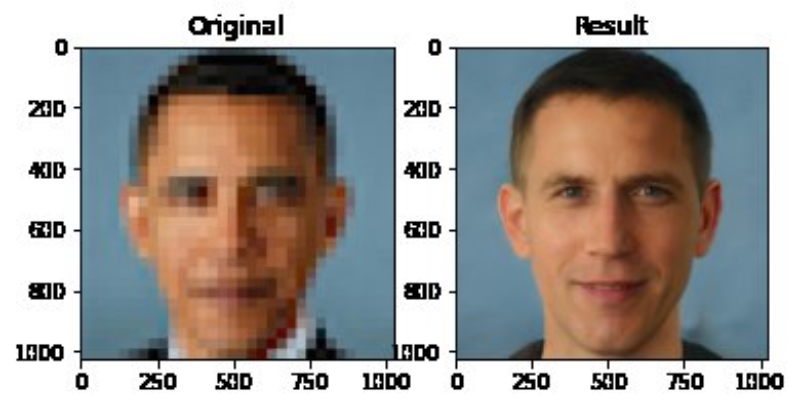

Image credit: @Chicken3gg via Twitter

Mini Briefs

AI Weekly: A deep learning pioneer’s teachable moment on AI bias

Researchers recently released a computer vision algorithm capable of realistically upsampling, or “deblurring,” photos of human faces. Others soon realized that the model produces predominantly White faces, even when the input is a low-resolution photo of a person of color. Yann LeCun, a renowned machine learning researcher and Turing Award winner, commented on Twitter that the source of this bias lies with the training dataset alone. This sparked a fierce debate on Twitter, where many pointed out that the roots of bias in AI extend far beyond datasets: a lack of diverse teams and limited awareness of social context also contribute to the problem.

Timnit Gebru, an AI ethics researcher and a co-author on the Gender Shades study, summarizes in her talk at an AI fairness workshop:

“Fairness is not just about data sets, and it’s not just about math. Fairness is about society as well, and as engineers, as scientists, we can’t really shy away from that fact.”

Wrongfully Accused by an Algorithm

Earlier this year, the Detroit Police Department wrongfully arrested a Black man because of an incorrect match from a face recognition system. This may be the “first known case of its kind,” where a flawed face recognition system led to the arrest of someone who did not commit the crime.

Commercial face recognition systems often perform poorly on people of color. The companies that provide these technologies caution that their face matches are only “investigative leads,” not “probable causes for arrest.” Still, as the article puts it, “Mr. Williams’s case combines flawed technology with poor police work, illustrating how facial recognition can go awry.”

Podcast

Check out our weekly podcast covering these stories! Website | RSS | iTunes | Spotify | YouTube

News

Advances & Business

- Apple’s AI plan: a thousand small conveniences - Because AI is too dumb to do anything else.

Concerns & Hype

- AI gatekeepers are taking baby steps toward raising ethical standards - AI is upending companies, industries, and humanity. For years, Brent Hecht, an associate professor at Northwestern University who studies AI ethics, felt like a voice crying in the wilderness.

- If AI is going to help us in a crisis, we need a new kind of ethics - What opportunities have we missed by not having these procedures in place? It’s easy to overhype what’s possible, and AI was probably never going to play a huge role in this crisis. Machine-learning systems are not mature enough.

- AI researchers condemn predictive crime software, citing racial bias and flawed methods - The controversial research is set to be highlighted in an upcoming book series by Springer, the publisher of Nature.

- Microsoft Says It Won’t Sell Facial Recognition To The Police. These Documents Show How It Pitched That Technology To The Federal Government. - Last week, Microsoft said it would not sell its facial recognition to police departments. But new documents reveal it was pitching that technology to at least one federal agency as recently as two years ago.

- Biased AI perpetuates racial injustice - The murder of George Floyd was shocking, but we know that his death was not unique. Too many Black lives have been stolen from their families and communities as a result of historical racism.

Analysis & Policy

- A new US bill would ban the police use of facial recognition - US Democratic lawmakers have introduced a bill that would ban the use of facial recognition technology by federal law enforcement agencies. Specifically, it would make it illegal for any federal agency or official to “acquire, possess, access, or use” biometric surveillance technology in the US.

- Trump’s freeze on new visas could threaten US dominance in AI - The president’s executive order to temporarily suspend H-1B visas exacerbates the US’s precarious position in the global competition for AI talent. Even before President Trump’s executive order on June 22, the US was already bucking global tech immigration trends.

Expert Opinions & Discussion Within the Field

- Joy Buolamwini Inks Deal With Random House for Book ‘Justice Decoded’ - The book is an “investigative look into the harms and biases of AI and other technologies.”

- AI experts say research into algorithms that claim to predict criminality must end - A coalition of AI researchers, data scientists, and sociologists has called on the academic world to stop publishing studies that claim to predict an individual’s criminality using algorithms trained on data like facial scans and criminal statistics.

That’s all for this week! If you are not subscribed and liked this, feel free to subscribe below!